However, I recently met other open source developers who make a living from donations, and they helped widen my perspective. At Amsterdam.js, I heard Henry Zhu speak about sustainability in the Babel project and beyond, and it was a pretty dire picture. Later, over breakfast, Henry and I had a deeper conversation on the topic. In Amsterdam I also met up with Titus, who maintains the Unified project full-time. Meeting these people confirmed my belief in the donation model for sustainability. It works. But what really stood out to me was the question: is it fair?

I decided to collect data from OpenCollective and GitHub, and take a more scientific sample of the situation. The results I found were shocking: there were two clearly sustainable open source projects, but the majority (more than 80%) of projects that we usually consider sustainable are actually receiving income below industry standards or even below the poverty threshold.

What the data says

I picked popular open source projects from OpenCollective and selected the yearly income data for each. Then I looked up their GitHub repositories to record the star count and how many “full-time” contributors they have had in the past 12 months. Sometimes I also looked up the Patreon pages of the few maintainers who had one, and added that income to the project's yearly income. For instance, it is obvious that Evan You gets money on Patreon to work on Vue.js. These data points allowed me to measure project popularity (a proportional indicator of the number of users), yearly revenue for the whole team, and team size.
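The per-project bookkeeping described above can be sketched as a small record type. This is a minimal sketch under stated assumptions: the `Project` class and its field names are hypothetical, and it assumes team revenue is simply OpenCollective income plus any Patreon income, split evenly across the full-time contributors.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """One row of a hypothetical data set; names and fields are illustrative."""
    name: str
    opencollective_yearly: float  # USD received via OpenCollective in a year
    patreon_yearly: float         # USD from maintainers' Patreon pages (0 if none)
    stars: int                    # GitHub star count
    full_time_contributors: int   # "full-time" contributors in the past 12 months

    @property
    def yearly_revenue(self) -> float:
        # Team revenue = OpenCollective income plus any Patreon income.
        return self.opencollective_yearly + self.patreon_yearly

    @property
    def salary_per_maintainer(self) -> float:
        # Assumption: revenue is shared evenly across the full-time team.
        if self.full_time_contributors <= 0:
            raise ValueError("project needs at least one full-time contributor")
        return self.yearly_revenue / self.full_time_contributors


example = Project("example-lib", 30_000, 6_000, 20_000, 2)
print(example.yearly_revenue)         # 36000
print(example.salary_per_maintainer)  # 18000.0
```

The even split is a simplification; real teams rarely divide income equally, but it gives one comparable number per project.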

[…]

Those who work full-time sometimes supplement their income with savings, by living in a country with a lower cost of living, or both (Sindre Sorhus).

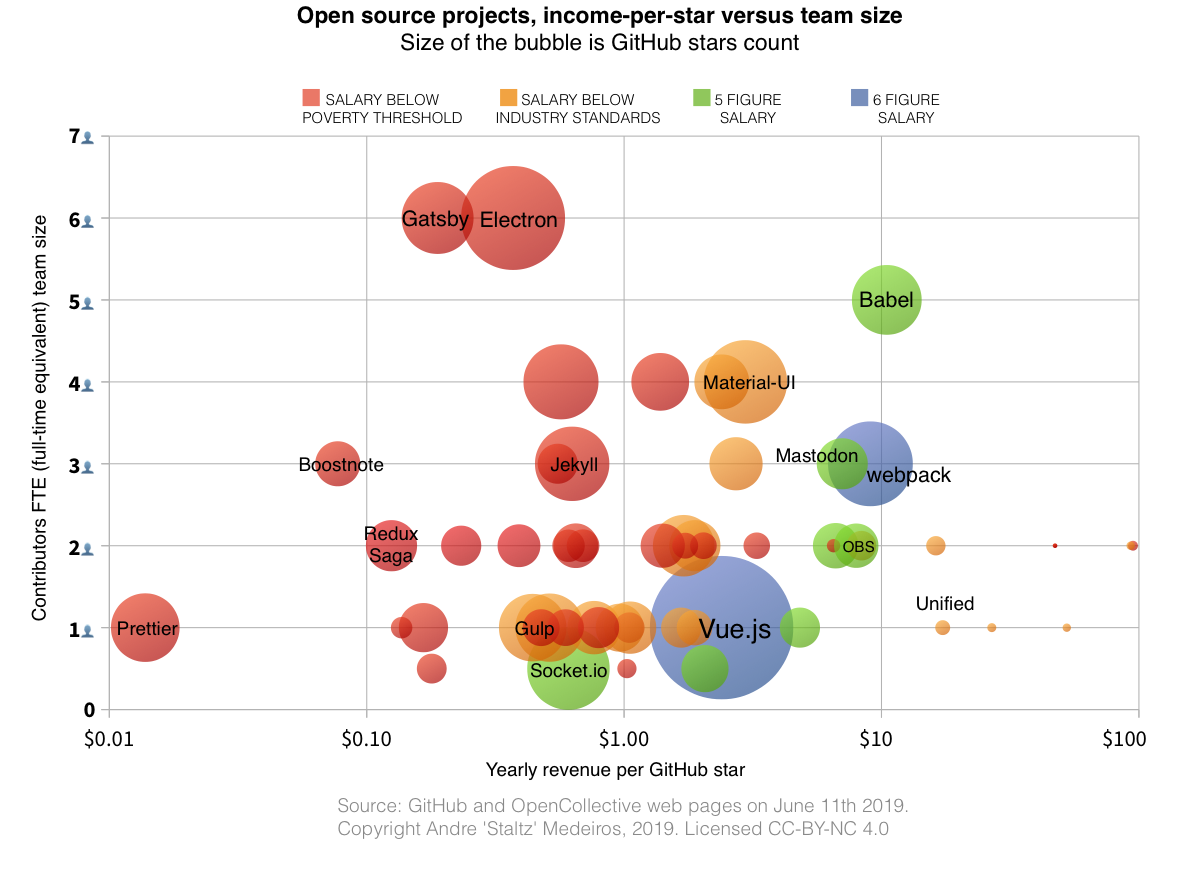

Then, based on the latest Stack Overflow developer survey, we know that the low end of developer salaries is around $40k, while the high end is above $100k. That range represents the industry standard for developers, given their status as knowledge workers, many of whom live in OECD countries. This allowed me to classify the results into four categories:

- BLUE: 6-figure salary

- GREEN: 5-figure salary within industry standards

- ORANGE: 5-figure salary below our industry standards

- RED: salary below the official US poverty threshold
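The four categories above amount to a threshold classifier over the per-maintainer salary. A minimal sketch, assuming the $40k and $100k boundaries from the survey figures and assuming that any salary at or above the poverty line but below $40k counts as orange. The US poverty threshold is passed in as a parameter because its exact value is not given here; the function name `classify` is hypothetical.

```python
INDUSTRY_LOW = 40_000   # low end of developer salaries (USD/year), per the survey
SIX_FIGURES = 100_000   # six-figure boundary (USD/year)


def classify(salary: float, poverty_threshold: float) -> str:
    """Map a yearly per-maintainer salary (USD) to the colour categories.

    poverty_threshold is supplied by the caller; it is not hard-coded.
    """
    if salary >= SIX_FIGURES:
        return "BLUE"    # 6-figure salary
    if salary >= INDUSTRY_LOW:
        return "GREEN"   # within industry standards
    if salary >= poverty_threshold:
        return "ORANGE"  # above the poverty line, below industry standards
    return "RED"         # below the poverty threshold
```

For example, with a placeholder threshold of 12,000, `classify(20_000, 12_000)` returns `"ORANGE"`.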

The first chart, below, shows team size and “price” for each GitHub star.

More than 50% of projects are red: they cannot sustain their maintainers above the poverty line. 31% of the projects are orange, consisting of developers willing to work for a salary that would be considered unacceptable in our industry. 12% are green, and only 3% are blue: Webpack and Vue.js. Income per GitHub star is important: sustainable projects generally have above $2/star. The median value, however, is $1.22/star. Team size is also important for sustainability: the smaller the team, the more likely it can sustain its maintainers.
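The “price” per star is just yearly revenue divided by GitHub stars, and the median is taken across projects. A minimal sketch with made-up (revenue, stars) pairs, not the article's data; the helper name `dollars_per_star` is hypothetical, and the $2/star cut-off is the rough rule of thumb from the text, not a hard rule.

```python
from statistics import median


def dollars_per_star(yearly_revenue: float, stars: int) -> float:
    """Yearly revenue (USD) divided by GitHub stars: the 'price' of one star."""
    return yearly_revenue / stars


# Hypothetical (yearly_revenue, stars) pairs; NOT the real data set.
projects = [(50_000, 20_000), (12_000, 10_000), (3_000, 5_000)]

ratios = [dollars_per_star(r, s) for r, s in projects]
print(ratios)          # [2.5, 1.2, 0.6]
print(median(ratios))  # 1.2

# Projects above roughly $2/star tend to be the sustainable ones.
above_two = [x for x in ratios if x > 2.0]
print(above_two)       # [2.5]
```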

The median donation per year is $217, which is substantial on an individual level, but in reality this figure includes sponsorship from companies that donate partly for their own marketing purposes.

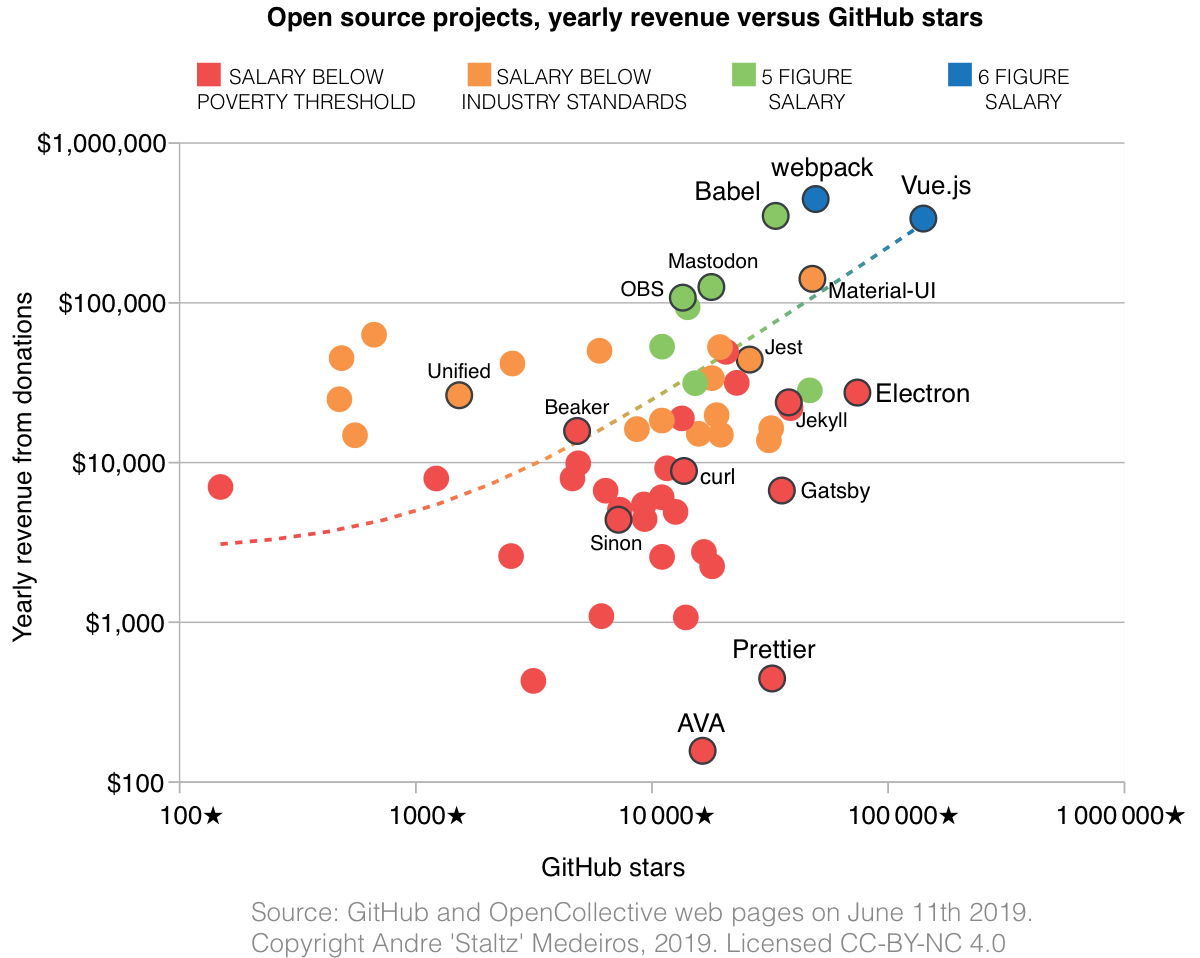

The next chart shows how revenue scales with popularity.

You can browse the data yourself by accessing this Dat archive with a LibreOffice Calc spreadsheet:

dat://bf7b912fff1e64a52b803444d871433c5946c990ae51f2044056bf6f9655ecbf

[…]

The total amount of money being put into open source is not enough for all the maintainers. If we add up the yearly revenue of all the projects in this data set, it comes to $2.5 million. The median salary is approximately $9k, which is below the poverty line. If we split that money evenly, each maintainer would get roughly $22k, which is still below industry standards.
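Why the median salary sits so far below the even split comes down to skew: an even split is the total divided by the number of maintainers (the mean), and a few well-funded projects pull the mean far above the typical maintainer. A toy illustration, assuming that reading of “split evenly”; the incomes below are invented to echo the $9k and $22k figures and are not taken from the real data.

```python
from statistics import median

# Toy per-maintainer yearly incomes (USD), invented for illustration only.
incomes = [1_000, 2_000, 9_000, 20_000, 78_000]

total = sum(incomes)
even_split = total / len(incomes)  # pool everything and share it equally (the mean)

print(total)            # 110000
print(median(incomes))  # 9000
print(even_split)       # 22000.0
```

Even perfect redistribution only moves everyone to the mean, which here is still below the $40k industry floor. That is the point of the next paragraph: the total is too small.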

The core problem is not that open source projects fail to share the money they receive. The problem is that, in absolute terms, open source is not getting enough money. $2.5 million is not enough. To put this number into perspective, startups typically raise much more than that.

Tidelift has received $40 million in funding to “help open source creators and maintainers get fairly compensated for their work” (quote). They have a team of 27 people, some of them ex-employees of large companies (such as Google and GitHub), who are probably not paid at the low end of the salary range. Yet many of the open source projects showcased on their website are below the poverty line in terms of income from donations.

[…]

GitHub was bought by Microsoft for $7.5 billion. To make that quantity easier to grok: the amount of money Microsoft paid to acquire GitHub – the company – is roughly 3000x what the open source community is getting yearly. In other words, if the open source community saved every penny it receives, it would take about three thousand years before it could perhaps afford to buy GitHub jointly.
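The comparison is a single division, which also gives the number of years of saving needed. A minimal worked check using the two rounded figures from the text; it ignores interest, growth in donations, and inflation.

```python
GITHUB_PRICE = 7_500_000_000    # USD, Microsoft's acquisition price for GitHub
YEARLY_OSS_REVENUE = 2_500_000  # USD, total yearly revenue of this data set

# How many times larger the acquisition price is than one year of revenue.
ratio = GITHUB_PRICE / YEARLY_OSS_REVENUE

# Saving the full yearly revenue every year, with no interest or growth,
# the number of years to reach the price equals that same ratio.
years_to_save = ratio

print(ratio)          # 3000.0
print(years_to_save)  # 3000.0
```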

[…]

If Microsoft GitHub is serious about helping fund open source, they should put their money where their mouth is: donate at least $1 billion to open source projects. Even a mere $1.5 million per year would be enough to turn all the projects in this study green. The Matching Fund in GitHub Sponsors is not enough: it gives a maintainer at most $5k a year, which is not sufficient to raise a maintainer from the poverty threshold up to the industry standard.
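An estimate like “$1.5 million per year would make every project green” is a sum of per-project shortfalls: for each project, the extra money needed so that every maintainer reaches the $40k floor, or zero if it is already there. A minimal sketch of that calculation, assuming an even split within each team; the function name `gap_to_green` and the project figures are hypothetical, not the article's data.

```python
GREEN_FLOOR = 40_000  # low end of industry-standard salaries (USD/year)


def gap_to_green(yearly_revenue: float, team_size: int) -> float:
    """Extra yearly funding needed so each maintainer gets at least $40k."""
    needed = GREEN_FLOOR * team_size
    return max(0.0, needed - yearly_revenue)


# Hypothetical (yearly_revenue, team_size) pairs; NOT the real data set.
projects = [(90_000, 2), (15_000, 1), (30_000, 3)]

total_gap = sum(gap_to_green(r, n) for r, n in projects)
print(total_gap)  # 115000.0
```

Projects already above the floor contribute nothing, so the total only counts the shortfall of under-funded projects. The same logic shows why a per-maintainer cap such as $5k a year cannot close a gap of tens of thousands.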

Source: André Staltz – Software below the poverty line

Unfortunately I’ve been talking about this for years now.

It’s time to make open source open but less free for the big users.
