[…] In 2024, Bluesky grew from 2.89M users to 25.94M users. In addition to users hosted on Bluesky’s infrastructure, there are over 4,000 users running their own infrastructure (Personal Data Servers), self-hosting their content, posts, and data.

To meet the demands caused by user growth, we’ve increased our moderation team to roughly 100 moderators and continue to hire more staff. Some moderators specialize in particular policy areas, such as dedicated agents for child safety.

[…]

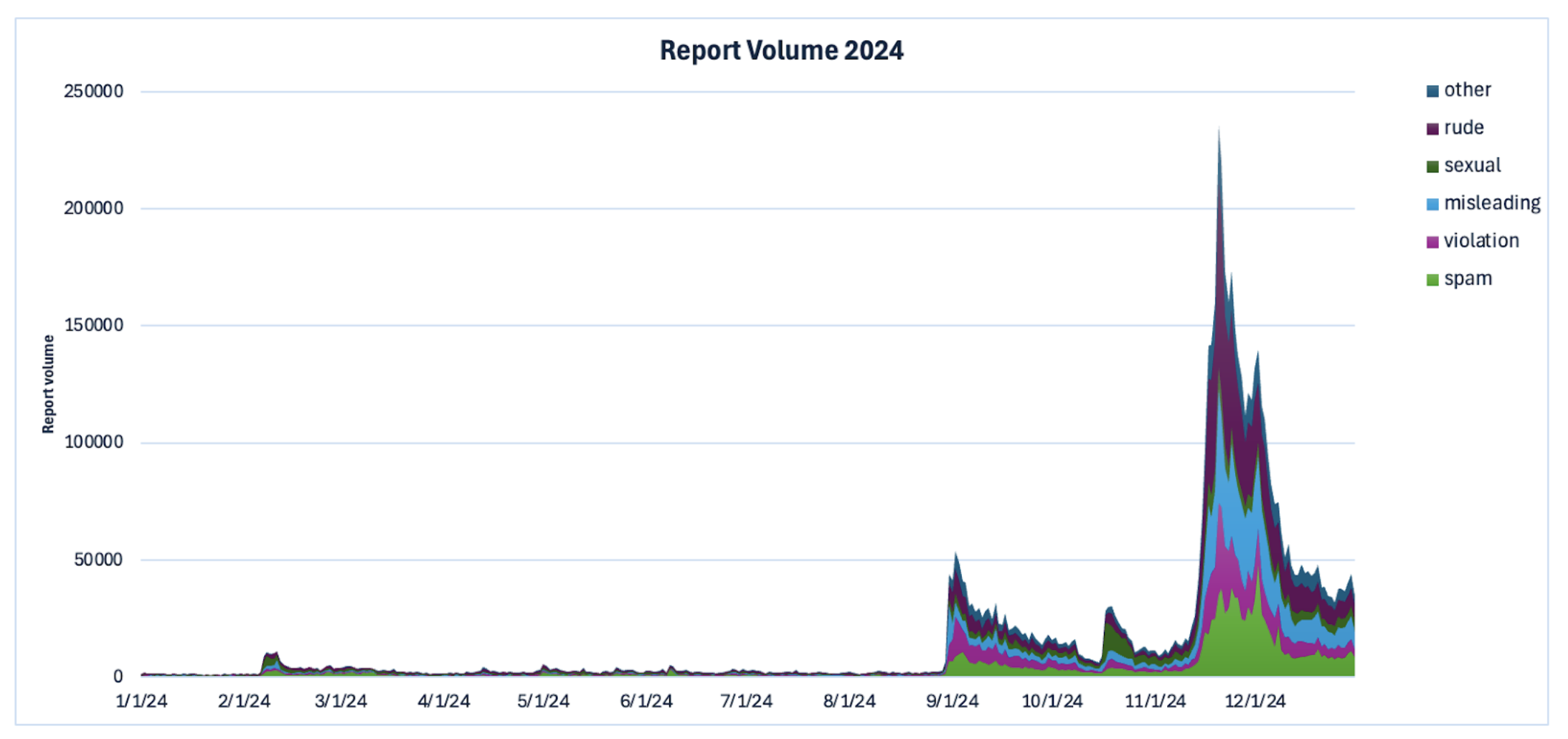

In 2024, users submitted 6.48M reports to Bluesky’s moderation service. That’s a 17x increase from the previous year — in 2023, users submitted 358K reports total. The volume of user reports increased with user growth and was non-linear, as the graph of report volume below shows:

Report volume in 2024

In late August, there was a large increase in user growth for Bluesky from Brazil, and we saw spikes of up to 50k reports per day. Prior to this, our moderation team handled most reports within 40 minutes. For the first time in 2024, we had a backlog of moderation reports. To address this, we increased the size of our Portuguese-language moderation team, added constant moderation sweeps and automated tooling for high-risk areas such as child safety, and hired moderators through an external contracting vendor for the first time.


We already had automated spam detection in place, and after this wave of growth in Brazil, we began investing in automating more categories of reports so that our moderation team would be able to review suspicious or problematic content rapidly. In December, we were able to review our first wave of automated reports for content categories like impersonation. This dropped processing time for high-certainty accounts to within seconds of receiving a report, though it also caused some false positives. We’re now exploring the expansion of this tooling to other policy areas. Even while instituting automation tooling to reduce our response time, human moderators are still kept in the loop — all appeals and false positives are reviewed by human moderators.
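The routing described above can be sketched as a small triage function. This is purely illustrative. The excerpt doesn't describe Bluesky's actual pipeline, so the `Report` fields, the per-report `confidence` score, the set of automated categories and the 0.95 threshold are all hypothetical. The sketch encodes only the two rules the text states:

- High-certainty reports in automated categories are actioned immediately.
- Appeals always go to a human moderator.

It also assumes that false positives surface through appeals.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_ACTION = "auto_action"  # high-certainty: actioned within seconds
    HUMAN_QUEUE = "human_queue"  # everything else waits for a moderator


@dataclass(frozen=True)
class Report:
    report_id: str
    category: str          # e.g. "impersonation" (hypothetical labels)
    confidence: float      # hypothetical classifier score in [0, 1]
    is_appeal: bool = False


# Hypothetical configuration: which categories are automated, and how sure
# the classifier must be before a report skips the human queue.
AUTOMATED_CATEGORIES = frozenset({"impersonation", "spam"})
AUTO_THRESHOLD = 0.95


def route(report: Report) -> Route:
    """Decide whether a report can be auto-actioned or needs a human."""
    if report.is_appeal:
        # Appeals (and thus, by assumption, false positives) always get human review.
        return Route.HUMAN_QUEUE
    if report.category in AUTOMATED_CATEGORIES and report.confidence >= AUTO_THRESHOLD:
        return Route.AUTO_ACTION
    return Route.HUMAN_QUEUE


if __name__ == "__main__":
    for r in (
        Report("r1", "impersonation", 0.99),
        Report("r2", "impersonation", 0.60),
        Report("r3", "impersonation", 0.99, is_appeal=True),
    ):
        print(r.report_id, route(r).value)
```

With these settings, only `r1` is auto-actioned. The low-confidence report and the appeal both land in the human queue. The threshold is the main trade-off: lowering it speeds up more reports but admits more of the false positives the text mentions.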

Some more statistics: the proportion of users submitting reports remained fairly stable from 2023 to 2024. In 2023, 5.6% of our active users¹ created one or more reports. In 2024, 1.19M users made one or more reports, approximately 4.57% of our user base.

In 2023, 3.4% of our active users received one or more reports. In 2024, 770K users received a report, comprising 2.97% of our user base.
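As a sanity check, the growth figures quoted so far can be recomputed in a few lines of Python. This sketch uses only numbers from the excerpt. It makes two assumptions that the excerpt doesn't state explicitly:

- 2.89M and 25.94M are the start- and end-of-2024 user counts.
- The 2024 reporting percentages were computed against the end-of-year count.

```python
# Figures as quoted in the report excerpt.
USERS_START_2024 = 2_890_000
USERS_END_2024 = 25_940_000
REPORTS_2023 = 358_000
REPORTS_2024 = 6_480_000


def growth_factor(before: float, after: float) -> float:
    """Ratio of the later value to the earlier one (after / before)."""
    return after / before


def relative_increase(before: float, after: float) -> float:
    """Increase expressed as a multiple of the earlier value ((after - before) / before)."""
    return (after - before) / before


def implied_population(count: float, share: float) -> float:
    """Population size implied by `count` being `share` (a fraction) of it."""
    return count / share


user_growth = growth_factor(USERS_START_2024, USERS_END_2024)
report_growth = growth_factor(REPORTS_2023, REPORTS_2024)
report_increase = relative_increase(REPORTS_2023, REPORTS_2024)
base_from_reporters = implied_population(1_190_000, 0.0457)  # users who made a report
base_from_reported = implied_population(770_000, 0.0297)     # users who received a report

print(f"user growth:           {user_growth:.1f}x")
print(f"report volume:         {report_growth:.1f}x ({report_increase:.1f}x increase)")
print(f"user base (reporters): {base_from_reporters / 1e6:.2f}M")
print(f"user base (reported):  {base_from_reported / 1e6:.2f}M")
```

Run as-is, it shows report volume growing about 18-fold, while the user count grew roughly 9-fold. An 18-fold total is an increase of about 17 times the 2023 volume, which matches the "17x increase" wording. Both percentage figures imply a user base of about 26M, in line with the 25.94M total.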

The majority of reports were of individual posts, with a total of 3.5M reports. This was followed by account profiles at 47K reports, typically for a violative profile picture or banner photo. Lists received 45K reports. DMs received 17.7K reports. Significantly lower are feeds at 5.3K reports, and starter packs with 1.9K reports.

Our users report content for a variety of reasons, and these reports help guide our focus areas. Below is a summary of the reports we received, categorized by the reasons users selected. The categories vary slightly depending on whether a report is about an account or a specific post, but here’s the full breakdown:

- Anti-social Behavior: Reports of harassment, trolling, or intolerance – 1.75M

- Misleading Content: Includes impersonation, misinformation, or false claims about identity or affiliations – 1.20M

- Spam: Excessive mentions, replies, or repetitive content – 1.40M

- Unwanted Sexual Content: Nudity or adult content not properly labeled – 630K

- Illegal or Urgent Issues: Clear violations of the law or our terms of service – 933K

- Other: Issues that don’t fit into the above categories – 726K
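The category counts are easier to compare as shares. This sketch uses only the figures listed and orders the reasons by their share of the listed reports. It also shows that the six counts add up to 6,639,000, which is 159K more than the 6.48M headline total. The excerpt doesn't explain the gap. It may reflect the account-versus-post category differences mentioned above, but that is an assumption.

```python
# Report counts per user-selected reason, as listed above.
REPORTS_BY_REASON = {
    "Anti-social Behavior": 1_750_000,
    "Misleading Content": 1_200_000,
    "Spam": 1_400_000,
    "Unwanted Sexual Content": 630_000,
    "Illegal or Urgent Issues": 933_000,
    "Other": 726_000,
}
TOTAL_REPORTS_2024 = 6_480_000  # headline figure quoted earlier

listed_total = sum(REPORTS_BY_REASON.values())

# Share of each reason among the listed reports, largest first.
shares = sorted(
    ((reason, count / listed_total) for reason, count in REPORTS_BY_REASON.items()),
    key=lambda item: item[1],
    reverse=True,
)

for reason, share in shares:
    print(f"{reason:<26}{share:6.1%}")
print(f"listed total: {listed_total:,} vs. headline total: {TOTAL_REPORTS_2024:,}")
```

Anti-social behavior comes out on top at roughly a quarter of the listed reports, followed by spam at about a fifth.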

[…]

The top human-applied labels were:

- Sexual-figurative³ – 55,422

- Rude – 22,412

- Spam – 13,201

- Intolerant – 11,341

- Threat – 3,046

Appeals

In 2024, 93,076 users submitted at least one appeal in the app, for a total of 205K individual appeals. In most cases, users appealed because they disagreed with a label verdict.

[…]

Legal Requests

In 2024, we received 238 requests from law enforcement, governments, and law firms; we responded to 182 and complied with 146. The majority of requests came from German, U.S., Brazilian, and Japanese law enforcement.
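The three request counts are easier to read as rates. This short sketch computes them from the quoted figures. It assumes that every request Bluesky complied with is also among those it responded to, which the excerpt implies but doesn't state.

```python
# Legal-request counts as quoted above.
RECEIVED = 238
RESPONDED = 182
COMPLIED = 146  # assumed to be a subset of RESPONDED


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


response_rate = pct(RESPONDED, RECEIVED)           # share of requests that got a response
compliance_of_received = pct(COMPLIED, RECEIVED)   # share of all requests complied with
compliance_of_responded = pct(COMPLIED, RESPONDED) # share of answered requests complied with

print(response_rate, compliance_of_received, compliance_of_responded)
```

By this reckoning, about three quarters of requests received a response. Roughly 61% of all requests, and about 80% of the answered ones, were complied with.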

[…]

Copyright / Trademark

In 2024, we received a total of 937 copyright and trademark cases. There were four confirmed copyright cases in the entire first half of 2024, and this number increased to 160 in September. The vast majority of cases occurred between September and December.

[…]

Source: Bluesky 2024 Moderation Report – Bluesky

The following lines are especially interesting. They suggest that the Brazilian users who arrived in late August were particularly active reporters, and that some of them may have used reports to brigade specific users.

In late August, there was a large increase in user growth for Bluesky from Brazil, and we saw spikes of up to 50k reports per day.

In 2023, 5.6% of our active users¹ created one or more reports. In 2024, 1.19M users made one or more reports, approximately 4.57% of our user base.

In 2023, 3.4% of our active users received one or more reports. In 2024, 770K users received a report, comprising 2.97% of our user base.
