Boring 2D images can be transformed into corresponding 3D models and back into 2D again automatically by machine-learning-based software, boffins have demonstrated.

The code is known as a differentiable interpolation-based renderer (DIB-R), and was built by a group of eggheads led by Nvidia. It uses a trained neural network to take a flat image of an object as input, work out how it is shaped, colored and lit in 3D, and output a 2D rendering of that model.

This research could be useful in future for teaching robots and other computer systems how to work out how stuff is shaped and lit in real life from 2D still pictures or video frames, and how things appear to change depending on your view and lighting. That means future AI could perform better, particularly in terms of depth perception, in scenarios in which the lighting and positioning of things are wildly different from what’s expected.

Jun Gao, a graduate student at the University of Toronto in Canada and a part-time researcher at Nvidia, said: “This is essentially the first time ever that you can take just about any 2D image and predict relevant 3D properties.”

During inference, the pixels in each studied photograph are separated into two groups: foreground and background. The rough shape of the object is discerned from the foreground pixels to create a mesh of vertices.
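To give a feel for the "interpolation-based" part of the name, here is a minimal sketch of how a renderer can decide whether a pixel is foreground and, if so, blend a color from the three vertices of the mesh triangle covering it. It uses standard barycentric interpolation. The function names and the single-triangle setup are our own illustration, not code from DIB-R.

```python
def barycentric_weights(p, a, b, c):
    """Return weights (wa, wb, wc) of 2D point p relative to triangle a, b, c."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    denom = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if denom == 0:
        raise ValueError("degenerate triangle")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / denom
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / denom
    return wa, wb, 1.0 - wa - wb


def shade_pixel(p, triangle, vertex_colors, background=(0.0, 0.0, 0.0)):
    """Return (color, is_foreground) for pixel p.

    Hypothetical helper: a pixel inside the triangle is treated as foreground
    and gets a weighted blend of the vertex colors; anything else gets the
    background color.
    """
    weights = barycentric_weights(p, *triangle)
    if min(weights) < 0:  # a negative weight means p lies outside the triangle
        return background, False
    color = tuple(
        sum(w * col[ch] for w, col in zip(weights, vertex_colors))
        for ch in range(3)
    )
    return color, True


triangle = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
colors = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
inside = shade_pixel((1.0, 1.0), triangle, colors)
outside = shade_pixel((5.0, 5.0), triangle, colors)
```

Because the blended color is a smooth function of the vertex positions and colors, small changes to the mesh produce small changes in the pixel, which is the property a differentiable renderer needs.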

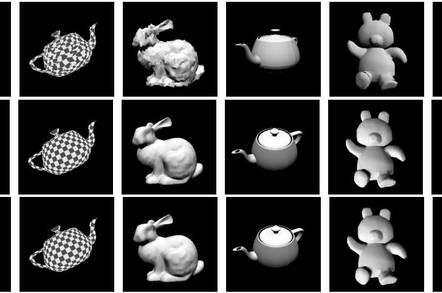

Next, a trained convolutional neural network (CNN) predicts the 3D position and lighting of each vertex in the mesh to form a 3D object model. This model is then rendered as a full-color 2D image using a suitable shader. This allows the boffins to compare the original 2D image with the rendered one to see how well the neural network understood the lighting and shape of the thing.
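The comparison step can be pictured with two simple scores: how far apart the pixel colors are, and how well the object outlines overlap. The sketch below is an assumption on our part, as the exact measures used for DIB-R aren't given here. Images are plain nested lists of RGB tuples and masks are nested lists of 0s and 1s.

```python
def mean_abs_error(image_a, image_b):
    """Average per-channel absolute difference between two same-sized RGB images."""
    total, count = 0.0, 0
    for row_a, row_b in zip(image_a, image_b):
        for px_a, px_b in zip(row_a, row_b):
            for ch_a, ch_b in zip(px_a, px_b):
                total += abs(ch_a - ch_b)
                count += 1
    return total / count


def silhouette_iou(mask_a, mask_b):
    """Intersection-over-union of two same-sized binary foreground masks."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    # Two empty masks are treated as a perfect match (our convention).
    return inter / union if union else 1.0


original = [[(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
rendered = [[(0.5, 0.0, 0.0), (0.0, 0.0, 0.0)]]
color_error = mean_abs_error(original, rendered)
overlap = silhouette_iou([[1, 1], [0, 0]], [[1, 0], [0, 0]])
```

A lower color error and a higher overlap both indicate that the rendering is closer to the input picture.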

During the training process, the CNN was shown stuff in 13 categories in the ShapeNet dataset. Each 3D model was rendered as 2D images viewed from 24 different angles to create a set of training images: these images were used to show the network how 2D images relate to 3D models.
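As a rough illustration of the 24-view setup, the sketch below places 24 virtual cameras around an object. How the angles were actually chosen isn't stated, so the even spacing, the fixed elevation and the `radius` value are all assumptions for the sake of the example.

```python
import math


def camera_ring(num_views=24, radius=2.0, elevation_deg=30.0):
    """Return camera positions (x, y, z) on a ring around the origin.

    Assumptions (not from the DIB-R work): evenly spaced azimuths, one fixed
    elevation, y is up, and every camera looks at the origin.
    """
    elev = math.radians(elevation_deg)
    cameras = []
    for i in range(num_views):
        azimuth = 2.0 * math.pi * i / num_views
        x = radius * math.cos(elev) * math.cos(azimuth)
        y = radius * math.sin(elev)
        z = radius * math.cos(elev) * math.sin(azimuth)
        cameras.append((x, y, z))
    return cameras


cameras = camera_ring()
```

Rendering the same 3D model once per camera would yield one training image per viewpoint.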

Crucially, the CNN was schooled using an adversarial framework, in which the DIB-R outputs were passed through a discriminator network for analysis.

If a rendered object was similar enough to an input object, then DIB-R’s output passed the discriminator. If not, the output was rejected and the CNN had to generate ever more similar versions until one was accepted by the discriminator. Over time, the CNN learned to output realistic renderings. Further training is required to generate shapes outside of the training data, we note.
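In a typical adversarial setup, "passing" isn't a hard yes or no: the discriminator emits a probability that its input is real, and both networks are nudged by loss values computed from it. The sketch below shows the textbook GAN losses as an assumption. The exact objective used to train DIB-R isn't given here and may well differ.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Textbook GAN discriminator loss.

    d_real: discriminator's probability that a real image is real.
    d_fake: discriminator's probability that a generated image is real.
    """
    return -(math.log(d_real + eps) + math.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Textbook (non-saturating) generator loss: low when the fake fools the discriminator."""
    return -math.log(d_fake + eps)


# The generator is rewarded as its output looks more convincing.
fooled = generator_loss(0.9)
caught = generator_loss(0.1)
```

Here `fooled` comes out smaller than `caught`, so training pushes the generator toward outputs the discriminator rates as real.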

As we mentioned above, DIB-R could help robots better detect their environments. Nvidia’s Lauren Finkle said: “For an autonomous robot to interact safely and efficiently with its environment, it must be able to sense and understand its surroundings. DIB-R could potentially improve those depth perception capabilities.”

The research will be presented at the Conference on Neural Information Processing Systems in Vancouver, Canada, this week.

Robin Edgar
