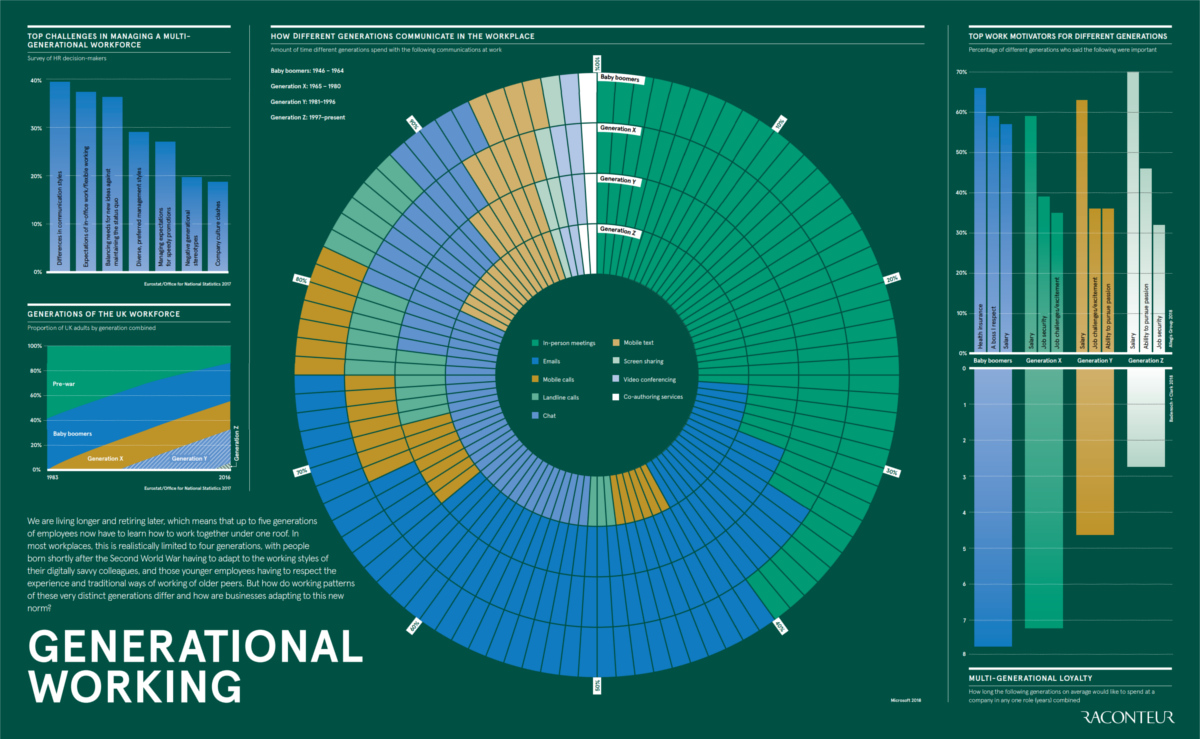

How Different Generations Approach Work


The first representatives of Generation Z have started to trickle into the workplace – and like generations before them, they are bringing a different perspective to things.

Did you know that up to five generations are now working under any given roof, ranging all the way from the Silent Generation (born pre-WWII) to the aforementioned Gen Z?

Let’s see how these generational groups differ in their approaches to communication, career priorities, and company loyalty.

Generational Differences at Work

Today’s infographic comes to us from Raconteur, and it breaks down some key differences in how generational groups are thinking about the workplace.

Let’s dive deeper into the data for each category.

Communication

How people prefer to communicate is one of the most obvious differences between generations.

While many in older generations have dabbled in new technologies and trends around communications, it’s less likely that they will internalize those methods as habits. Meanwhile, for younger folks, these newer methods (chat, texting, etc.) are what they grew up with.

Top three communication methods by generation:

- Baby Boomers: 40% of communication is in person, 35% by email, and 13% by phone

- Gen X: 34% of communication is in person, 34% by email, and 13% by phone

- Millennials: 33% of communication is by email, 31% is in person, and 12% by chat

- Gen Z: 31% of communication is by chat, 26% is in person, and 16% by email

Motivators

Meanwhile, the generations are divided on what motivates them in the workplace. Boomers rank health insurance as an important decision factor, while younger groups view salary and pursuing a passion as key elements of a successful career.

Three most important work motivators by generation (in order):

- Baby Boomers: Health insurance, a boss worthy of respect, and salary

- Gen X: Salary, job security, and job challenges/excitement

- Millennials: Salary, job challenges/excitement, and ability to pursue passion

- Gen Z: Salary, ability to pursue passion, and job security

Loyalty

Finally, generational groups have varying perspectives on how long they would be willing to stay in any one role.

- Baby Boomers: 8 years

- Gen X: 7 years

- Millennials: 5 years

- Gen Z: 3 years

Given the above differences, employers will have to think clearly about how to attract and retain talent across a wide scope of generations. Further, employers will have to learn what motivates each group, as well as what makes them each feel the most comfortable in the workplace.

Source: Infographic: How Different Generations Approach Work