One night in 2023, Eric was scrolling on a social media channel he regularly browsed for porn. Seconds into a video, he froze.

He realised the couple he was watching – entering the room, setting down their bags, and later, having sex – was himself and his girlfriend. Three weeks earlier, they had spent the night in a hotel in Shenzhen, southern China, unaware that they were not alone.

Their most intimate moments had been captured by a camera hidden in their hotel room, and the footage made available to thousands of strangers who had logged in to the channel Eric himself used to access pornography.

Eric (not his real name) was no longer just a consumer of China’s spy-cam porn industry, but a victim.

Warning: This story contains some offensive language

So-called spy-cam porn has existed in China for at least a decade, despite the fact that producing and distributing porn is illegal in the country.

[…]

Much of the material is advertised on the messaging and social media app Telegram. Over 18 months, I discovered six different websites and apps promoted on Telegram. Between them, these claimed to operate more than 180 hotel-room spy-cams, which were not just capturing but livestreaming hotel guests’ activities.

I monitored one of these websites regularly for seven months and found content captured by 54 different cameras, with about half operational at any one time.

That means thousands of guests could have been filmed over that period, the BBC estimates, based on typical occupancy rates. Most are unlikely to know they have been captured on camera.

Eric, from Hong Kong, began watching secretly filmed videos as a teenager, attracted by how “raw” the footage was.

“What drew me in is the fact that the people don’t know they’re being filmed,” says Eric, now in his 30s. “I think traditional porn feels very staged, very fake.”

But he experienced what it feels like to be at the opposite end of the supply chain when he found the video of himself and his girlfriend “Emily” – and he no longer finds gratification in this content.

[…]

Blue Li, from a Hong Kong-based NGO called RainLily – which helps victims remove explicit secretly-filmed footage from the internet – says demand is rising for her group’s services, but the task is proving more difficult.

Telegram never responds to RainLily’s requests for removal, she says, forcing them to contact group administrators – the very people selling or sharing spy-cam pornography – who have little incentive to respond.

“We believe tech companies share the huge responsibility in addressing these problems. Because these companies are not neutral platforms; their policies shape how the content would be spread,” Li says.

The BBC itself told Telegram, via its report function, that AKA and Brother Chun – and the groups they managed – were sharing spy-cam porn via its platforms, but it did not respond or take any action.

[…]

We formally put our findings to Brother Chun and AKA, namely that they were profiting from exploiting unsuspecting hotel guests. They did not reply, but hours later the Telegram accounts they used to advertise the content appeared to have been deleted. However, the website that AKA sold me access to is still livestreaming hotel guests.

[…]

Source: We had sex in a Chinese hotel, then found we had been broadcast to thousands

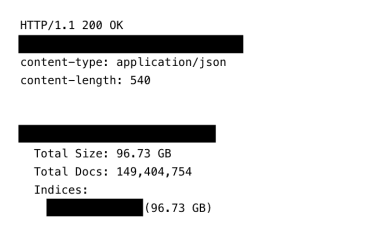

This screenshot shows the total count of records and size of the exposed infostealer database.
