We’re releasing Universe, a software platform for measuring and training an AI’s general intelligence across the world’s supply of games, websites and other applications.

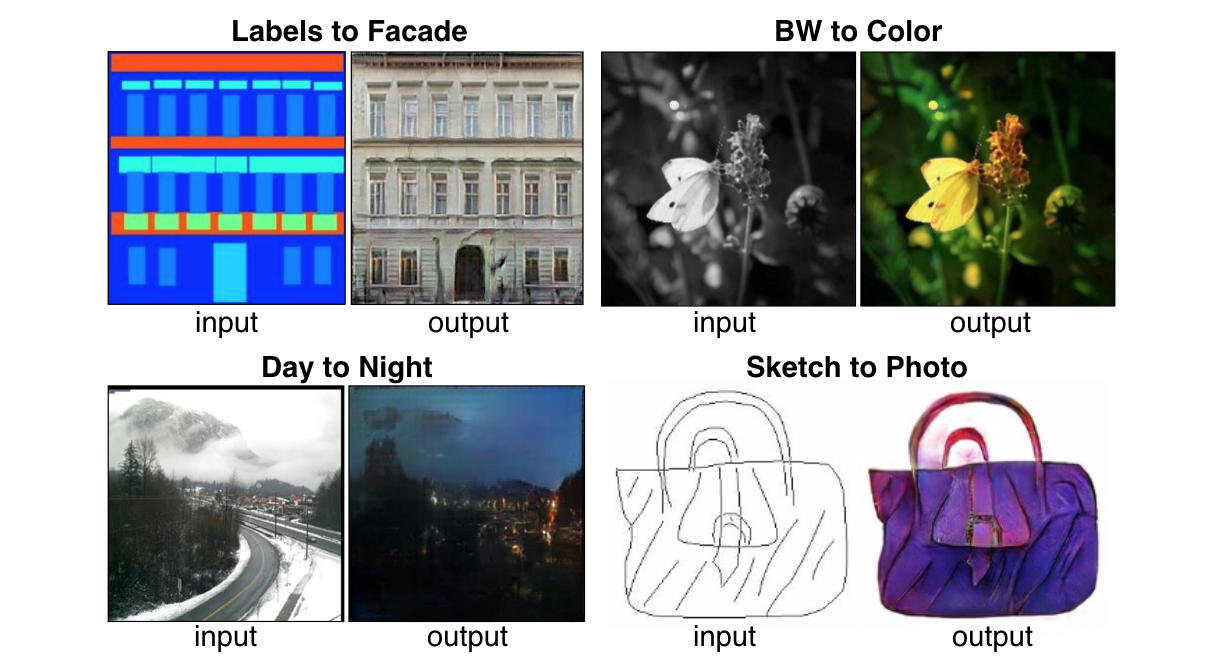

Universe allows an AI agent to use a computer like a human does: by looking at screen pixels and operating a virtual keyboard and mouse. We must train AI systems on the full range of tasks we expect them to solve, and Universe lets us train a single agent on any task a human can complete with a computer.

In April, we launched Gym, a toolkit for developing and comparing reinforcement learning (RL) algorithms. With Universe, any program can be turned into a Gym environment. Universe works by automatically launching the program behind a VNC remote desktop — it doesn’t need special access to program internals, source code, or bot APIs.
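The pixels-in, input-events-out interaction described above can be sketched without Universe installed. In this minimal sketch, the agent receives one frame of pixels and replies with a list of keyboard and pointer events. The tuple layout imitates the style of events shown in the Universe README (for example `('KeyEvent', 'ArrowUp', True)`), but `brightest_pixel`, `choose_events`, and the nested-list frame format are hypothetical names and assumptions made up for this illustration, not Universe's API.

```python
def brightest_pixel(frame):
    """Return (x, y) of the brightest pixel in a grayscale frame (a list of rows)."""
    best_x, best_y, best_value = 0, 0, -1
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > best_value:
                best_x, best_y, best_value = x, y, value
    return best_x, best_y


def choose_events(frame):
    """Hypothetical policy: hold the up arrow and click the brightest pixel."""
    x, y = brightest_pixel(frame)
    return [
        ("KeyEvent", "ArrowUp", True),  # key name, then True for "pressed"
        ("PointerEvent", x, y, 1),      # pointer position, then button mask (1 = left button)
    ]


# A tiny stand-in for a screen capture: 2 rows by 3 columns of grayscale values.
frame = [
    [0, 10, 0],
    [0, 0, 200],
]
print(choose_events(frame))
```

The point of the sketch is that the policy never sees program internals: its only input is the frame, and its only output is the kind of event a person could produce with a keyboard and mouse.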

Source: Universe

The Universe homepage

The Universe Git repo

Universe uses OpenAI Gym for reinforcement learning

Reinforcement learning (RL) is the subfield of machine learning concerned with decision making and motor control. It studies how an agent can learn how to achieve goals in a complex, uncertain environment. It’s exciting for two reasons:

RL is very general, encompassing all problems that involve making a sequence of decisions: for example, controlling a robot’s motors so that it’s able to run and jump, making business decisions like pricing and inventory management, or playing video games and board games. RL can even be applied to supervised learning problems with sequential or structured outputs.

RL algorithms have started to achieve good results in many difficult environments. RL has a long history, but until recent advances in deep learning, it required lots of problem-specific engineering. DeepMind’s Atari results, BRETT from Pieter Abbeel’s group, and AlphaGo all used deep RL algorithms which did not make too many assumptions about their environment, and thus can be applied in other settings.

However, RL research is also slowed down by two factors:

The need for better benchmarks. In supervised learning, progress has been driven by large labeled datasets like ImageNet. In RL, the closest equivalent would be a large and diverse collection of environments. However, the existing open-source collections of RL environments don’t have enough variety, and they are often difficult to even set up and use.

Lack of standardization of environments used in publications. Subtle differences in the problem definition, such as the reward function or the set of actions, can drastically alter a task’s difficulty. This issue makes it difficult to reproduce published research and compare results from different papers.

OpenAI Gym is an attempt to fix both problems.
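To make the idea of a standardized environment concrete, here is a minimal sketch of the agent-environment loop in the reset/step style associated with Gym. `CorridorEnv`, `run_episode`, and the two policies are hypothetical names invented for this illustration; the toy class only imitates the shape of the interface and does not use the gym package.

```python
import random


class CorridorEnv:
    """Toy environment (hypothetical): walk right along a corridor to reach the goal."""

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self):
        """Start a new episode and return the initial observation."""
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        """Apply an action (0 = left, 1 = right); return (observation, reward, done, info)."""
        if action not in (0, 1):
            raise ValueError("action must be 0 or 1")
        move = 1 if action == 1 else -1
        self.position = max(0, self.position + move)  # the left wall is at position 0
        self.steps += 1
        reached_goal = self.position >= self.length
        reward = 1.0 if reached_goal else 0.0
        done = reached_goal or self.steps >= self.max_steps
        return self.position, reward, done, {}


def run_episode(env, policy):
    """Run one episode with the given policy and return the total reward."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)
        observation, reward, done, _info = env.step(action)
        total_reward += reward
    return total_reward


def always_right(observation):
    return 1


def random_policy(observation):
    return random.choice([0, 1])


print(run_episode(CorridorEnv(), always_right))
print(run_episode(CorridorEnv(), random_policy))
```

Because the reward function and the action set live inside the environment class rather than in each experiment script, both policies face exactly the same task, which is the kind of comparability the two problems above call for.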

Source: OpenAI Gym

The Gym homepage

The Gym GitHub page