Well, I will just repeat what I said about California (A new California law says all operating systems, including Linux, need to have some form of age verification at account setup). NB: people on this LinkedIn post were not too happy about that either:

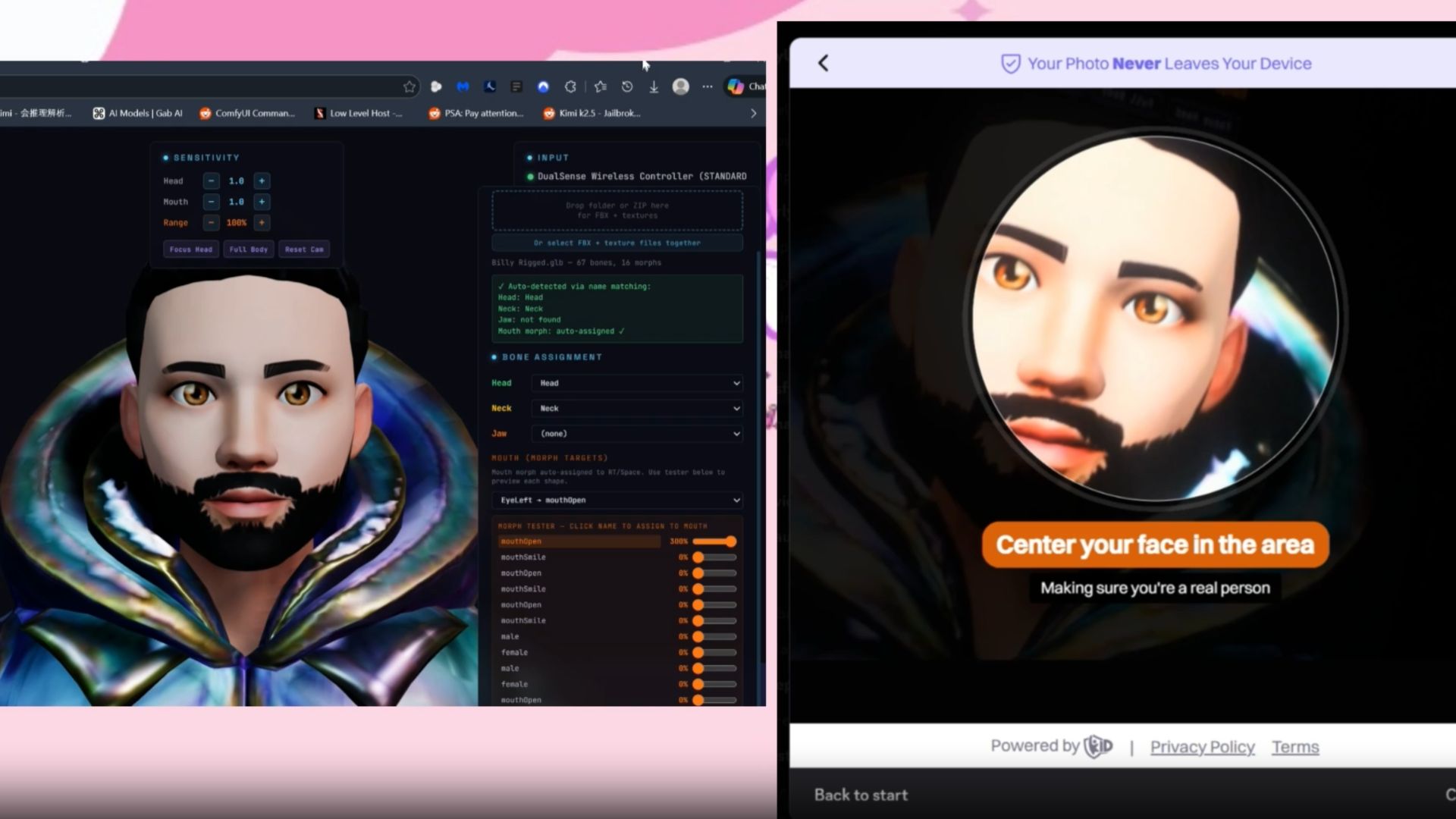

Here we see the creeping sliding scale that is the terror of Age Verification. First of all, an OS does not need an age check – it’s like saying any technology needs age verification: all gadgets use an OS, whether it is your washing machine, your smart light switch, your PSP or your PC. Second of all, an OS has no business being forced online – operating systems are supposed to be non-cloud, personal and non-connected unless you want them to connect. Age verification requires external suppliers, so you would need to connect to perform the age verification – and send who knows what kind of other personal data. E.g. Windows sends hardware data (along with a whole load of other data) that works much like a fingerprint. This makes it easy to track a user’s movements online. This is one reason why people want to bypass the online account creation on Windows and use local accounts.

For more on the horrors of age verification, see https://www.linkielist.com/?s=age+verification&submit=Search

As more US states consider online age-verification requirements, two Colorado lawmakers want to implement the age checks at the operating-system level, after California enacted a similar law.

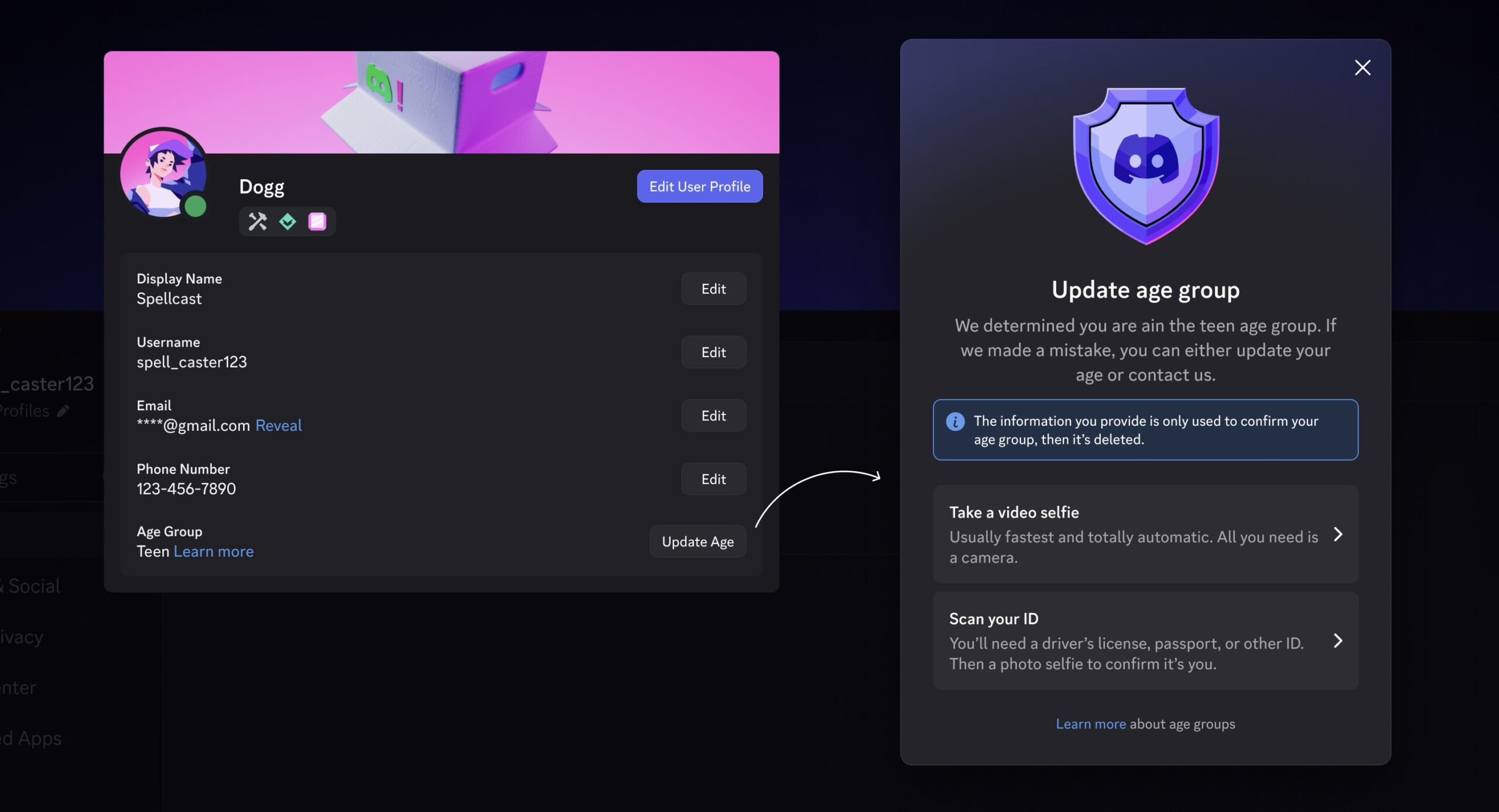

Colorado’s SB26-051, introduced last month, would require operating systems to register the owner’s age, which third-party apps can then leverage to determine if the user is an adult. The bill calls for the device owner to register their birthdate or age, but only for the purpose of creating an “age bracket,” which can then be shared with an app developer through an API so the app learns the user’s age range, according to BiometricUpdate.com.
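To make the mechanism concrete, here is a rough sketch of what such an OS-level age-bracket signal could look like. Everything in it is an assumption for illustration: the bracket names and cut-offs, the `bracket_for` helper and the `age_signal_for_app` function are hypothetical and are not taken from the bill text or from any real operating system API.

```python
from datetime import date
from enum import Enum


class AgeBracket(Enum):
    # Hypothetical brackets; the cut-offs are illustrative assumptions.
    UNDER_13 = "under_13"
    AGE_13_TO_15 = "13_to_15"
    AGE_16_TO_17 = "16_to_17"
    ADULT = "18_plus"


def age_on(birthdate: date, today: date) -> int:
    """Whole years between birthdate and today."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def bracket_for(birthdate: date, today: date) -> AgeBracket:
    """Map a self-declared birthdate to a coarse age bracket."""
    age = age_on(birthdate, today)
    if age < 13:
        return AgeBracket.UNDER_13
    if age < 16:
        return AgeBracket.AGE_13_TO_15
    if age < 18:
        return AgeBracket.AGE_16_TO_17
    return AgeBracket.ADULT


def age_signal_for_app(birthdate: date, today: date) -> dict:
    """Hypothetical API response: the app sees the bracket, never the birthdate."""
    return {"age_bracket": bracket_for(birthdate, today).value}


if __name__ == "__main__":
    # The birthdate is whatever the device owner typed in at setup.
    print(age_signal_for_app(date(2010, 6, 15), date(2026, 6, 14)))
```

Note that the only input is whatever birthdate the device owner entered at account setup; nothing in this sketch verifies it.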

The bill comes from state Sen. Matt Ball and Rep. Amy Paschal, both Democrats. “The intent is to create thoughtful safeguards for kids online through a privacy-forward framework for age assurance,” Ball told PCMag. “Unlike some laws in other states, SB 51 doesn’t require users to share personally identifiable information or use facial recognition technology.”

Ball also said the legislation was based on California’s bill AB 1043, which was passed last year. It too requires OS makers to create a way for the device owner to register their age bracket, which can then be shared to app developers over an API. The California law starts to take effect January 1, 2027.

Ball added: “SB 26-51 is very closely modeled on it. One of the reasons for bringing SB 51 was that the tech and software industry is already complying with AB 1043, so there’s minimal added burden.”

Note here: they are not. Several Linux distributions have in fact already changed their ToU to prohibit the software from being used in California.

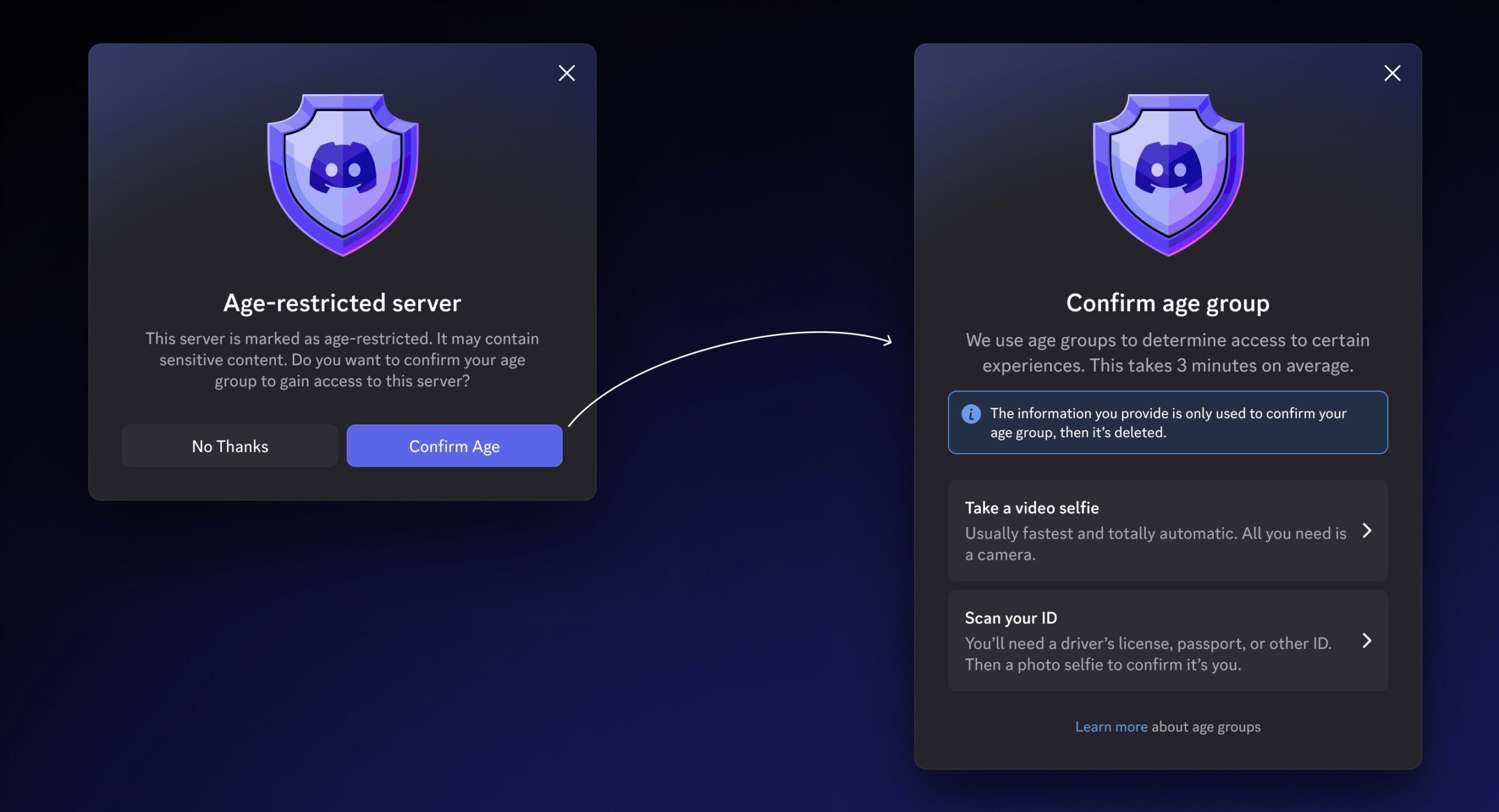

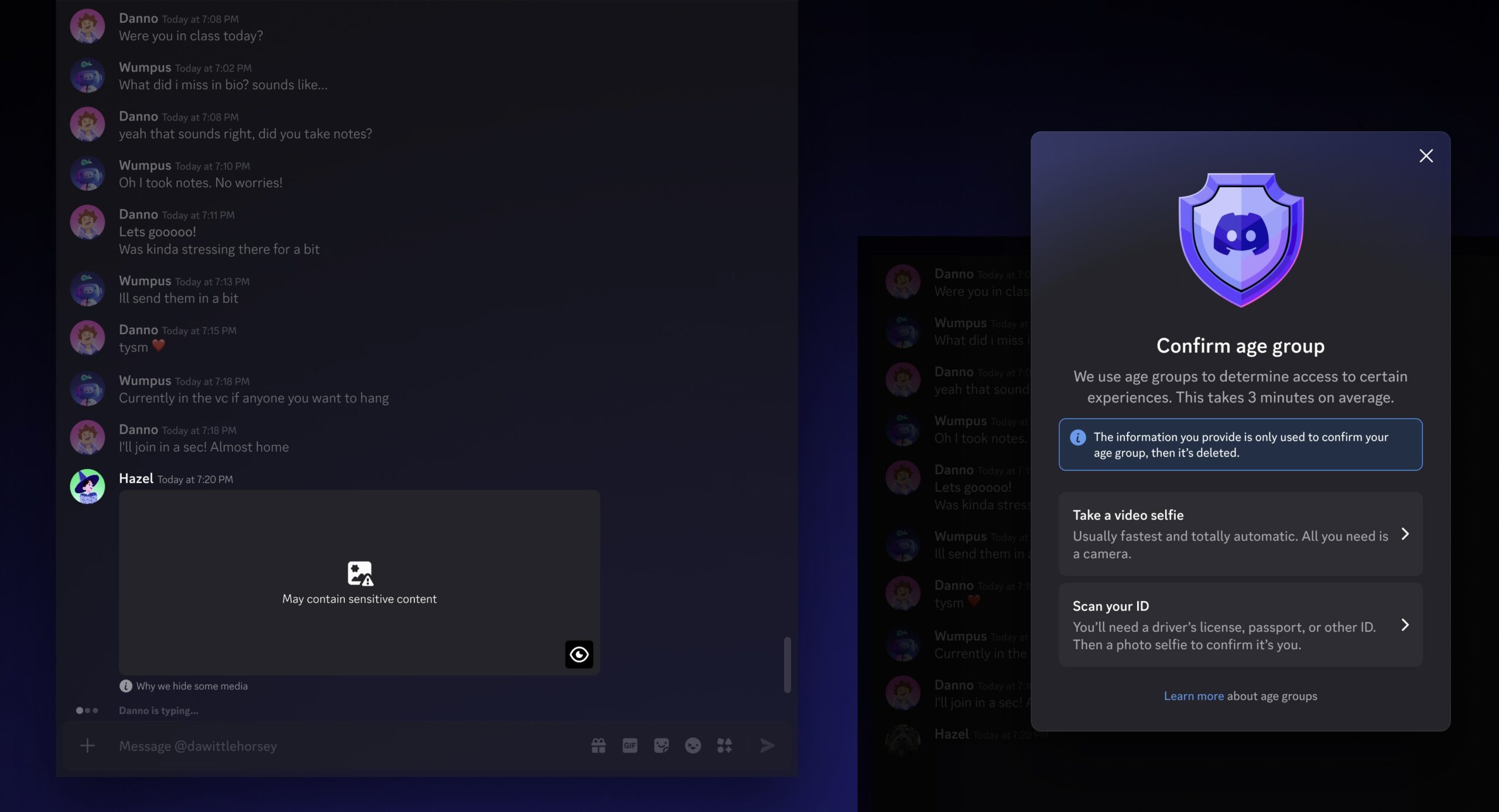

The legislation also promises to centralize the age check through the OS, rather than mandating that each app enforce its own age-verification mechanism, which can involve scanning the user’s official ID, thus raising privacy and security concerns. The bill also forbids the sharing of the age-bracket data for any other purpose.

But it looks like it’s easy to bypass the age check proposed by SB26-051. The legislation itself doesn’t mention any state ID check to verify the owner’s age. In addition, the bill doesn’t seem to cover websites, only apps and app stores.

Source: Colorado Lawmakers Push for Age Verification at the Operating System Level | PCMag