When the nonprofit Freedom House recently published its annual report, it noted that 2025 marked the 15th straight year of decline for global internet freedom. The biggest decline, after Georgia and Germany, came within the United States.

Among the culprits cited in the report: age verification laws, dozens of which have come into effect over the last year. “Online anonymity, an essential enabler for freedom of expression, is entering a period of crisis as policymakers in free and autocratic countries alike mandate the use of identity verification technology for certain websites or platforms, motivated in some cases by the legitimate aim of protecting children,” the report warns.

Age verification laws are, in some ways, part of a years-long reckoning over child safety online, as tech companies have shown themselves unable to prevent serious harms to their most vulnerable users. Lawmakers, who have failed to pass data privacy regulations, Section 230 reform or any other meaningful legislation that would thoughtfully reimagine what responsibilities tech companies owe their users, have instead turned to the blunt tool of age-based restrictions — and with much greater success.

Over the last two years, 25 states have passed laws requiring some kind of age verification to access adult content online. This year, the Supreme Court delivered a major victory to backers of age verification standards when it upheld a Texas law requiring sites hosting adult content to check the ages of their users.

Age checks have also expanded to social media and online platforms more broadly. Sixteen states now have laws requiring parental controls or other age-based restrictions for social media services. (Six of these measures are currently in limbo due to court challenges.) A federal bill to ban kids younger than 13 from social media has gained bipartisan support in Congress. Utah, Texas and Louisiana passed laws requiring app stores to check the ages of their users, all of which are set to go into effect next year. California plans to enact age-based rules for app stores in 2027.

These laws have started to fragment the internet. Smaller platforms and websites that don’t have the resources to pay for third-party verification services may have no choice but to exit markets where age checks are required. Blogging service Dreamwidth pulled out of Mississippi after the state’s age verification laws went into effect, saying that the $10,000-per-user fines it could face were an “existential threat” to the company. Bluesky also opted to go dark in Mississippi rather than comply. (The service has complied with age verification laws in South Dakota and Wyoming, as well as the UK.) Pornhub, which has called existing age verification laws “haphazard and dangerous,” has blocked access in 23 states.

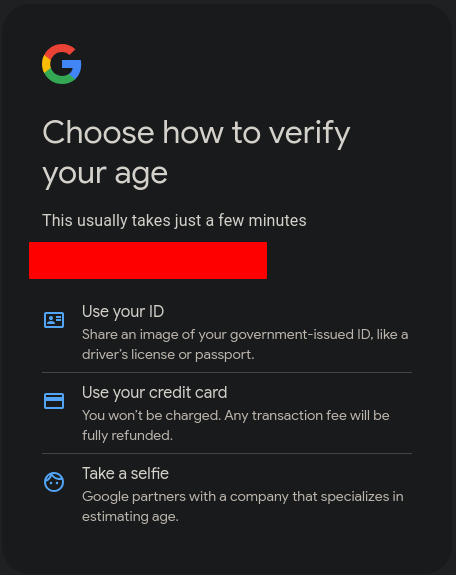

Pornhub is not an outlier in its assessment. Privacy advocates have long warned that age verification laws put everyone’s privacy at risk. There’s no practical way to limit age verification to minors: confirming the ages of everyone under 18 means confirming the ages of everyone. This often means submitting a government-issued ID or allowing an app to scan your face. Both are problematic, and we don’t need to look far to see how these methods can go wrong.

Discord recently revealed that around 70,000 users “may” have had their government IDs leaked due to an “incident” involving a third-party vendor the company contracts with to provide customer service related to age verification. Last year, another third-party identity provider that had worked with TikTok, Uber and other services exposed driver’s licenses. As a growing number of platforms require us to hand over an ID, these kinds of incidents will likely become even more common.

Similar risks exist for face scans. Because most minors don’t have official IDs, platforms often rely on AI-based tools that can guess users’ ages. A face scan may seem more private than handing over a Social Security number, but we could be turning over far more information than we realize, according to experts at the Electronic Frontier Foundation (EFF).

“When we submit to a face scan to estimate our age, a less scrupulous company could flip a switch and use the same face scan, plus a slightly different algorithm, to guess our name or other demographics,” the organization notes. “A poorly designed system might store this personal data, and even correlate it to the online content that we look at. In the hands of an adversary, and cross-referenced to other readily available information, this information can expose intimate details about us.”

These issues aren’t limited to the United States. Australia, Denmark and Malaysia have taken steps to ban younger teens from social media entirely. Officials in France are pushing for a similar ban, as well as a “curfew” for older teens. These measures would also necessitate some form of age verification in order to block the intended users. In the UK, where the Online Safety Act went into effect earlier this year, we’ve already seen how well-intentioned efforts to protect teens from supposedly harmful content can end up making large swaths of the internet more difficult to access.

The law is ostensibly meant to “prevent young people from encountering harmful content relating to suicide, self-harm, eating disorders and pornography,” according to the BBC. But the law has also resulted in age checks that reach far beyond porn sites. Age verification is required to access music on Spotify. It will soon be required for Xbox accounts. On X, videos of protests have been blocked. Redditors have reported being blocked from a long list of subreddits that are marked NSFW but don’t actually host porn, including those related to menstruation, news and addiction recovery. Wikipedia, which recently lost a challenge to be excluded from the law’s strictest requirements, is facing the prospect of being forced to verify the ages of its UK contributors, which the organization has said could have disastrous consequences.

The UK law has also shown how ineffective existing age verification methods are. Users have been able to circumvent the checks by using selfies of video game characters, AI-generated images of ID documents and, of course, virtual private networks (VPNs).

As the EFF notes, VPNs are very widely used. The software allows people to browse the internet while masking their actual location. It’s used by activists, students and people who want to get around geoblocks built into streaming services. Many universities and businesses (including Engadget parent company Yahoo) require their students and workers to use VPNs in order to access certain information. Blocking VPNs would have serious repercussions for all of these groups.

The makers of several popular VPN services reported major spikes in UK demand after the Online Safety Act went into effect this summer, with ProtonVPN reporting a 1,400 percent surge in sign-ups. That’s also led to fears of a renewed crackdown on VPNs. Ofcom, the regulator tasked with enforcing the law, told TechRadar it was “monitoring” VPN usage, which has further fueled speculation it could try to ban or restrict their use. And here in the States, lawmakers in Wisconsin have proposed an age verification law that would require sites that host “harmful” content to also block VPNs.

While restrictions on VPNs are, for now, mostly theoretical, the fact that such measures are even being considered is alarming. Until now, VPN bans have been more closely associated with authoritarian countries without an open internet, like Russia and China. If we continue down a path of trying to put age gates up around every piece of potentially objectionable content, the internet could get a lot worse for everyone.