The FBI routinely misused a database gathered by the NSA for the specific purpose of finding foreign intelligence threats, searching it for everything from vetting to spying on relatives.

In doing so, it not only violated the law and the US constitution but knowingly lied to the faces of congressmen who were asking the intelligence services about this exact issue at government hearings, hearings that were intended to determine whether additional safeguards needed to be added to the program.

That is the upshot of newly declassified rulings of the secret Foreign Intelligence Surveillance Court (FISC), which decides issues of spying and surveillance within the United States.

A year-old ruling [PDF], released on Tuesday and still heavily redacted, shows that everything privacy advocates and a number of congressmen – particularly Senator Ron Wyden (D-OR) – feared about the program was true, and worse.

The program in question – Section 702 – is specifically designed only to let US government agencies search for evidence of foreign intelligence threats. Even so, the FBI gave itself carte blanche to search the same database for US citizens by stringing together a series of ridiculous legal justifications: that the data had been captured “incidentally”, and that subsequent queries of that data did not require a warrant because it had already been gathered.

Despite that situation, the FBI repeatedly assured lawmakers and the courts that it was using its powers in a very limited way. Senator Wyden was not convinced and used his position to ask questions about the program, the answers to which raised ever greater concerns.

For example, while the NSA was able to outline the process by which its staff was allowed to make searches on the database, including who was authorized to dig further, and it was able to give a precise figure for how many searches there had been, the FBI claimed it was literally not able to do so.

Free for all

Under questioning, it revealed that any FBI agent was allowed to search the database and any FBI agent was allowed to de-anonymize the data. The FBI also claimed it did not have a system to measure the number of search requests its agents carried out.

In a year-long standoff between Senator Wyden and the Director of National Intelligence, the government told Congress it was unable to provide a figure for the number of US citizens whose details had been brought up in searches – a practice that likely violated the Fourth Amendment.

Today’s release of the secret FISC opinion reveals that giving the FBI virtually unrestricted access to the database led to exactly the sort of behavior that people were concerned about: a vast number of searches, including many that were not remotely justified.

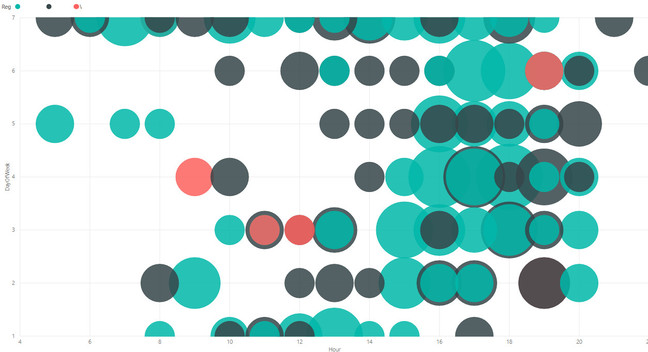

For example, the DNI told Congress that in 2016, the NSA had carried out 30,355 searches on US persons within the database’s metadata and 2,280 searches on the database’s content. The CIA had carried out 2,352 searches on content for US persons in the same 12-month period. The FBI said it had no way to measure the number of searches it ran.

But that, it turns out, was a bold-faced lie. Because we now know that the FBI carried out 6,800 queries of the database in a single day in December 2017 using social security numbers. In other words, the FBI was using the NSA’s database at least 80 times more frequently than the NSA itself.

The FBI’s use of the database – which, again, is specifically defined in law as only being allowed to be used for foreign intelligence matters – was completely routine. And as a result, agents started using it all the time for anything connected to their work, and sometimes their personal lives.

In the secret court opinion, now made public (but, again, still heavily redacted), the government was forced to concede that there were “fundamental misunderstandings” among FBI staff over what criteria they needed to meet before carrying out a search.
