Last December, a whopping 3 terabytes of unprotected data from the Oklahoma Securities Commission was uncovered by Greg Pollock, a researcher with cybersecurity firm UpGuard. It amounted to millions of files, many on sensitive FBI investigations, all of which were left wide open on a server with no password, accessible to anyone with an internet connection, Forbes can reveal.

“It represents a compromise of the entire integrity of the Oklahoma department of securities’ network,” said Chris Vickery, head of research at UpGuard, which is revealing its technical findings on Wednesday. “It affects an entire state level agency. … It’s massively noteworthy.”

A breach back to the ’80s

The Oklahoma department regulates all financial securities business happening in the state. It may come as little surprise, then, that the leak included information on FBI cases. But the amount and variety of data astonished Vickery and Pollock.

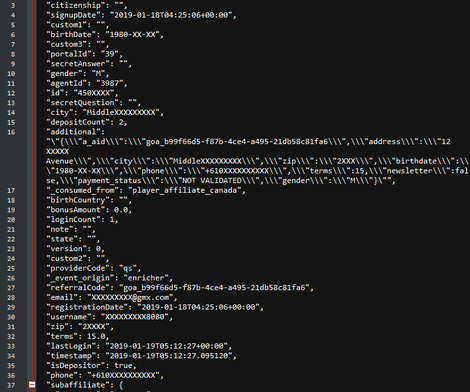

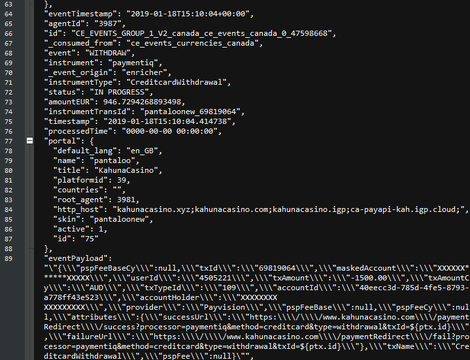

Vickery said the FBI files contained “all sorts of archive enforcement actions” dating back seven years (the earliest file creation date was 2012). The documents included spreadsheets with agent-filled timelines of interviews related to investigations, emails from parties involved in myriad cases and bank transaction histories. There were also copies of letters from subjects, witnesses and other parties involved in FBI investigations.

[…]

Just as concerning, the leak also included email archives stretching back 17 years, thousands of Social Security numbers and data from the 1980s onwards.

[…]

After Vickery and Pollock disclosed the breach, they informed the commission it had mistakenly left open what’s known as an rsync server. Such servers are typically used to back up large batches of data and, if that information is supposed to be secure, should be protected by a username and password.
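For administrators wondering what such a check might look like, here is a minimal sketch in Python. It assumes the server runs the standard rsync daemon, whose `rsyncd.conf` file uses the `auth users` and `secrets file` parameters to require a username and password for each shared module. The function name, the sample configuration and the module names are hypothetical, chosen for illustration; nothing here reflects the commission's actual setup or UpGuard's tooling.

```python
def find_open_modules(conf_text: str) -> list[str]:
    """Return rsync daemon modules that have no 'auth users' setting.

    Simplified parser: it ignores backslash line continuations and
    include directives, so treat the result as a hint, not an audit.
    """
    global_params: dict[str, str] = {}
    modules: dict[str, dict[str, str]] = {}
    current = global_params  # lines before the first [module] are global

    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = modules.setdefault(line[1:-1].strip(), {})
            continue
        if "=" in line:
            key, value = line.split("=", 1)
            current[key.strip().lower()] = value.strip()

    open_modules = []
    for name, params in modules.items():
        # A module setting overrides a default given in the global section.
        effective = {**global_params, **params}
        if not effective.get("auth users"):
            open_modules.append(name)
    return open_modules


# Hypothetical configuration, for illustration only.
SAMPLE = """
uid = nobody

[backups]
path = /srv/backups
read only = yes

[secure]
path = /srv/secure
auth users = alice
secrets file = /etc/rsyncd.secrets
"""

if __name__ == "__main__":
    print(find_open_modules(SAMPLE))
```

In this sample, the `backups` module would be flagged because it has no `auth users` line, meaning the daemon would serve it to anyone who can reach it. A password is only one layer; restricting which addresses can connect at all is a sensible complement.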

There were other signs of poor security within the leaked data. For instance, passwords for computers on the Oklahoma government’s network were revealed. They were “not complicated,” quipped Vickery. In one of the more absurd choices made by the department, it had stored an encrypted version of one document in the same file folder as a decrypted version. Passwords for remote access to agency computers were also leaked.

This is the latest in a series of incidents involving rsync servers. In July 2018, UpGuard revealed that Level One Robotics, a car manufacturing supply chain company, was exposing information in the same way as the Oklahoma government division. Companies with data exposed in that event included Volkswagen, Chrysler, Ford, Toyota, General Motors and Tesla.

For whatever reason, governments and corporate giants alike still don’t seem to realize how easy it is for hackers to constantly scan the Web for such leaks. Starting with basics like passwords would help them keep their secrets secure.

Source: Massive Oklahoma Government Data Leak Exposes 7 Years of FBI Investigations