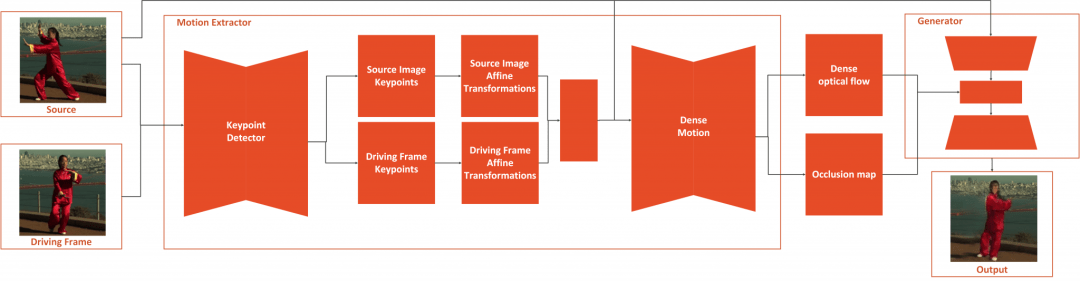

Let's explore a bit how this method works. The whole process is split into two parts: motion extraction and generation. The inputs are a source image and a driving video. The motion extractor uses an autoencoder to detect keypoints and extracts a first-order motion representation that consists of sparse keypoints and local affine transformations. These, along with the driving video, are passed to the dense motion network, which generates a dense optical flow and an occlusion map. The generator then uses the outputs of the dense motion network and the source image to render the target image.
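To make the "sparse keypoints plus local affine transformations" idea more concrete, here is a minimal NumPy sketch of a first-order (Taylor) approximation around a single keypoint, as I understand it from the paper. The function name and arguments are hypothetical and are not the repository's API; in the real model the keypoints and Jacobians are predicted by the keypoint detector, whereas here they are simply passed in.

```python
import numpy as np

def local_affine_motion(z, kp_source, kp_driving, jac_source, jac_driving):
    """Map driving-frame points to source-frame coordinates near one keypoint.

    Hypothetical helper, for illustration only:
      z           -- (N, 2) points in the driving frame
      kp_source   -- (2,) keypoint location in the source image
      kp_driving  -- (2,) the same keypoint in the driving frame
      jac_source  -- (2, 2) local affine matrix at the source keypoint
      jac_driving -- (2, 2) local affine matrix at the driving keypoint
    """
    # Combine both local affine maps: J = J_source * J_driving^{-1}
    J = jac_source @ np.linalg.inv(jac_driving)
    # First-order expansion around the keypoint:
    #   T(z) ~ kp_source + J (z - kp_driving)
    return kp_source + (z - kp_driving) @ J.T

# With identity Jacobians the motion reduces to a pure translation
# by the keypoint displacement.
points = np.array([[0.5, 0.5]])
shifted = local_affine_motion(
    points,
    kp_source=np.array([0.2, 0.2]),
    kp_driving=np.array([0.4, 0.4]),
    jac_source=np.eye(2),
    jac_driving=np.eye(2),
)
```

The dense motion network described above then combines several such local motions, one per keypoint, into a single dense flow field; that step is not shown here.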

The authors report that this work outperforms the state of the art on all of their benchmarks. Apart from that, it has features that other models just don't have. The really cool thing is that it works on different categories of images, meaning you can apply it to faces, bodies, cartoons, etc. This opens up a lot of possibilities. Another revolutionary thing about this approach is that you can now create good-quality deepfakes with a single image of the target object, using a pretrained model off the shelf, just as we use YOLO for object detection.

Building your own Deepfake

As mentioned, we can take already trained models and use our own source image and driving video to generate deepfakes. You can do so by following this Colab notebook.

In essence, you need to clone the repository and mount your Google Drive. Once that is done, upload your image and driving video to Drive. For the best results, make sure that the image and the video contain only the face. Use ffmpeg to crop the video if you need to. Then all you need is to run this piece of code:

```python
import imageio
from IPython.display import HTML
from skimage.transform import resize
from demo import make_animation  # demo.py from the cloned repository

# generator, kp_detector and display() come from earlier notebook cells.
source_image = imageio.imread('/content/gdrive/My Drive/first-order-motion-model/source_image.png')
driving_video = imageio.mimread('driving_video.mp4', memtest=False)

# Resize image and video to 256x256
source_image = resize(source_image, (256, 256))[..., :3]
driving_video = [resize(frame, (256, 256))[..., :3] for frame in driving_video]

predictions = make_animation(source_image, driving_video, generator, kp_detector,
                             relative=True, adapt_movement_scale=True)

HTML(display(source_image, driving_video, predictions).to_html5_video())
```

I ran this experiment with an image of Nikola Tesla and a video of myself.
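For the cropping step mentioned earlier, one option is to call ffmpeg's `crop` filter from Python. This is only a sketch: the helper names, file names and crop window below are placeholders of mine, and you would pick the window so that it covers just the face in your own video.

```python
import subprocess

def build_crop_command(src, dst, width, height, x, y):
    """Build an ffmpeg command that crops a width x height window.

    (x, y) is the top-left corner of the window in pixels.
    Hypothetical helper; it only assembles the argument list.
    """
    crop_filter = f"crop={width}:{height}:{x}:{y}"
    # -y overwrites dst if it already exists
    return ["ffmpeg", "-y", "-i", src, "-vf", crop_filter, dst]

def crop_video(src, dst, width, height, x, y):
    """Run ffmpeg (must be installed and on PATH) to crop the video."""
    subprocess.run(build_crop_command(src, dst, width, height, x, y), check=True)

# Example call with placeholder values:
# crop_video("raw_video.mp4", "driving_video.mp4", 480, 480, 400, 100)
```

A square window is a sensible choice here, since the frames are resized to 256x256 before being passed to the model.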

Conclusion

We are living in a weird age in a weird world. It is easier than ever to create fake videos and news and to distribute them. It is getting harder and harder to tell what is true and what is not. It seems that nowadays we cannot trust our own senses anymore. Even though fake video detectors are being built as well, it is just a matter of time before the information gap becomes so small that even the best detectors cannot tell whether a video is genuine. So, in the end, one piece of advice: be skeptical. Take every piece of information you get with a bit of suspicion, because things might not be quite as they seem.

Thank you for reading!

Source: Create Deepfakes in 5 Minutes with First Order Model Method

Robin Edgar
