Analysis: IBM boasts that machine learning is not just quicker on its POWER servers than on TensorFlow in the Google Cloud, it’s 46 times quicker.

Back in February, Google software engineer Andreas Sterbenz wrote about using Google Cloud Machine Learning and TensorFlow for click prediction in large-scale advertising and recommendation scenarios.

He trained a model to predict display ad clicks on the Criteo Labs click logs, which are over 1TB in size and contain feature values and click feedback from millions of display ads.

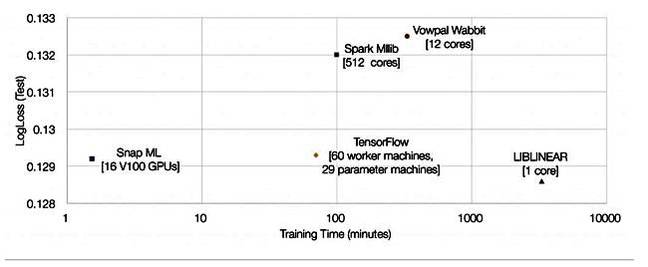

Data pre-processing (60 minutes) was followed by the actual learning, using 60 worker machines and 29 parameter machines for training. The model took 70 minutes to train, with an evaluation loss of 0.1293. We understand this is a rough indicator of result accuracy.
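As a rough illustration of what an evaluation loss measures, here is a minimal Python sketch. It assumes the reported figure is an average logistic (cross-entropy) loss, which is the usual choice for click prediction but is not stated in the text above. The function name `log_loss` and the toy labels and probabilities are made up for the example.

```python
import math

def log_loss(labels, probabilities, eps=1e-15):
    """Average binary cross-entropy between 0/1 labels and predicted click probabilities.

    Assumption: this is the kind of loss behind the reported evaluation figure.
    """
    total = 0.0
    for y, p in zip(labels, probabilities):
        # Clamp probabilities so log() never sees exactly 0 or 1.
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

# Toy data: three impressions without a click, one with a click.
labels = [0, 0, 0, 1]
predicted = [0.1, 0.2, 0.05, 0.7]
print(round(log_loss(labels, predicted), 4))
```

Lower values mean the predicted probabilities sit closer to the observed clicks, which is why the number serves as a rough accuracy indicator.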

Sterbenz then tried different modelling techniques to reduce the evaluation loss, all of which took longer. He eventually used a deep neural network trained for three epochs (an epoch being one full pass in which every training vector is used once to update the weights), which took 78 hours.
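To make the notion of an epoch concrete, the sketch below trains a tiny logistic regression with stochastic gradient descent. It is a simplified stand-in, not Sterbenz's deep neural network or TensorFlow code; the function names, learning rate and toy data are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs, learning_rate=0.1):
    """Plain SGD for logistic regression; returns the weights and the update count."""
    weights = [0.0] * len(examples[0][0])
    updates = 0
    for _ in range(epochs):
        # One epoch: every training example is used exactly once.
        for features, label in examples:
            prediction = sigmoid(sum(w * x for w, x in zip(weights, features)))
            error = prediction - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            updates += 1
    return weights, updates

# Toy data: first feature is a constant bias term, second decides the label.
examples = [([1.0, 1.0], 1), ([1.0, -1.0], 0)]
weights, updates = train(examples, epochs=3)
print(updates)  # epochs * number of examples
```

The cost of training scales with the number of epochs times the number of examples, which is why extra passes over a terabyte-scale dataset add up quickly.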

[…]

Thomas Parnell and Celestine Dünner at IBM Research in Zurich used the same source data – Criteo Terabyte Click Logs, with 4.2 billion training examples and 1 million features – and the same ML model, logistic regression, but a different ML library. It’s called Snap Machine Learning.

They ran Snap ML on four Power System AC922 servers, with a total of eight POWER9 CPUs and 16 Nvidia Tesla V100 GPUs. Instead of taking 70 minutes, training completed in 91.5 seconds, 46 times faster.
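The 46x figure can be checked from the two reported training times. The short Python calculation below uses only numbers quoted above; the variable names are arbitrary.

```python
# Reported training times from the two runs.
tensorflow_seconds = 70 * 60   # 70 minutes on Google Cloud
snap_ml_seconds = 91.5         # Snap ML on four AC922 servers

speedup = tensorflow_seconds / snap_ml_seconds
print(f"{speedup:.1f}x")  # 45.9x, which rounds to the quoted 46x

# Hardware per server, derived from the quoted totals.
servers, cpus, gpus = 4, 8, 16
print(cpus // servers, "CPUs and", gpus // servers, "GPUs per server")
```

The comparison covers the 70-minute training step only, not the 60 minutes of data pre-processing reported for the Google run.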

They prepared a chart showing their Snap ML, the Google TensorFlow and three other results:

A 46x speed improvement over TensorFlow is not to be sneezed at. What did they attribute it to?

They say Snap ML features several hierarchical levels of parallelism: it partitions the workload among different nodes in a cluster, takes advantage of accelerator units, and exploits multi-core parallelism on the individual compute units:

- First, data is distributed across the individual worker nodes in the cluster

- On a node, data is split between the host CPU and the accelerating GPUs, with CPUs and GPUs operating in parallel

- Data is sent to the multiple cores in a GPU and the CPU workload is multi-threaded

Snap ML has nested hierarchical algorithmic features to take advantage of these three levels of parallelism.
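The three levels of data distribution can be sketched in plain Python. This is only an illustration of nested partitioning, not Snap ML's API or its actual algorithm, which the text above does not detail. The function names, the even split between devices, and the counts used are all assumptions.

```python
def split_evenly(items, parts):
    """Split a list into `parts` contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        chunks.append(items[start:start + size])
        start += size
    return chunks

def partition(examples, nodes, devices_per_node, lanes_per_device):
    """Map (node, device, lane) to the slice of examples that lane would process."""
    plan = {}
    # Level 1: distribute data across worker nodes in the cluster.
    for n, node_chunk in enumerate(split_evenly(examples, nodes)):
        # Level 2: split each node's share between its CPU and GPUs.
        for d, device_chunk in enumerate(split_evenly(node_chunk, devices_per_node)):
            # Level 3: spread each device's share over its cores or threads.
            for l, lane_chunk in enumerate(split_evenly(device_chunk, lanes_per_device)):
                plan[(n, d, l)] = lane_chunk
    return plan

# Hypothetical layout: 4 nodes, 3 devices per node, 2 lanes per device.
examples = list(range(48))
plan = partition(examples, nodes=4, devices_per_node=3, lanes_per_device=2)
print(len(plan), plan[(0, 0, 0)])
```

A real system would weight the split by each device's throughput and memory rather than dividing evenly, and would also need to combine the partial model updates from every level.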

Source: IBM claims its machine learning library is 46x faster than TensorFlow • The Register

Robin Edgar
