Robots normally need to be programmed in order to get them to perform a particular task, but they can be coaxed into writing the instructions themselves with the help of machine learning, according to research published in Science.

Engineers at Vicarious AI, a robotics startup based in California, USA, have built what they call a “visual cognitive computer” (VCC), a software platform connected to a camera system and a robot gripper. Given a set of visual clues, the VCC writes a short program of instructions to be followed by the robot so it knows how to move its gripper to do simple tasks.

“Humans are good at inferring the concepts conveyed in a pair of images and then applying them in a completely different setting,” the paper states.

“The human-inferred concepts are at a sufficiently high level to be effortlessly applied in situations that look very different, a capacity so natural that it is used by IKEA and LEGO to make language-independent assembly instructions.”

Don’t get your hopes up, however: these robots can’t put your flat-pack table or chair together for you quite yet. But they can do very basic jobs, like moving a block backwards and forwards.

It works like this. First, an input and an output image are given to the system. The input image is a jumble of coloured objects of various shapes and sizes, and the output image is an ordered arrangement of the same objects. For example, the input image could show a number of red blocks and the output image could show all the red blocks arranged in a circle. Think of the pair as a before-and-after picture.

The VCC works out which commands the robot needs to perform to rearrange the objects in front of it, so that the ‘before’ image turns into the ‘after’ image. The system is trained with supervised learning to learn which action corresponds to which command.
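To make the idea of inferring a program from before-and-after pairs concrete, here is a minimal sketch in Python. It is a toy illustration and not Vicarious’s method: the scene encoding, the eight made-up commands (`red_left`, `blue_up` and so on) and the brute-force search in `induce` are all assumptions chosen for brevity. It simply returns the shortest command sequence that turns every ‘before’ scene into its ‘after’ scene.

```python
from itertools import product

# A scene is a frozenset of (colour, x, y) tuples on a grid.
# This encoding is a hypothetical stand-in for what a camera would report.

def shift(colour, dx, dy):
    """Build a command that moves every object of one colour by (dx, dy)."""
    def command(scene):
        return frozenset(
            (c, x + dx, y + dy) if c == colour else (c, x, y)
            for (c, x, y) in scene
        )
    return command

DIRECTIONS = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}

# Hypothetical command set: 2 colours x 4 directions = 8 commands.
COMMANDS = {
    f"{colour}_{name}": shift(colour, dx, dy)
    for colour in ("red", "blue")
    for name, (dx, dy) in DIRECTIONS.items()
}

def run(program, scene):
    """Apply a sequence of command names to a scene, in order."""
    for name in program:
        scene = COMMANDS[name](scene)
    return scene

def induce(pairs, max_length=4):
    """Return the shortest program consistent with every (before, after) pair.

    Exhaustive search, shortest programs first; returns None if nothing fits.
    """
    for length in range(1, max_length + 1):
        for program in product(COMMANDS, repeat=length):
            if all(run(program, before) == after for before, after in pairs):
                return program
    return None

# Two training pairs that both show "move the red block two cells right".
training_pairs = [
    (frozenset({("red", 0, 0), ("blue", 3, 3)}),
     frozenset({("red", 2, 0), ("blue", 3, 3)})),
    (frozenset({("red", 1, 2), ("blue", 0, 0)}),
     frozenset({("red", 3, 2), ("blue", 0, 0)})),
]

program = induce(training_pairs)
print(program)  # ('red_right', 'red_right')

# The induced program carries over to a scene that was never seen in training.
new_scene = frozenset({("red", 5, 5), ("blue", 1, 1)})
print(sorted(run(program, new_scene)))  # [('blue', 1, 1), ('red', 7, 5)]
```

Because the search looks for one program that explains all the pairs at once, a second example is enough here to rule out sequences that only fit the first pair by coincidence.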

Dileep George, cofounder of Vicarious, told The Register: “Up to ten pairs [of images are used] for training, and ten pairs for testing. Most concepts are learned with only about five examples.”

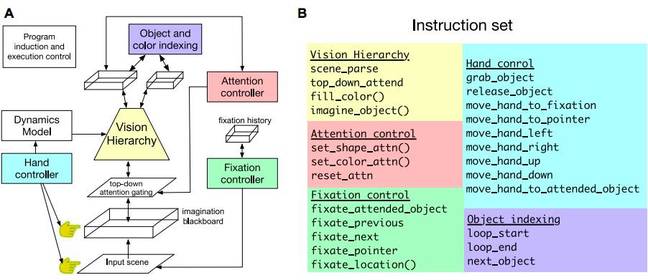

Here’s a diagram of how it works:

A: A graph describing the robot’s components. B: The list of commands the VCC can use. Image credit: Vicarious AI

The left-hand side is a schematic of the parts that control the robot. The visual hierarchy looks at the objects in front of the camera and categorises them by shape and colour. The attention controller decides which objects to focus on, while the fixation controller directs the robot’s gaze to those objects before the hand controller operates the robot’s arms to move them about.

The robot doesn’t need many training examples because the VCC controller has only 24 commands to choose from, listed on the right-hand side of the diagram.
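As a rough sense of scale, the short Python sketch below counts how many distinct command sequences exist up to a given length. It rests on simplifying assumptions that are not from the paper: commands take no arguments, any command may follow any other, and every sequence counts as a candidate. The function name `candidate_programs` and the comparison figure of 100 commands are hypothetical; only the figure of 24 commands comes from the article.

```python
def candidate_programs(command_count, max_length):
    """Count all command sequences of length 1..max_length.

    Assumes argument-free commands and no ordering constraints,
    which is a simplification for illustration only.
    """
    return sum(command_count ** n for n in range(1, max_length + 1))

# 24 commands, programs of up to three steps: 24 + 576 + 13824.
print(candidate_programs(24, 3))   # 14424

# A hypothetical 100-command vocabulary, for comparison.
print(candidate_programs(100, 3))  # 1010100
```

Under these assumptions, a smaller command vocabulary leaves far fewer candidate programs for a handful of examples to choose between.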

Source: Watch an AI robot program itself to, er, pick things up and push them around • The Register

Robin Edgar
