It’s pretty much the way of the world: beyond the basic enshittification story that has been so well told over the past year or so about how companies get worse and worse as they get more and more powerful, there’s also the well-known concept of successful innovative companies “pulling up the ladder” behind them, using the regulatory process to make it impossible for other companies to follow their own path to success. We’ve talked about this in the sense of political entrepreneurship, which is when the main entrepreneurial effort is not to innovate in newer and better products for customers, but rather to use the political system for personal gain and to prevent competitors from having the same opportunities.

It happens all too frequently. And it’s been happening lately with the big internet companies, which relied on the open internet to become successful, but under massive pressure from regulators (and the media), keep shooting the open internet in the back, each time they can present themselves as “supportive” of some dumb regulatory regime. Facebook did it six years ago by supporting FOSTA wholeheartedly, which was the key tide shift that made the law viable in Congress.

And, now, it appears that Google is going down that same path. There have been hints here and there, such as when it mostly gave up the fight on net neutrality six years ago. However, Google had still appeared to be active in various fights to protect an open internet.

But, last week, Google took a big step towards pulling up the open internet ladder behind it, which got almost no coverage (and what coverage it got was misleading). And, for the life of me, I don’t understand why it chose to do this now. It’s one of the dumbest policy moves I’ve seen Google make in ages, and seems like a complete unforced error.

Last Monday, Google announced “a policy framework to protect children and teens online,” which was echoed by subsidiary YouTube, which posted basically the same thing, talking about its “principled approach for children and teenagers.” Both of these pushed not just a “principled approach” for companies to take, but a legislative model (and I hear that they’re out pushing “model bills” across legislatures as well).

The “legislative” model is, effectively, California’s Age Appropriate Design Code. Yes, the very law that was declared unconstitutional just a few weeks before Google basically threw its weight behind the approach. What’s funny is that many, many people have (incorrectly) believed that Google was some sort of legal mastermind behind the NetChoice lawsuits challenging California’s law and other similar laws, when the reality appears to be that Google knows full well that it can handle the requirements of the law, but smaller competitors cannot. Google likes the law. It wants more of them, apparently.

The model includes “age assurance” (which is effectively age verification, though everyone pretends it’s not), greater parental surveillance, and the compliance nightmare of “impact assessments” (we talked about this nonsense in relation to the California law). Again, for many companies this is a good idea. But just because something is a good idea for companies to do does not mean that it should be mandated by law.

But that’s exactly what Google is pushing for here, even as a law that more or less mimics its framework was just found to be unconstitutional. While cynical people will say that maybe Google is supporting these policies hoping that they will continue to be found unconstitutional, I see little evidence to support that. Instead, it really sounds like Google is fully onboard with these kinds of duty of care regulations that will harm smaller competitors, but which Google can handle just fine.

It’s pulling up the ladder behind it.

And yet, the press coverage focused on the fact that this was being presented as an “alternative” to a full-on ban on kids under 18 using social media. The Verge framed this as “Google asks Congress not to ban teens from social media,” leaving out that it was Google asking Congress to basically make it impossible for any site other than the largest, richest companies to be able to allow teens on social media. Same thing with TechCrunch, which framed it as Google lobbying against age verification.

But… it’s not? It’s basically lobbying for age verification, just in the guise of “age assurance,” which is effectively “age verification, but if you’re a smaller company you can get it wrong some undefined amount of time, until someone sues you.” I mean, what’s here is not “lobbying against age verification,” it’s basically saying “here’s how to require age verification.”

> A good understanding of user age can help online services offer age-appropriate experiences. That said, any method to determine the age of users across services comes with tradeoffs, such as intruding on privacy interests, requiring more data collection and use, or restricting adult users’ access to important information and services. Where required, age assurance – which can range from declaration to inference and verification – should be risk-based, preserving users’ access to information and services, and respecting their privacy. Where legislation mandates age assurance, it should do so through a workable, interoperable standard that preserves the potential for anonymous or pseudonymous experiences. It should avoid requiring collection or processing of additional personal information, treating all users like children, or impinging on the ability of adults to access information. More data-intrusive methods (such as verification with “hard identifiers” like government IDs) should be limited to high-risk services (e.g., alcohol, gambling, or pornography) or age correction. Moreover, age assurance requirements should permit online services to explore and adapt to improved technological approaches. In particular, requirements should enable new, privacy-protective ways to ensure users are at least the required age before engaging in certain activities. Finally, because age assurance technologies are novel, imperfect, and evolving, requirements should provide reasonable protection from liability for good-faith efforts to develop and implement improved solutions in this space.

Much like Facebook caving on FOSTA, this is Google caving on age verification and other “duty of care” approaches to regulating the way kids have access to the internet. It’s pulling up the ladder behind itself, knowing that it was able to grow without having to take these steps, and making sure that none of the up-and-coming challengers to Google’s position will have the same freedom to do so.

And, for what? So that Google can go to regulators and say “look, we’re not against regulations, here’s our framework”? But Google has smart policy people. They have to know how this plays out in reality. Supporting FOSTA completely backfired on Facebook (and the open internet). This approach will do the same.

Not only will these laws inevitably be used against the companies themselves, they’ll also be weaponized and modified by policymakers who will make them even worse and even more dangerous, all while pointing to Google’s “blessing” of this approach as an endorsement.

For years, Google had been somewhat unique in continuing to fight for the open internet long after many other companies were switching over to ladder pulling. There were hints that Google was going down this path in the past, but with this policy framework, the company has now made it clear that it has no intention of being a friend to the open internet any more.

Well, with Chrome-only support, DNS over HTTPS, and browser privacy sandboxing, Google has been off the “do no evil” path for some time, and has been closing off the openness of the web by rebuilding or crushing competition.

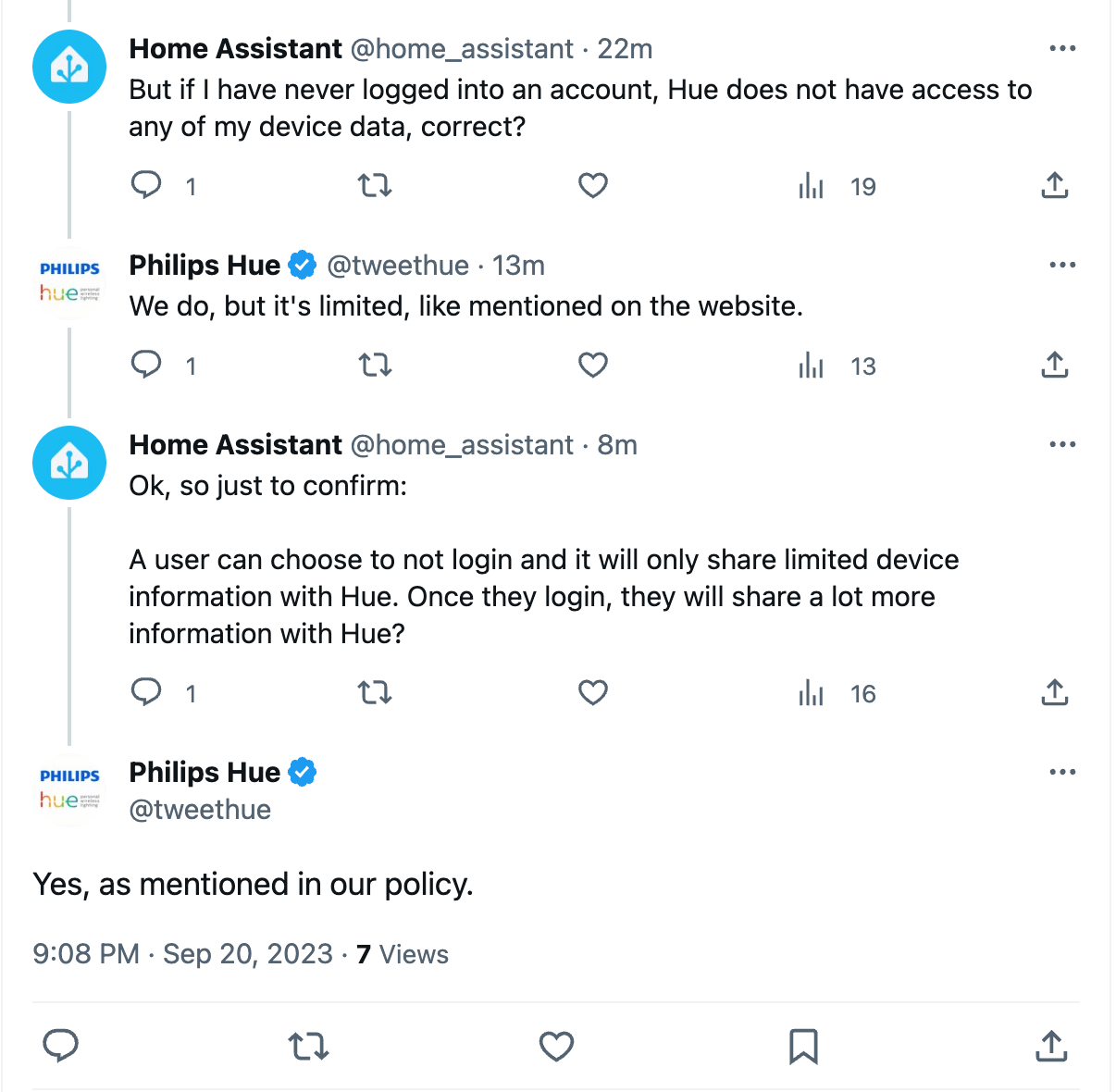

Twitter conversation with Philips Hue (source:
