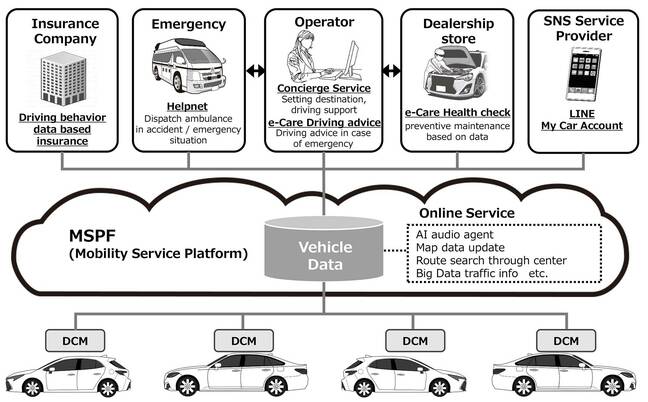

A threat intelligence firm called HYAS, a private company that tries to prevent or investigate hacks against its clients, is buying location data harvested from ordinary apps installed on people’s phones around the world, and using it to unmask hackers. The company is a business, not a law enforcement agency, and claims to be able to track people to their “doorstep.”

The news highlights the complex supply chain and sale of location data, traveling from apps whose users are in some cases unaware that the software is selling their location, through to data brokers, and finally to end clients who use the data itself. The news also shows that while some location firms repeatedly reassure the public that their data is focused on the high level, aggregated, pseudonymous tracking of groups of people, some companies do buy and use location data from a largely unregulated market explicitly for the purpose of identifying specific individuals.

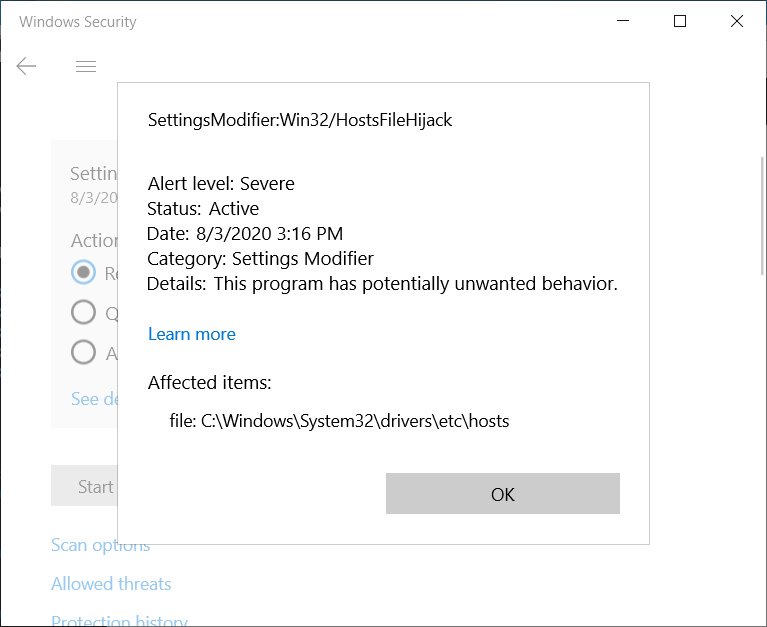

HYAS’ location data comes from X-Mode, a company that started with an app named “Drunk Mode,” designed to prevent college students from making drunk phone calls, and has since pivoted to selling user data from a wide swath of apps. Apps that mention X-Mode in their privacy policies include Perfect365, a beauty app, and other innocuous-looking apps such as an MP3 file converter.

“As a TI [threat intelligence] tool it’s incredible, but ethically it stinks,” a source in the threat intelligence industry who received a demo of HYAS’ product told Motherboard. Motherboard granted the source anonymity as they weren’t authorized by their company to speak to the press.

[…]

HYAS differs in that it provides a concrete example of a company deliberately sourcing mobile phone location data with the intention of identifying and pinpointing particular people and providing that service to its own clients. Independently of Motherboard, the office of Senator Ron Wyden, which has been investigating the location data market, also discovered HYAS was using mobile location data. A Wyden aide said they had spoken with HYAS about the use of the data. HYAS said the mobile location data is used to unmask people who may be using a Virtual Private Network (VPN) to hide their identity, according to the Wyden aide.

In a webinar uploaded to HYAS’ website, Todd Thiemann, VP of marketing at the company, describes how HYAS used location data to track a suspected hacker.

“We found out it was the city of Abuja, and on a city block in an apartment building that you can see down there below,” he says during the webinar. “We found the command and control domain used for the compromised employees, and used this threat actor’s login into the registrar, along with our geolocation granular mobile data to confirm right down to his house. We also got his first and last name, and verified his cellphone with a Nigerian mobile operator.”

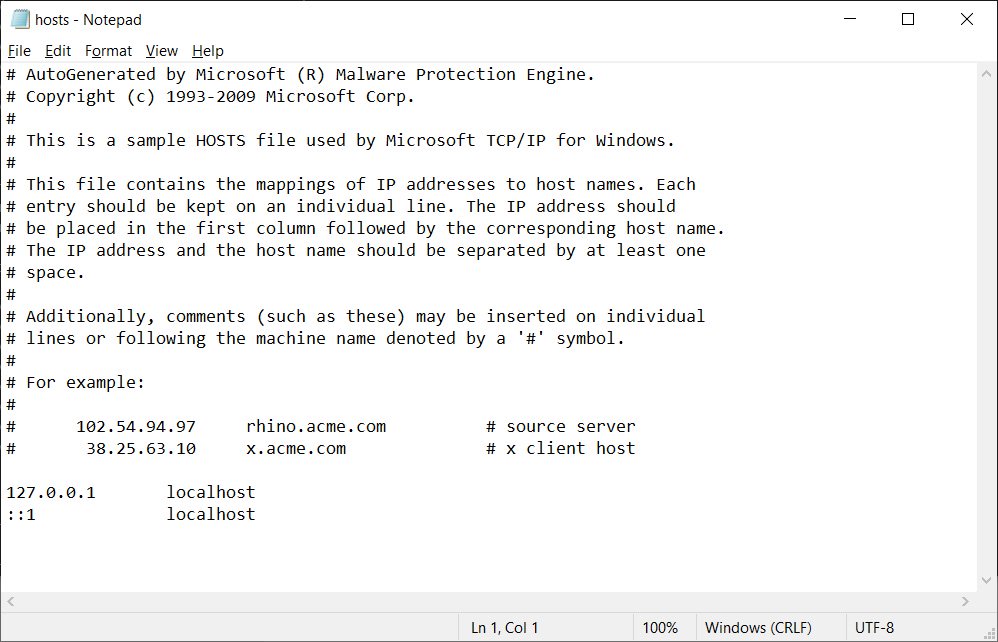

A screenshot of a webinar given by HYAS, in which the company explains how it has used mobile application location data.

On its website, HYAS claims to have some Fortune 25 companies and large tech firms, as well as law enforcement and intelligence agencies, as clients.

[…]

Customers can include banks who want to get a heads-up on whether a freshly dumped cache of stolen credit card data belongs to them; a retailer trying to protect themselves from hackers; or a business checking if any of their employees’ login details are being traded by cybercriminals.

Some threat intelligence companies also sell services to government agencies, including the FBI, DHS, and Secret Service. The Department of Justice often acknowledges the work of particular threat intelligence companies in the department’s announcements of charges or indictments against hackers and other types of criminals.

But some other members of the threat intelligence industry criticized HYAS’ use of mobile app location data. The CEO of another threat intelligence firm told Motherboard that their company does not use the same sort of information that HYAS does.

The threat intelligence source who originally alerted Motherboard to HYAS recalled “being super shook at how they collected it,” referring to the location data.

A senior employee of a third threat intelligence firm said that location data is not hard to buy.

[…]

Motherboard found several location data companies that list HYAS in their privacy policies. One of those is X-Mode, a company that plants its own code into ordinary smartphone apps to then harvest location information. An X-Mode spokesperson told Motherboard in an email that the company’s data collecting code, or software development kit (SDK), is in over 400 apps and gathers information on 60 million global monthly users on average. X-Mode also develops some of its own apps which use location data, including parental monitoring app PlanC and fitness tracker Burn App.

“Whatever your need, the XDK Visualizer is here to show you that our signature SDK is too legit to quit (literally, it’s always on),” reads the description for another of X-Mode’s own apps, which visualizes the company’s data collection to attract clients.

“They’re like many location trackers but seem more aggressive to be honest,” Will Strafach, founder of the app Guardian, which alerts users to other apps accessing their location data, told Motherboard in an online chat. In January, X-Mode acquired the assets of Location Sciences, another location firm, expanding X-Mode’s dataset.

[…]

Motherboard then identified a number of apps whose own privacy policies mention X-Mode. They included Perfect365, a beauty-focused app that people can use to virtually try on different types of makeup with their device’s camera.

[…]

Various government agencies have bought access to location data from other companies. Last month, Motherboard found that U.S. Customs and Border Protection (CBP) paid $476,000 to a firm that sells phone location data. CBP has used the data to scan parts of the U.S. border, and the Internal Revenue Service (IRS) tried to use the same data to track criminal suspects but was unsuccessful.