If you look at the current climate, the largest companies are the ones that hook you into their channel, whether it is a game, a website, shopping or social media. Quite a lot of research has been done into how much time we spend watching TV and looking at our mobiles. The numbers differ, but all of them are surprisingly high: The New York Post says Americans check their phones 80 times per day, The Daily Mail says 110 times, Inc cites a study from Qualtrics and Accel that puts it at 150 times, and Business Insider reports that people touch their phones 2617 times per day.

This behaviour is nurtured, and there is quite a bit of research on exactly how these companies do it:

Social Networking Sites and Addiction: Ten Lessons Learned (academic paper)

Online social networking sites (SNSs) have gained increasing popularity in the last decade, with individuals engaging in SNSs to connect with others who share similar interests. The perceived need to be online may result in compulsive use of SNSs, which in extreme cases may result in symptoms and consequences traditionally associated with substance-related addictions. In order to present new insights into online social networking and addiction, in this paper, 10 lessons learned concerning online social networking sites and addiction based on the insights derived from recent empirical research will be presented. These are: (i) social networking and social media use are not the same; (ii) social networking is eclectic; (iii) social networking is a way of being; (iv) individuals can become addicted to using social networking sites; (v) Facebook addiction is only one example of SNS addiction; (vi) fear of missing out (FOMO) may be part of SNS addiction; (vii) smartphone addiction may be part of SNS addiction; (viii) nomophobia may be part of SNS addiction; (ix) there are sociodemographic differences in SNS addiction; and (x) there are methodological problems with research to date. These are discussed in turn. Recommendations for research and clinical applications are provided.

Hooked: How to Build Habit-Forming Products (Book)

Why do some products capture widespread attention while others flop? What makes us engage with certain products out of sheer habit? Is there a pattern underlying how technologies hook us?

Nir Eyal answers these questions (and many more) by explaining the Hook Model—a four-step process embedded into the products of many successful companies to subtly encourage customer behavior. Through consecutive “hook cycles,” these products reach their ultimate goal of bringing users back again and again without depending on costly advertising or aggressive messaging.

7 Ways Facebook Keeps You Addicted (and how to apply the lessons to your products) (article)

One of the key reasons why it is so addictive is “operant conditioning”. It is based on the scientific principle of variable rewards, discovered by B. F. Skinner (an early exponent of the school of behaviourism) in the 1930s while performing experiments with rats.

The secret?

Not rewarding all actions but only randomly.

Most of our emails are boring business emails and occasionally we find an enticing email that keeps us coming back for more. That’s variable reward.

That’s one way Facebook creates addiction.
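
To make the "only randomly" idea concrete, here is a minimal Python sketch that compares a predictable reward schedule with a random one. It is an illustration only, not taken from the article: the function names, the 1-in-5 reward rate and the seed are all assumptions chosen for the example, and rewarding each action with a fixed probability is just one simple way to approximate a variable reward schedule.

```python
import random


def fixed_schedule(n_actions, every=5):
    """Reward every `every`-th action, so the next reward is predictable."""
    return [(i + 1) % every == 0 for i in range(n_actions)]


def variable_schedule(n_actions, probability=0.2, seed=None):
    """Reward each action independently with `probability` (assumed model)."""
    rng = random.Random(seed)
    return [rng.random() < probability for _ in range(n_actions)]


def gaps_between_rewards(schedule):
    """Count how many actions were needed to reach each successive reward."""
    gaps, count = [], 0
    for rewarded in schedule:
        count += 1
        if rewarded:
            gaps.append(count)
            count = 0
    return gaps


if __name__ == "__main__":
    # Fixed schedule: every gap has the same length.
    print("fixed:   ", gaps_between_rewards(fixed_schedule(50)))
    # Variable schedule: gap lengths differ from one reward to the next.
    print("variable:", gaps_between_rewards(variable_schedule(50, seed=1)))
```

With the fixed schedule every gap is the same length, so you always know when the next reward is due. With the variable schedule the average rate is similar, but any single check of the inbox or feed might be the one that pays off.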

The Secret Ways Social Media Is Built for Addiction

On February 9, 2009, Facebook introduced the Like button. Initially, the button was an innocent thing. It had nothing to do with hijacking the social reward systems of a user’s brain.

“The main intention I had was to make positivity the path of least resistance,” explains Justin Rosenstein, one of the four Facebook designers behind the button. “And I think it succeeded in its goals, but it also created large unintended negative side effects. In a way, it was too successful.”

Today, most of us reach for Snapchat, Instagram, Facebook, or Twitter with one vague hope in mind: maybe someone liked my stuff. And it’s this craving for validation, experienced by billions around the globe, that’s currently pushing platform engagement in ways that in 2009 were unimaginable. But more than that, it’s driving profits to levels that were previously impossible.

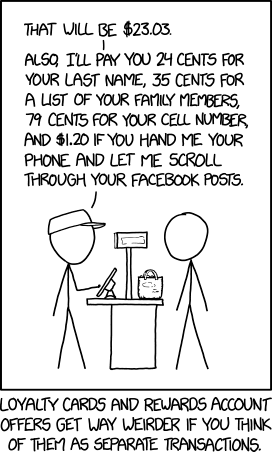

“The attention economy” is a relatively new term. It describes the supply and demand of a person’s attention, which is the commodity traded on the internet. The business model is simple: the more attention a platform can pull, the more effective its advertising space becomes, allowing it to charge advertisers more.

Behavioral Game Design (article)

Every computer game is designed around the same central element: the player. While the hardware and software for games may change, the psychology underlying how players learn and react to the game is a constant. The study of the mind has actually come up with quite a few findings that can inform game design, but most of these have been published in scientific journals and other esoteric formats inaccessible to designers. Ironically, many of these discoveries used simple computer games as tools to explore how people learn and act under different conditions.

The techniques that I’ll discuss in this article generally fall under the heading of behavioral psychology. Best known for the work done on animals in the field, behavioral psychology focuses on experiments and observable actions. One hallmark of behavioral research is that most of the major experimental discoveries are species-independent and can be found in anything from birds to fish to humans. What behavioral psychologists look for (and what will be our focus here) are general “rules” for learning and for how minds respond to their environment. Because of the species- and context-free nature of these rules, they can easily be applied to novel domains such as computer game design. Unlike game theory, which stresses how a player should react to a situation, this article will focus on how they really do react to certain stereotypical conditions.

What is being offered here is not a blueprint for perfect games, it is a primer to some of the basic ways people react to different patterns of rewards. Every computer game is implicitly asking its players to react in certain ways. Psychology can offer a framework and a vocabulary for understanding what we are already telling our players.

5 Creepy Ways Video Games Are Trying to Get You Addicted (article)

The Slot Machine in Your Pocket (brilliant article!)

When we get sucked into our smartphones or distracted, we think it’s just an accident and our responsibility. But it’s not. It’s also because smartphones and apps hijack our innate psychological biases and vulnerabilities.

I learned about our minds’ vulnerabilities when I was a magician. Magicians start by looking for blind spots, vulnerabilities and biases of people’s minds, so they can influence what people do without them even realizing it. Once you know how to push people’s buttons, you can play them like a piano. And this is exactly what technology does to your mind. App designers play your psychological vulnerabilities in the race to grab your attention.

I want to show you how they do it, and offer hope that we have an opportunity to demand a different future from technology companies.

If you’re an app, how do you keep people hooked? Turn yourself into a slot machine.

There is also a backlash against this movement.

How Technology is Hijacking Your Mind — from a Magician and Google Design Ethicist

I’m an expert on how technology hijacks our psychological vulnerabilities. That’s why I spent the last three years as a Design Ethicist at Google caring about how to design things in a way that defends a billion people’s minds from getting hijacked.

Humanetech.com

Technology is hijacking our minds and society.

Our world-class team of deeply concerned former tech insiders and CEOs intimately understands the culture, business incentives, design techniques, and organizational structures driving how technology hijacks our minds.

Since 2013, we’ve raised awareness of the problem within tech companies and for millions of people through broad media attention, convened top industry executives, and advised political leaders. Building on this start, we are advancing thoughtful solutions to change the system.

Why is this problem so urgent?

Technology that tears apart our common reality and truth, constantly shreds our attention, or causes us to feel isolated makes it impossible to solve the world’s other pressing problems like climate change, poverty, and polarization.

No one wants technology like that. Which means we’re all actually on the same team: Team Humanity, to realign technology with humanity’s best interests.

What is Time Well Spent (Part I): Design Distinctions

With Time Well Spent, we want technology that cares about helping us spend our time, and our lives, well – not seducing us into the most screen time, always-on interruptions or distractions.

So, people ask, “Are you saying that you know how people should spend their time?” Of course not. Let’s first establish what Time Well Spent isn’t:

It is not a universal, normative view of how people should spend their time

It is not saying that screen time is bad, or that we should turn it all off.

It is not saying that specific categories of apps (like social media or games) are bad.