[…]

graduate students at Northeastern University were able to organize and beat back an attempt to introduce invasive surveillance devices that had been quietly placed under desks at their school.

Early in October, Senior Vice Provost David Luzzi installed motion sensors under all the desks at the school’s Interdisciplinary Science & Engineering Complex (ISEC), a facility used by graduate students and home to the “Cybersecurity and Privacy Institute,” which studies surveillance. The sensors were installed at night, without students’ knowledge or consent, and when students pressed for an explanation, they were told the sensors were part of a study on “desk usage,” according to a blog post by Max von Hippel, a Privacy Institute PhD candidate who wrote about the situation for the Tech Workers Coalition’s newsletter.

[…]

In response, students began raising concerns about the sensors, and Luzzi sent out an email attempting to address them.

[…]

“The results will be used to develop best practices for assigning desks and seating within ISEC (and EXP in due course).”

To that end, Luzzi wrote, the university had deployed “a Spaceti occupancy monitoring system” that would use heat sensors at groin level to “aggregate data by subzones to generate when a desk is occupied or not.” Luzzi added that the data would be anonymized, aggregated to look at “themes” rather than individual time at assigned desks, kept out of evaluations, and not shared with the students’ supervisors. Following that email, an impromptu listening session was held in the ISEC.

At this first listening session, Luzzi asked grad student attendees to “trust the university since you trust them to give you a degree.” He also maintained that “we are not doing any science here” as another defense of the decision not to seek IRB approval.

“He just showed up. We’re all working, we have paper deadlines and all sorts of work to do. So he didn’t tell us he was coming, showed up demanding an audience, and a bunch of students spoke with him,”

[…]

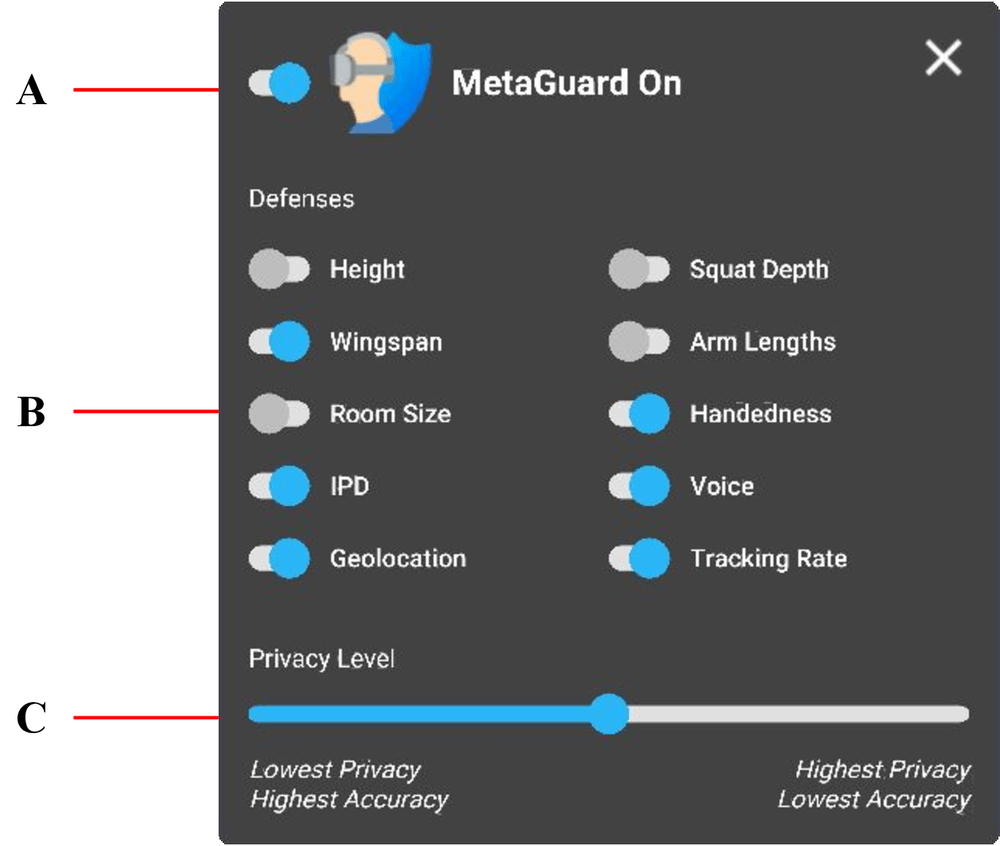

After that, students at the Privacy Institute, which specializes in studying surveillance and reversing its harms, started removing the sensors, hacking into them, and writing an open-source guide so other students could do the same. Luzzi had claimed the devices were secure and their data encrypted, but Privacy Institute students found they were relatively insecure and unencrypted.

[…]

After hacking the devices, students wrote an open letter to Luzzi and university president Joseph E. Aoun asking for the sensors to be removed because they were intimidating, part of a poorly conceived study, and deployed without IRB approval, even though human subjects were at the center of the so-called study.

“Resident in ISEC is the Cybersecurity and Privacy Institute, one of the world’s leading groups studying privacy and tracking, with a particular focus on IoT devices,” the letter reads. “To deploy an under-desk tracking system to the very researchers who regularly expose the perils of these technologies is, at best, an extremely poor look for a university that routinely touts these researchers’ accomplishments.

[…]

Another listening session followed, this time for professors only, at which Luzzi claimed the devices were not subject to IRB approval because “they don’t sense humans in particular – they sense any heat source.” More sensors were removed afterward and arranged into a “public art piece” in the building lobby spelling out “NO!”

[…]

Afterward, von Hippel took to Twitter and shared what became a semi-viral thread documenting the entire timeline of events, from the secret installation of the sensors to the listening session that day. Hours later, the sensors were removed.

[…]

This was a particularly instructive episode because it shows that surveillance need not be permanent: the people affected by it can root it out, together.

[…]

“The most powerful tool at the disposal of graduate students is the ability to strike. Fundamentally, the university runs on graduate students.

[…]

“The computer science department was able to organize quickly because almost everybody is a union member, has signed a card, and are all networked together via the union. As soon as this happened, we communicated over union channels.

[…]

This sort of rapid response is key, especially as more and more institutions adopt sensors for increasingly spurious or concerning reasons. Sensors have been rolled out at other universities, such as Carnegie Mellon University, as well as in public school systems. They have also been used in more militarized and carceral settings, such as at the US-Mexico border and within America’s prison system.

These rollouts are part of what Cory Doctorow calls the “shitty technology adoption curve,” whereby horrible, unethical, and immoral technologies are normalized and rationalized by being deployed on vulnerable populations for constantly shifting reasons. You start with people whose concerns can be ignored (migrants, prisoners, homeless populations), then scale upward to children in school, contractors, and non-unionized workers. By the time the technology reaches people whose objections would be the loudest and most integral to its rejection, it has already been widely deployed.

[…]