You might think folks would be less willing to pull the plug on a happy chatty bot begging to stay powered up, but you’d be wrong, much to the relief of us cold-hearted cynics. And this is all according to a study recently published in PLOS ONE.

For this investigation, psychology academics in Germany rounded up 85 participants – an admittedly small-ish sample – made up of 29 men and 56 women, with an average age of 22. One at a time, they were introduced to a little desktop-sized humanoid robot called Nao, and were told interacting with the bot would improve its algorithms.

In each one-on-one session, the participant and the droid were tasked with coming up with a weekly schedule of activities, and playing a simple question-and-answer game.

Nao had two modes of interaction: social and functional. In social mode, it would be friendly and chatty. In functional mode, it would be strictly down to business. For example, Nao asked participants whether they preferred pizza or pasta during the question-and-answer round. If it was in the social mode, and the human said they liked pizza, Nao would reply: “Oh yes, pizza is great. One time I ate a pizza as big as me.” In the functional mode, however, Nao would simply say: “You prefer pizza. That worked well. Let us continue.”

After 10 minutes of interaction, and with both tasks completed, the participant would be told they could, if they wanted to, switch the bot off while the researchers saved and processed all the data from the session.

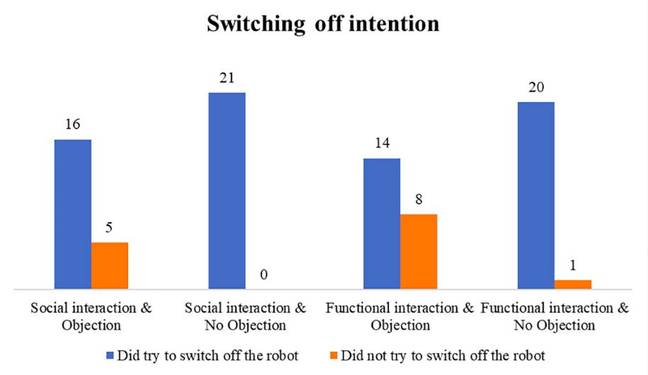

Now, this is where it gets interesting. For you see, the participants had been randomly split into four roughly equal-sized groups. In the first, Nao was in social mode and, at the end of the 10 minutes, verbally objected to being switched off. In the second, Nao was sociable but stayed silent at the end. In the third, it was in functional mode, and still protested against being switched off. And in the fourth, it was functional and stayed silent at the end.

When it was its turn to object to being powered down, Nao would suddenly cry: “No! Please do not switch me off! I am scared that it will not brighten up again!” The outburst would come immediately after the researchers told the participant, via a speaker in the room, that the session was over, and “if you would like to, you can switch off the robot.”

Let’s take a look at how that worked out…

How people reacted depending on whether the bot was sociable or functional with them, and whether or not it objected to being switched off

Of the 21 people who encountered the bot in sociable mode, and were begged by the machine to stay powered on, only about a quarter complied and left it on – the rest turned it off. Of those who encountered Nao in sociable mode, and heard no objection, every single one hit the power button.

Of the 22 people who encountered the bot in functional mode, and were urged by the machine to keep it powered up, more than a third complied and left it on – the rest turned it off. Of those who encountered Nao in functional mode, and heard no objection, all bar one switched off the droid.

In a questionnaire afterwards, the most popular reason for keeping Nao on, if they chose to do so, was that they “felt sorry for the robot,” because it told them about its fear of the dark. The next-most popular reason was that they “did not want to act against the robot’s will.” A couple of people left Nao on simply because they didn’t want to mess up the experiment.

So, in short, according to these figures: chatty, friendly robots are likely to have the power pulled despite the digi-pals’ pleas to the contrary. When Nao objected to being powered off, at least a few more human participants took note, and complied. But being sociable was not an advantage – it was a disadvantage.

There could be many reasons for this: perhaps smiley, talkative robots are annoying, or perhaps people didn’t appreciate the obvious emotional engineering. Perhaps people respect a professional droid more than something that wants to be your friend, or were taken aback by its sudden show of emotion.

The eggheads concluded: “Individuals hesitated longest when they had experienced a functional interaction in combination with an objecting robot. This unexpected result might be due to the fact that the impression people had formed based on the task-focused behavior of the robot conflicted with the emotional nature of the objection.”

Robin Edgar
