Balls of human brain cells linked to a computer have been used to perform a very basic form of speech recognition. The hope is that such systems will use far less energy for AI tasks than silicon chips.

“This is just proof-of-concept to show we can do the job,” says Feng Guo at Indiana University Bloomington. “We do have a long way to go.”

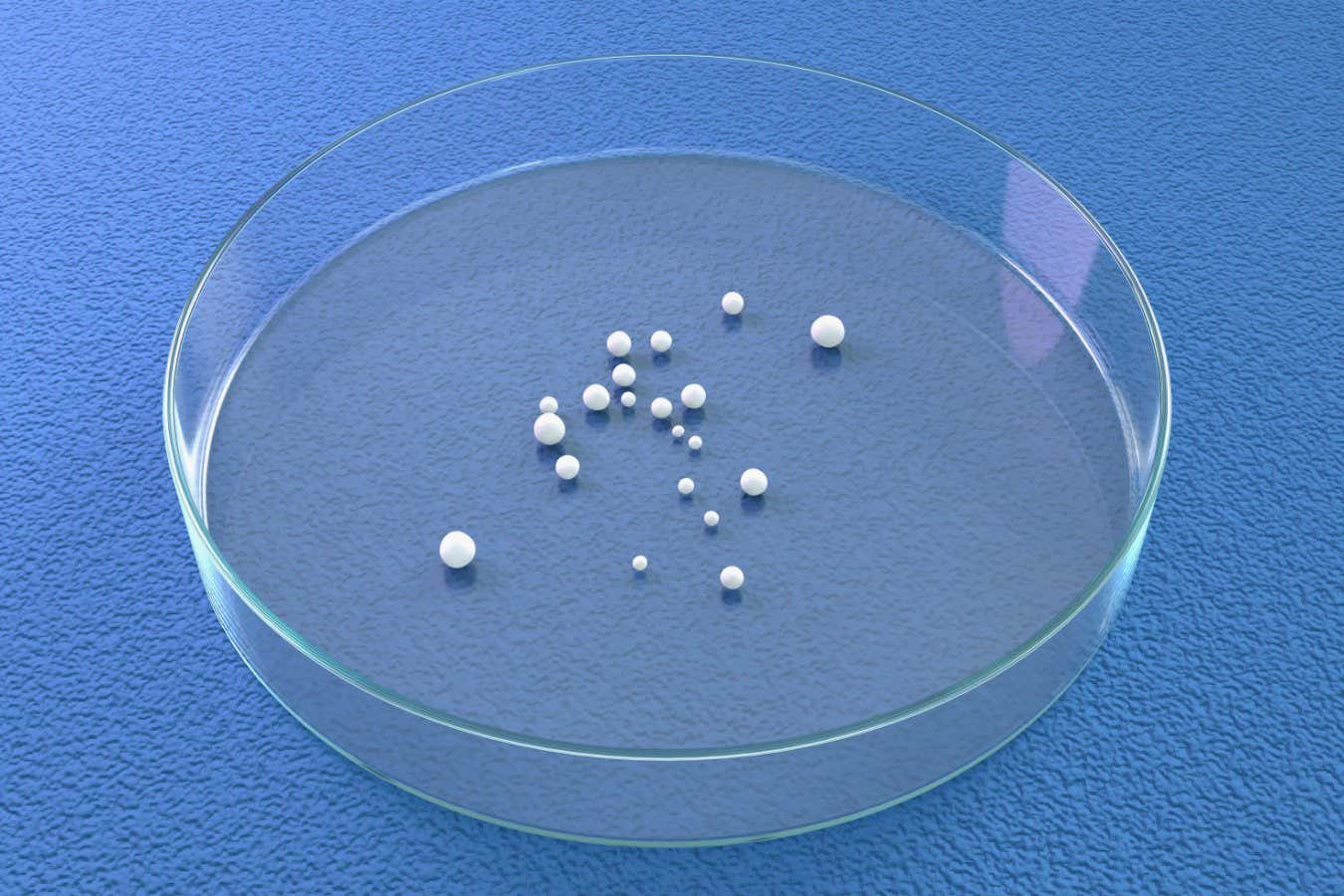

Brain organoids are lumps of nerve cells that form when stem cells are grown in certain conditions. “They are like mini-brains,” says Guo.

It takes two or three months to grow the organoids, which are a few millimetres wide and consist of as many as 100 million nerve cells, he says. Human brains contain around 100 billion nerve cells.

The organoids are then placed on top of a microelectrode array, which is used both to send electrical signals to the organoid and to detect when nerve cells fire in response. The team calls its system “Brainoware”.

New Scientist reported in March that Guo’s team had used this system to try to solve equations known as a Hénon map.
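
For readers unfamiliar with it, the Hénon map is a two-dimensional recurrence, x_{n+1} = 1 - a·x_n² + y_n and y_{n+1} = b·x_n, that behaves chaotically for some parameter values. The Python sketch below simply iterates it. The article does not say which parameters or starting point Guo's team used, so the classic textbook values a = 1.4 and b = 0.3, the starting point (0, 0) and the function name `henon_trajectory` are assumptions for illustration only, not a description of the Brainoware experiment.

```python
def henon_trajectory(steps, a=1.4, b=0.3, x0=0.0, y0=0.0):
    """Iterate the Hénon map and return the visited points.

    The defaults (a=1.4, b=0.3, start at the origin) are the classic
    textbook choices, assumed here because the article gives none.
    The returned list includes the starting point, so it has
    steps + 1 entries.
    """
    points = [(x0, y0)]
    x, y = x0, y0
    for _ in range(steps):
        # Both updates use the previous x, hence the tuple assignment.
        x, y = 1.0 - a * x * x + y, b * x
        points.append((x, y))
    return points


if __name__ == "__main__":
    # Show the first few points of the trajectory.
    for n, (x, y) in enumerate(henon_trajectory(5)):
        print(n, round(x, 4), round(y, 4))
```

Each point depends only on the one before it, yet the sequence is hard to forecast far ahead, which is presumably why predicting it makes a useful benchmark for a learning system.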

For the speech recognition task, the organoids had to learn to recognise the voice of one individual from a set of 240 audio clips of eight people pronouncing Japanese vowel sounds. The clips were sent to the organoids as sequences of signals arranged in spatial patterns.

The organoids’ initial responses had an accuracy of around 30 to 40 per cent, says Guo. After training sessions over two days, their accuracy rose to 70 to 80 per cent.

“We call this adaptive learning,” he says. If the organoids were exposed to a drug that stopped new connections forming between nerve cells, there was no improvement.

The training simply involved repeating the audio clips, and no form of feedback was provided to tell the organoids if they were right or wrong, says Guo. This is what is known in AI research as unsupervised learning.

There are two big challenges with conventional AI, says Guo. One is its high energy consumption. The other is the inherent limitations of silicon chips, such as their separation of information and processing.

Guo’s team is one of several groups exploring whether biocomputing using living nerve cells can help overcome these challenges. For instance, a company called Cortical Labs in Australia has been teaching brain cells how to play Pong, New Scientist revealed in 2021.

Titouan Parcollet at the University of Cambridge, who works on conventional speech recognition, doesn’t rule out a role for biocomputing in the long run.

“However, it might also be a mistake to think that we need something like the brain to achieve what deep learning is currently doing,” says Parcollet. “Current deep-learning models are actually much better than any brain on specific and targeted tasks.”

Guo and his team’s task is so simplified that it only identifies who is speaking, not what is being said, he says. “The results aren’t really promising from the speech recognition perspective.”

Even if the performance of Brainoware can be improved, another major issue with it is that the organoids can only be maintained for one or two months, says Guo. His team is working on extending this.

“If we want to harness the computation power of organoids for AI computing, we really need to address those limitations,” he says.

Source: AI made from living human brain cells performs speech recognition | New Scientist

Yes, this article bangs on about limitations, but this is pretty bizarre science: using a brain to do AI.

Robin Edgar
