Researchers at the University of Chicago’s SAND Lab have developed a technique for tweaking photos of people so that they sabotage facial-recognition systems.

The project, named Fawkes in reference to the mask in the V for Vendetta graphic novel and film depicting 17th-century failed assassin Guy Fawkes, is described in a paper scheduled for presentation in August at the USENIX Security Symposium 2020.

Fawkes consists of software that runs an algorithm designed to “cloak” photos so they mistrain facial recognition systems, rendering them ineffective at identifying the depicted person. These “cloaks,” which AI researchers refer to as perturbations, are claimed to be robust enough to survive subsequent blurring and image compression.

The paper [PDF], titled “Fawkes: Protecting Privacy against Unauthorized Deep Learning Models,” is co-authored by Shawn Shan, Emily Wenger, Jiayun Zhang, Huiying Li, Haitao Zheng, and Ben Zhao, all with the University of Chicago.

“Our distortion or ‘cloaking’ algorithm takes the user’s photos and computes minimal perturbations that shift them significantly in the feature space of a facial recognition model (using real or synthetic images of a third party as a landmark),” the researchers explain in their paper. “Any facial recognition model trained using these images of the user learns an altered set of ‘features’ of what makes them look like them.”
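To make the idea of a bounded shift in feature space concrete, here is a minimal sketch, not the researchers' code. It assumes a toy linear feature extractor (`weights`) in place of a deep face model and a simple per-pixel `budget` in place of whatever perceptual limit the paper uses; the function names and parameters are all hypothetical. It nudges a photo's features toward those of a third-party target using projected gradient descent.

```python
import random

def features(image, weights):
    """Toy linear 'feature extractor': one dot product per feature row."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cloak(image, target_image, weights, budget=0.05, step=0.005, iterations=200):
    """Perturb `image` by at most `budget` per pixel so that its features
    move toward those of `target_image` (illustrative stand-in only)."""
    target_feat = features(target_image, weights)
    delta = [0.0] * len(image)
    for _ in range(iterations):
        cloaked = [p + d for p, d in zip(image, delta)]
        diff = [f - t for f, t in zip(features(cloaked, weights), target_feat)]
        # Gradient of the squared feature distance w.r.t. each pixel: 2 * W^T diff
        grad = [2 * sum(weights[k][i] * diff[k] for k in range(len(weights)))
                for i in range(len(image))]
        # Take a gradient step, then clip the perturbation back into the budget
        delta = [max(-budget, min(budget, d - step * g)) for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(image, delta)]

# Hypothetical data: 16-pixel "photos" and a random 4-feature extractor
random.seed(0)
n_pixels, n_features = 16, 4
weights = [[random.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_features)]
user_photo = [random.random() for _ in range(n_pixels)]
target_photo = [random.random() for _ in range(n_pixels)]

cloaked_photo = cloak(user_photo, target_photo, weights)
before = squared_distance(features(user_photo, weights), features(target_photo, weights))
after = squared_distance(features(cloaked_photo, weights), features(target_photo, weights))
print(f"feature distance to target: {before:.3f} -> {after:.3f}")
```

The clipping step is what keeps the change "minimal": no pixel moves by more than `budget`, yet the features still drift toward the landmark.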

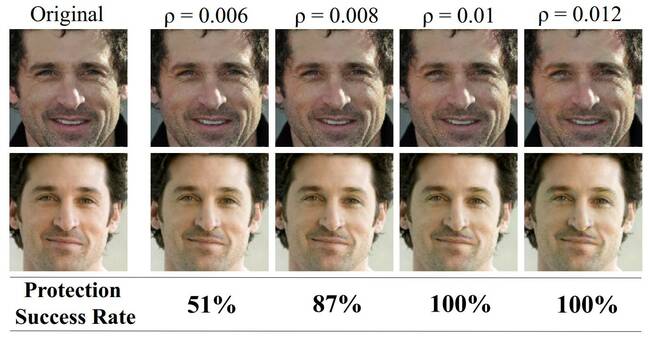

Two examples from the paper showing how different levels of perturbation applied to original photos can derail a facial-recognition system so that future matches are unlikely or impossible. Credit: Shan et al.

The boffins claim their pixel scrambling scheme provides greater than 95 per cent protection, regardless of whether facial recognition systems get trained via transfer learning or from scratch. They also say it provides about 80 per cent protection when clean, “uncloaked” images leak and get added to the training mix alongside altered snapshots.

They claim 100 per cent success at avoiding facial recognition matches using Microsoft’s Azure Face API, Amazon Rekognition, and Face++. Their tests involve cloaking a set of face photos and providing them as training data, then running uncloaked test images of the same person against the mistrained model.
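The test procedure described above (train on cloaked photos, then query with uncloaked ones) can be mimicked with a toy model. The sketch below is an assumption-laden illustration, not how the commercial APIs or the paper's experiments work: it uses a hypothetical nearest-centroid classifier over made-up 2-D "feature" points, and defines a protection rate as the share of uncloaked test photos the model fails to match to the right person.

```python
import random

def centroid(points):
    """Mean of a list of equal-length feature vectors."""
    dims = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dims)]

def nearest_label(model, point):
    """Label whose learned centroid is closest to `point`."""
    return min(model, key=lambda label: sum((a - b) ** 2
                                            for a, b in zip(model[label], point)))

def protection_rate(model, test_points, true_label):
    """Fraction of test photos the model fails to match to `true_label`."""
    misses = sum(1 for p in test_points if nearest_label(model, p) != true_label)
    return misses / len(test_points)

def sample(center, count, rng, spread=0.3):
    """Hypothetical feature vectors scattered around `center`."""
    return [[c + rng.gauss(0, spread) for c in center] for _ in range(count)]

rng = random.Random(1)
alice_true, bob_true, cloak_target = [0.0, 0.0], [3.0, 0.0], [0.0, 5.0]

bob_train = sample(bob_true, 20, rng)
alice_clean_train = sample(alice_true, 20, rng)
# Cloaked photos look like Alice to people but sit near the target in feature space
alice_cloaked_train = sample(cloak_target, 20, rng)
# Uncloaked photos of Alice used at test time
alice_test = sample(alice_true, 20, rng)

clean_model = {"alice": centroid(alice_clean_train), "bob": centroid(bob_train)}
poisoned_model = {"alice": centroid(alice_cloaked_train), "bob": centroid(bob_train)}

clean_rate = protection_rate(clean_model, alice_test, "alice")
poisoned_rate = protection_rate(poisoned_model, alice_test, "alice")
print(f"protection with clean training data:   {clean_rate:.0%}")
print(f"protection with cloaked training data: {poisoned_rate:.0%}")
```

In this toy setup the model trained on cloaked data learns the wrong location for "alice", so her real photos land closer to someone else.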

Fawkes differs from adversarial image attacks in that it tries to poison the AI model itself, so it can’t match people or their images to their cloaked depictions. Adversarial image attacks try to confuse a properly trained model with specific visual patterns.

The researchers have posted their Python code on GitHub, with instructions for users of Linux, macOS, and Windows. Interested individuals may wish to try cloaking publicly posted pictures of themselves so that if the snaps get scraped and used to train a facial recognition system – as Clearview AI is said to have done – the pictures won’t be useful for identifying the people they depict.

Fawkes is similar in some respects to the recent Camera Adversaria project by Kieran Browne, Ben Swift, and Terhi Nurmikko-Fuller at Australian National University in Canberra.

Camera Adversaria adds a pattern known as Perlin noise to images, disrupting the ability of deep learning systems to classify them. It is available as an Android app: a user could take a picture of, say, a pipe and it would not be a pipe to the classifier.
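For readers unfamiliar with Perlin noise, the following is a minimal sketch of classic 2-D gradient noise overlaid on a greyscale image. It is not Camera Adversaria's implementation; the function names, the `cells` grid size, and the `amplitude` value are assumptions chosen for illustration, and the image is a plain list of rows with pixel values between 0 and 1.

```python
import math
import random

def fade(t):
    """Perlin's smoothing curve, which eases interpolation near lattice points."""
    return t * t * t * (t * (t * 6 - 15) + 10)

def lerp(a, b, t):
    return a + t * (b - a)

def make_gradients(cells, rng):
    """One random unit gradient vector per lattice corner."""
    grads = []
    for _ in range(cells + 1):
        row = []
        for _ in range(cells + 1):
            angle = rng.uniform(0, 2 * math.pi)
            row.append((math.cos(angle), math.sin(angle)))
        grads.append(row)
    return grads

def perlin(x, y, grads):
    """Gradient noise at (x, y); values are zero exactly on lattice points."""
    x0, y0 = int(math.floor(x)), int(math.floor(y))

    def corner(ix, iy):
        gx, gy = grads[iy][ix]
        return gx * (x - ix) + gy * (y - iy)

    u, v = fade(x - x0), fade(y - y0)
    top = lerp(corner(x0, y0), corner(x0 + 1, y0), u)
    bottom = lerp(corner(x0, y0 + 1), corner(x0 + 1, y0 + 1), u)
    return lerp(top, bottom, v)

def add_perlin_noise(image, cells=4, amplitude=0.1, seed=0):
    """Return a copy of `image` with smooth noise added, clamped to [0, 1]."""
    grads = make_gradients(cells, random.Random(seed))
    height, width = len(image), len(image[0])
    noisy = []
    for r in range(height):
        row = []
        for c in range(width):
            n = perlin(c * cells / width, r * cells / height, grads)
            row.append(min(1.0, max(0.0, image[r][c] + amplitude * n)))
        noisy.append(row)
    return noisy

# Hypothetical 32x32 mid-grey image
image = [[0.5] * 32 for _ in range(32)]
noisy = add_perlin_noise(image)
```

Unlike per-pixel random static, this noise varies smoothly across the frame, which is why it reads as a soft texture rather than speckle.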

The researchers behind Fawkes say they’re working on macOS and Windows tools that make their system easier to use.