A report (PDF), released today by Big Brother Watch and eight other civil rights groups, has argued that complainants are being subjected to “suspicion-less, far-reaching digital interrogations when they report crimes to police”.

It added: “Our research shows that these digital interrogations have been used almost exclusively for complainants of rape and serious sexual offences so far. But since police chiefs formalised this new approach to victims’ data through a national policy in April 2019, they claim they can also be used for victims and witnesses of potentially any crime.”

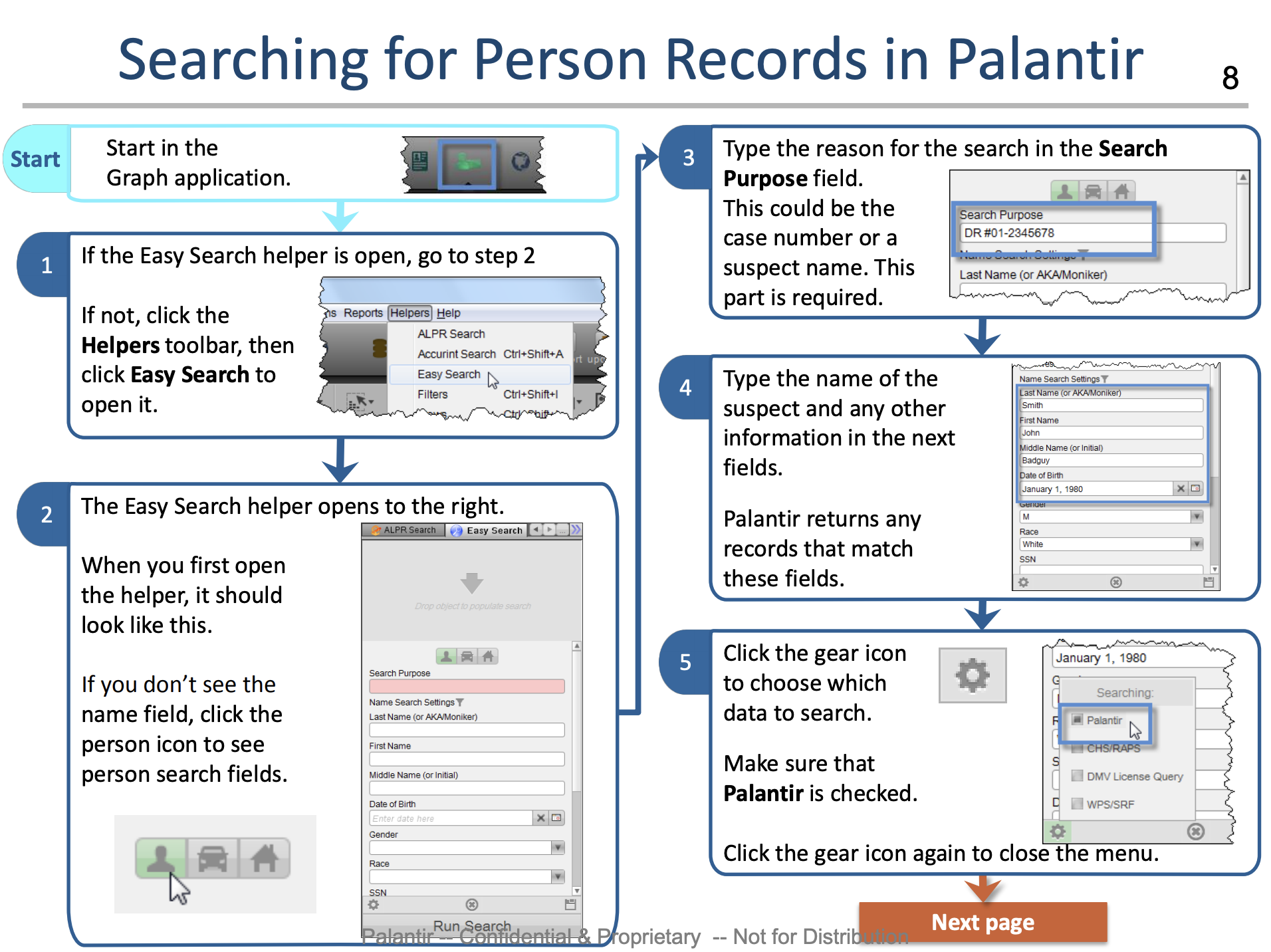

The policy in question concerns the Digital Processing Notices introduced by forces earlier this year. Victims of crime are asked to sign these notices, which allow police to download large amounts of data, potentially spanning years, from their phones. You can see what one of the forms looks like here (PDF).

[…]

The form is 9 pages long and states ‘if you refused permission… it may not be possible for the investigation or prosecution to continue’. Someone in a vulnerable position is unlikely to feel that they have any real choice. This does not constitute informed consent either.

Rape cases dropped over cops’ demands for search

The report described how “Kent Police gave the entire contents of a victim’s phone to the alleged perpetrator’s solicitor, which was then handed to the defendant”. It also outlined a situation where a 12-year-old rape survivor’s phone was trawled, despite a confession from the perpetrator. The child’s case was delayed for months while the Crown Prosecution Service “insisted on an extensive digital review of his personal mobile phone data”.

Another case in the report involved a complainant who reported being attacked by a group of strangers. “Despite being willing to hand over relevant information, police asked for seven years’ worth of phone data, and her case was then dropped after she refused.”

Yet another individual said police had demanded her mobile phone after she was raped by a stranger eight years ago, even after they had identified the attacker using DNA evidence.