As many as 38 percent of the Internet’s domain name lookup servers are vulnerable to a new attack that allows hackers to send victims to maliciously spoofed addresses masquerading as legitimate domains, like bankofamerica.com or gmail.com.

The exploit, unveiled in research presented today, revives the DNS cache-poisoning attack that researcher Dan Kaminsky disclosed in 2008. He showed that, by masquerading as an authoritative DNS server and using it to flood a DNS resolver with fake lookup results for a trusted domain, an attacker could poison the resolver cache with the spoofed IP address. From then on, anyone relying on the same resolver would be diverted to the same imposter site.

A lack of entropy

The sleight of hand worked because DNS at the time relied on a transaction ID to prove the IP number returned came from an authoritative server rather than an imposter server attempting to send people to a malicious site. The transaction number had only 16 bits, which meant that there were only 65,536 possible transaction IDs.

Kaminsky realized that hackers could exploit the lack of entropy by bombarding a DNS resolver with off-path responses that included each possible ID. Once the resolver received a response with the correct ID, the server would accept the malicious IP and store the result in cache so that everyone else using the same resolver—which typically belongs to a corporation, organization, or ISP—would also be sent to the same malicious server.

The threat raised the specter of hackers being able to redirect thousands or millions of people to phishing or malware sites posing as perfect replicas of the trusted domain they were trying to visit. It resulted in industry-wide changes to the domain name system, which acts as a phone book that maps domain names to IP addresses.

Under the new DNS spec, port 53 was no longer the default source port for lookup queries. Instead, those requests were sent from a port randomly chosen from the entire range of available UDP ports. By combining the 16 bits of randomness from the transaction ID with an additional 16 bits of entropy from the source port randomization, there were now roughly 4.3 billion possible combinations, making the attack mathematically infeasible.
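The arithmetic behind that change can be checked in a few lines. The sketch below is illustrative only: it assumes every one of the 65,536 UDP ports is a possible source port, which is an upper bound, since resolvers that draw from a narrower ephemeral range get fewer combinations. The function names are made up for this example.

```python
TXID_BITS = 16  # size of the DNS transaction ID
PORT_BITS = 16  # upper bound: assumes any UDP port may be chosen as the source port


def search_space(bits: int) -> int:
    """Number of distinct values an attacker has to cover for `bits` of entropy."""
    return 2 ** bits


def expected_guesses(bits: int) -> float:
    """Average guesses needed to hit one uniformly random value,
    trying each candidate once in a fixed order."""
    return (search_space(bits) + 1) / 2


txid_only = search_space(TXID_BITS)
txid_and_port = search_space(TXID_BITS + PORT_BITS)

print(txid_only)      # 65536
print(txid_and_port)  # 4294967296
```

Under these assumptions, randomizing the source port multiplies the work of a blind, off-path guesser by 65,536. That is also why leaking the port matters so much: once it is known, only the 16-bit transaction ID is left to guess.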

Unexpected Linux behavior

Now, a research team at the University of California at Riverside has revived the threat. Last year, members of the same team found a side channel in the newer DNS that allowed them to once again infer the transaction number and randomized port number and then send resolvers spoofed IPs.

The research and the SADDNS exploit it demonstrated resulted in industry-wide updates that effectively closed the side channel. Now comes the discovery of new side channels that once again make cache poisoning viable.

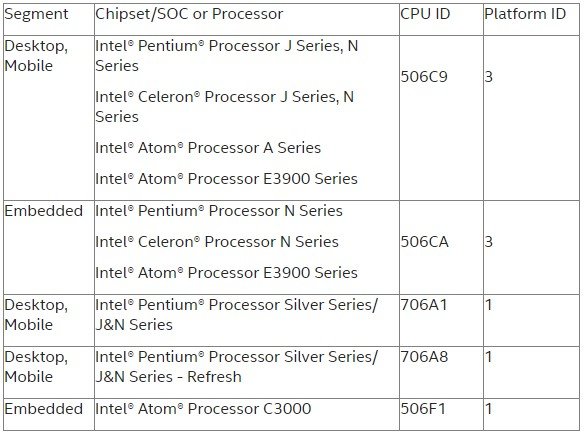

“In this paper, we conduct an analysis of the previously overlooked attack surface, and are able to uncover even stronger side channels that have existed for over a decade in Linux kernels,” researchers Keyu Man, Xin’an Zhou, and Zhiyun Qian wrote in a research paper being presented at the ACM CCS 2021 conference. “The side channels affect not only Linux but also a wide range of DNS software running on top of it, including BIND, Unbound and dnsmasq. We also find about 38% of open resolvers (by frontend IPs) and 14% (by backend IPs) are vulnerable including the popular DNS services such as OpenDNS and Quad9.”

OpenDNS owner Cisco said: “Cisco Umbrella/Open DNS is not vulnerable to the DNS Cache Poisoning Attack described in CVE-2021-20322, and no Cisco customer action is required. We remediated this issue, tracked via Cisco Bug ID CSCvz51632, as soon as possible after receiving the security researcher’s report.” Quad9 representatives weren’t immediately available for comment.

The side channels for the attacks from both last year and this year involve the Internet Control Message Protocol, or ICMP, which is used to send error and status messages between two servers.

“We find that the handling of ICMP messages (a network diagnostic protocol) in Linux uses shared resources in a predictable manner such that it can be leveraged as a side channel,” researcher Qian wrote in an email. “This allows the attacker to infer the ephemeral port number of a DNS query, and ultimately lead to DNS cache poisoning attacks. It is a serious flaw as Linux is most widely used to host DNS resolvers.” He continued:

The ephemeral port is supposed to be randomly generated for every DNS query and unknown to an off-path attacker. However, once the port number is leaked through a side channel, an attacker can then spoof legitimate-looking DNS responses with the correct port number that contain malicious records and have them accepted (e.g., the malicious record can say chase.com maps to an IP address owned by an attacker).

The reason that the port number can be leaked is that the off-path attacker can actively probe different ports to see which one is the correct one, i.e., through ICMP messages that are essentially network diagnostic messages which have unexpected effects in Linux (which is the key discovery of our work this year). Our observation is that ICMP messages can embed UDP packets, indicating a prior UDP packet had an error (e.g., destination unreachable).

We can actually guess the ephemeral port in the embedded UDP packet and package it in an ICMP probe to a DNS resolver. If the guessed port is correct, it causes some global resource in the Linux kernel to change, which can be indirectly observed. This is how the attacker can infer which ephemeral port is used.
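The inference loop Qian describes can be pictured with a toy model. The sketch below is not a model of real Linux internals and sends no packets. `ToyResolver`, its port range, and the boolean standing in for the "global resource" are all hypothetical, chosen only to show how a yes/no observation turns a blind guess into a search.

```python
import random


class ToyResolver:
    """Hypothetical stand-in for a resolver host with one secret ephemeral port."""

    def __init__(self, port_range=range(32768, 61000), seed=None):
        rng = random.Random(seed)
        self.open_port = rng.choice(port_range)  # secret from the attacker
        self.shared_state_changed = False        # stands in for the shared kernel resource

    def receive_probe(self, guessed_port: int) -> None:
        # In this toy, only a probe that names the port actually in use
        # touches the shared state.
        if guessed_port == self.open_port:
            self.shared_state_changed = True

    def observe_and_reset(self) -> bool:
        # The attacker's indirect observation of the shared state.
        changed = self.shared_state_changed
        self.shared_state_changed = False
        return changed


def infer_port(resolver: ToyResolver, candidates):
    """Probe each candidate port and return the one that changes the shared state."""
    for port in candidates:
        resolver.receive_probe(port)
        if resolver.observe_and_reset():
            return port
    return None


resolver = ToyResolver(seed=1)
print(infer_port(resolver, range(32768, 61000)) == resolver.open_port)  # True
```

The point of the toy is the cost. Finding the port takes at most one probe per candidate, so it becomes a linear search rather than part of a combined guess with the transaction ID.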

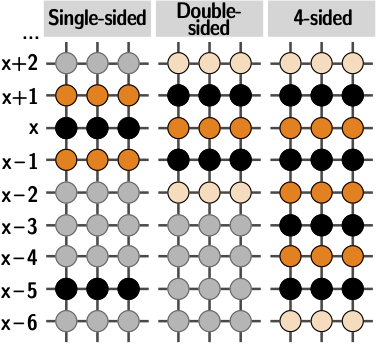

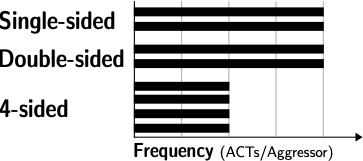

Changing internal state with ICMP probes

The side channel last time around was the rate limit for ICMP. To conserve bandwidth and computing resources, servers will respond to only a set number of requests and then fall silent, and the SADDNS exploit used that limit to infer which port was in use. But whereas last year’s port inference method sent UDP probes designed to solicit ICMP responses, the attack this time uses ICMP probes directly.
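A rate limit can leak information because it is a budget shared by everyone talking to the host. The toy model below sketches that idea under stated assumptions: the budget of 50 replies per window is illustrative, the class and function names are hypothetical, and nothing here touches the network. The attacker's probes are assumed to carry a spoofed source address, so only the final verification probe produces a reply the attacker can see.

```python
class ToyRateLimitedHost:
    """Hypothetical host that sends at most `budget` ICMP error replies per window."""

    def __init__(self, open_ports, budget=50):
        self.open_ports = set(open_ports)
        self.budget = budget
        self.tokens = budget

    def new_window(self) -> None:
        self.tokens = self.budget

    def udp_probe(self, port: int) -> bool:
        """Return True if the host sends an ICMP 'port unreachable' reply."""
        if port in self.open_ports:
            return False  # open port: no ICMP error is generated
        if self.tokens == 0:
            return False  # limit reached: the host falls silent
        self.tokens -= 1
        return True


def batch_contains_open_port(host, batch, known_closed_port) -> bool:
    """Probe exactly `budget` ports, then check whether any reply budget is left."""
    if len(batch) != host.budget:
        raise ValueError("batch must contain exactly `budget` ports")
    host.new_window()
    for port in batch:
        host.udp_probe(port)  # replies go to the spoofed address, unseen by the attacker
    # Verification probe from the attacker's own address: a reply means at least
    # one probe in the batch consumed no budget, i.e. it hit an open port.
    return host.udp_probe(known_closed_port)


host = ToyRateLimitedHost(open_ports={40123})
print(batch_contains_open_port(host, list(range(40100, 40150)), 1))  # True
print(batch_contains_open_port(host, list(range(40200, 40250)), 1))  # False
```

In this model, a single observable reply tells the attacker whether any of 50 ports is open, which is what makes scanning a large port range practical.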

“According to the RFC (standards), ICMP packets are only supposed to be generated *in response* to something,” Qian added. “They themselves should never *solicit* any responses, which means they are ill-suited for port scans (because you don’t get any feedback). However, we find that ICMP probes can actually change some internal state that can actually be observed through a side channel, which is why the whole attack is novel.”

The researchers have proposed several defenses to prevent their attack. One is setting proper socket options such as IP_PMTUDISC_OMIT, which instructs an operating system to ignore ICMP “fragmentation needed” messages, effectively closing the side channel. The downside is that such messages are sometimes legitimate, and they will be ignored as well.
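For readers who want to see what setting that socket option looks like, here is a minimal sketch for a UDP socket on Linux. It is not taken from the researchers' paper or from any DNS server's code. The numeric fallbacks (10 and 5) are assumed from Linux's `<linux/in.h>` for Python builds that don't export the names, and `omit_pmtu_discovery` is a made-up helper.

```python
import socket
import sys

# Fallback values assumed from Linux's <linux/in.h>; used only when this
# Python build does not export the constants by name.
IP_MTU_DISCOVER = getattr(socket, "IP_MTU_DISCOVER", 10)
IP_PMTUDISC_OMIT = getattr(socket, "IP_PMTUDISC_OMIT", 5)


def omit_pmtu_discovery(sock: socket.socket) -> bool:
    """Try to set IP_PMTUDISC_OMIT on `sock`; return True on success.

    Returns False on non-Linux platforms, where the numeric values above
    may mean something else, and on kernels that reject the option.
    """
    if not sys.platform.startswith("linux"):
        return False
    try:
        sock.setsockopt(socket.IPPROTO_IP, IP_MTU_DISCOVER, IP_PMTUDISC_OMIT)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        print("IP_PMTUDISC_OMIT set:", omit_pmtu_discovery(s))
```

The option applies per socket, so a resolver would need to set it on the sockets it uses for outgoing queries. Whether and how a given DNS package does so is outside the scope of this sketch.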

Another proposed defense is randomizing the caching structure to make the side channel unusable. A third is to reject ICMP redirects.

The vulnerability affects DNS software, including BIND, Unbound, and dnsmasq, when it runs on Linux. The researchers tested whether DNS software was vulnerable when running on either Windows or FreeBSD and found no evidence it was. Since macOS uses the FreeBSD network stack, they assume it isn’t vulnerable either.