Apple and the satellite-based broadband service Starlink each recently took steps to address new research into the potential security and privacy implications of how their services geolocate devices. Researchers from the University of Maryland say they relied on publicly available data from Apple to track the location of billions of devices globally, including non-Apple devices like Starlink systems, and found they could use this data to monitor the destruction of Gaza, as well as the movements and, in many cases, the identities of Russian and Ukrainian troops.

At issue is the way that Apple collects and publicly shares information about the precise location of all Wi-Fi access points seen by its devices. Apple collects this location data to give Apple devices a crowdsourced, low-power alternative to constantly requesting global positioning system (GPS) coordinates.

Both Apple and Google operate their own Wi-Fi-based Positioning Systems (WPS) that obtain certain hardware identifiers from all wireless access points that come within range of their mobile devices. Both record the Media Access Control (MAC) address that a Wi-Fi access point uses, known as a Basic Service Set Identifier or BSSID.

Periodically, Apple and Google mobile devices will forward their locations — by querying GPS and/or by using cellular towers as landmarks — along with any nearby BSSIDs. This combination of data allows Apple and Google devices to figure out where they are within a few feet or meters, and it’s what allows your mobile phone to continue displaying your planned route even when the device can’t get a fix on GPS.

[…]

In essence, Google’s WPS computes the user’s location and shares it with the device. Apple’s WPS gives its devices a large enough amount of data about the location of known access points in the area that the devices can do that estimation on their own.

That’s according to two researchers at the University of Maryland, who theorized they could use the verbosity of Apple’s API to map the movement of individual devices into and out of virtually any defined area of the world. The UMD pair said they spent a month early in their research continuously querying the API, asking it for the location of more than a billion BSSIDs generated at random.
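To make "BSSIDs generated at random" concrete, here is a minimal Python sketch that draws uniform 48-bit values and formats them as colon-separated MAC-style addresses. The function name `random_bssid` is hypothetical, the sketch contacts no service, and it is only an assumption about what a random guess could look like, not a description of the researchers' actual tooling.

```python
import random


def random_bssid(rng: random.Random) -> str:
    """Return a uniformly random 48-bit value formatted like a BSSID."""
    value = rng.getrandbits(48)          # a BSSID is a 48-bit MAC address
    octets = value.to_bytes(6, "big")    # split into six octets
    return ":".join(f"{octet:02x}" for octet in octets)


# Seeded only so the illustration is repeatable.
rng = random.Random(0)
guesses = [random_bssid(rng) for _ in range(5)]
for guess in guesses:
    print(guess)
```

Each guess is just a candidate identifier; whether it corresponds to a real access point is unknown until something looks it up.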

They learned that while only about three million of those randomly generated BSSIDs were known to Apple’s Wi-Fi geolocation API, Apple also returned an additional 488 million BSSID locations already stored in its WPS from other lookups.
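A quick back-of-the-envelope calculation shows why the extra results mattered so much. The sketch below uses the rounded figures quoted above (one billion guesses, three million hits, 488 million additional locations); the article gives these only approximately, so the outputs are rough estimates.

```python
# Rounded figures from the article; all three are approximations.
random_guesses = 1_000_000_000      # "more than a billion" BSSIDs queried
known_guesses = 3_000_000           # "about three million" were known to Apple
additional_locations = 488_000_000  # extra BSSID locations returned

hit_rate = known_guesses / random_guesses
extra_per_hit = additional_locations / known_guesses

print(f"Share of guesses that were known: {hit_rate:.1%}")
print(f"Additional locations per known guess: {extra_per_hit:.0f}")
```

In other words, roughly 0.3 percent of guesses landed, but each one that did brought back on the order of 160 additional access point locations.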

[…]

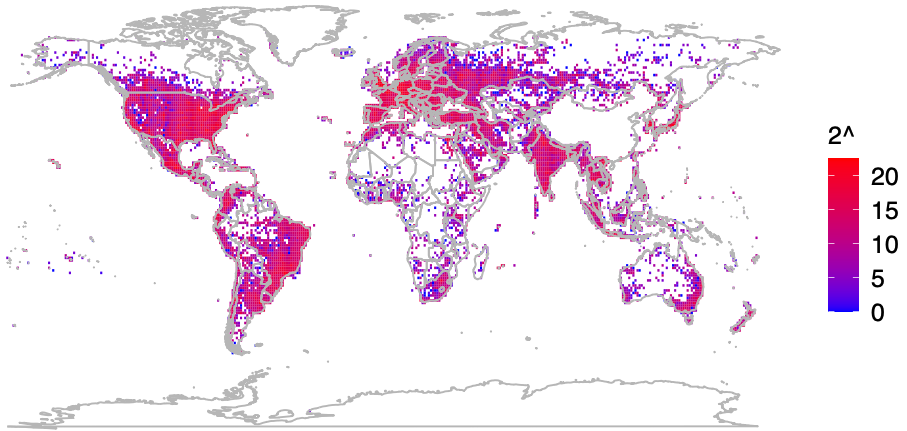

Plotting the locations returned by Apple’s WPS between November 2022 and November 2023, Levin and Rye saw they had a near global view of the locations tied to more than two billion Wi-Fi access points. The map showed geolocated access points in nearly every corner of the globe, apart from almost the entirety of China, vast stretches of desert wilderness in central Australia and Africa, and deep in the rainforests of South America.

A “heatmap” of BSSIDs the UMD team said they discovered by guessing randomly at BSSIDs.

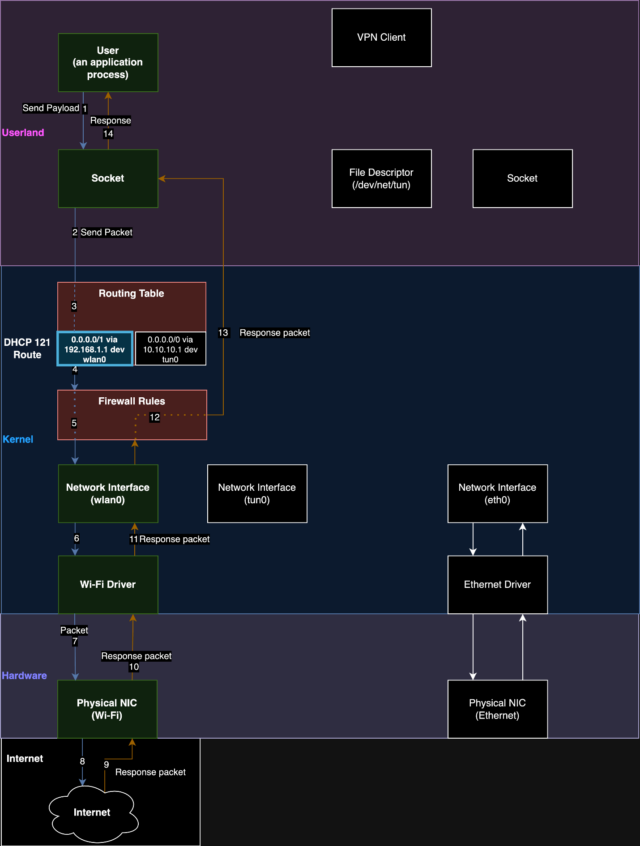

The researchers said that by zeroing in on or “geofencing” other smaller regions indexed by Apple’s location API, they could monitor how Wi-Fi access points moved over time. Why might that be a big deal? They found that by geofencing active conflict zones in Ukraine, they were able to determine the location and movement of Starlink devices used by both Ukrainian and Russian forces.

The reason they were able to do that is that each Starlink terminal — the dish and associated hardware that allows a Starlink customer to receive Internet service from a constellation of orbiting Starlink satellites — includes its own Wi-Fi access point, whose location is going to be automatically indexed by any nearby Apple devices that have location services enabled.

A heatmap of Starlink routers in Ukraine. Image: UMD.

The University of Maryland team geofenced various conflict zones in Ukraine and identified at least 3,722 Starlink terminals geolocated there.
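Geofencing in this sense can be as simple as keeping only the records whose coordinates fall inside a chosen region. The Python sketch below assumes a rectangular latitude/longitude box that does not cross the antimeridian; the `BoundingBox` class, the `geofence` function, and the sample BSSIDs and coordinates are all made up for illustration and are not taken from the research.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """A rectangular region in decimal degrees (hypothetical helper)."""
    south: float
    west: float
    north: float
    east: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.south <= lat <= self.north and self.west <= lon <= self.east


def geofence(records: dict, box: BoundingBox) -> dict:
    """Keep only the BSSID -> (lat, lon) entries located inside the box."""
    return {bssid: pos for bssid, pos in records.items() if box.contains(*pos)}


# Made-up sample data: three access points, two of them inside the box.
records = {
    "02:00:00:00:00:01": (10.5, 20.5),
    "02:00:00:00:00:02": (10.9, 20.1),
    "02:00:00:00:00:03": (12.0, 20.5),
}
inside = geofence(records, BoundingBox(south=10.0, west=20.0, north=11.0, east=21.0))
print(sorted(inside))
```

Repeating the same filter on snapshots taken at different times is what turns a static map into a record of which access points entered or left the region.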

“We find what appear to be personal devices being brought by military personnel into war zones, exposing pre-deployment sites and military positions,” the researchers wrote. “Our results also show individuals who have left Ukraine to a wide range of countries, validating public reports of where Ukrainian refugees have resettled.”

[…]

The researchers also focused their geofencing on the Israel-Hamas war in Gaza, and were able to track the migration and disappearance of devices throughout the Gaza Strip as Israeli forces cut power to the territory and bombing campaigns knocked out key infrastructure.

“As time progressed, the number of Gazan BSSIDs that are geolocatable continued to decline,” they wrote. “By the end of the month, only 28% of the original BSSIDs were still found in the Apple WPS.”
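A figure like "28% of the original BSSIDs" is a retention rate: the share of identifiers in a baseline snapshot that still appear in a later one. The following Python sketch shows that calculation under the assumption that each snapshot is simply a set of BSSID strings; the `retention` function and the sample values are hypothetical.

```python
def retention(baseline: set, later: set) -> float:
    """Fraction of baseline BSSIDs that are still present in a later snapshot."""
    if not baseline:
        raise ValueError("baseline snapshot must not be empty")
    return len(baseline & later) / len(baseline)


# Made-up snapshots: one of four original BSSIDs survives; a new one appears.
start_of_month = {"02:00:00:00:00:01", "02:00:00:00:00:02",
                  "02:00:00:00:00:03", "02:00:00:00:00:04"}
end_of_month = {"02:00:00:00:00:01", "02:00:00:00:00:09"}

print(f"{retention(start_of_month, end_of_month):.0%} of original BSSIDs remain")
```

BSSIDs that appear only in the later snapshot are ignored, since the measure tracks what happened to the original population.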

In late March 2024, Apple quietly updated its website to note that anyone can opt out of having the location of their wireless access points collected and shared by Apple — by appending “_nomap” to the end of the Wi-Fi access point’s name (SSID). Adding “_nomap” to your Wi-Fi network name also blocks Google from indexing its location.
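For anyone renaming a network to opt out, the practical constraint is that an SSID can be at most 32 bytes long, so the suffix has to fit. The Python sketch below appends "_nomap" and checks that limit; the `opt_out_ssid` helper is hypothetical, and measuring the length as UTF-8 is an assumption about how the name is encoded.

```python
NOMAP_SUFFIX = "_nomap"
MAX_SSID_BYTES = 32  # maximum SSID length permitted by the Wi-Fi standard


def opt_out_ssid(ssid: str) -> str:
    """Return the SSID with the opt-out suffix, leaving it alone if present."""
    if ssid.endswith(NOMAP_SUFFIX):
        return ssid
    candidate = ssid + NOMAP_SUFFIX
    # Assumption: the name is stored as UTF-8 when measuring its length.
    if len(candidate.encode("utf-8")) > MAX_SSID_BYTES:
        raise ValueError("SSID too long to append the opt-out suffix")
    return candidate


print(opt_out_ssid("HomeNet"))
```

The suffix must come at the very end of the name, so a network called "HomeNet_nomap_5G" would not count as opted out under this rule.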

[…]

Rye said Apple’s response addressed the most depressing aspect of their research: That there was previously no way for anyone to opt out of this data collection.

“You may not have Apple products, but if you have an access point and someone near you owns an Apple device, your BSSID will be in [Apple’s] database,” he said. “What’s important to note here is that every access point is being tracked, without opting in, whether they run an Apple device or not. Only after we disclosed this to Apple have they added the ability for people to opt out.”

The researchers said they hope Apple will consider additional safeguards, such as proactive ways to limit abuses of its location API.

[…]

“We observe routers move between cities and countries, potentially representing their owner’s relocation or a business transaction between an old and new owner,” they wrote. “While there is not necessarily a 1-to-1 relationship between Wi-Fi routers and users, home routers typically only have several. If these users are vulnerable populations, such as those fleeing intimate partner violence or a stalker, their router simply being online can disclose their new location.”
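Spotting a router that has moved comes down to comparing two location observations for the same BSSID. The Python sketch below uses the standard haversine formula for great-circle distance; the `moved` helper and its 1 km threshold are assumptions chosen for illustration, not values from the research.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def moved(before: tuple, after: tuple, threshold_km: float = 1.0) -> bool:
    """True if two (lat, lon) observations differ by more than the threshold."""
    return haversine_km(*before, *after) > threshold_km


# Made-up observations of one BSSID at two points in time.
print(moved((0.0, 0.0), (0.0, 1.0)))    # about 111 km apart
print(moved((0.0, 0.0), (0.0, 0.001)))  # about 0.1 km apart
```

A threshold is needed because crowdsourced positions are estimates, and small shifts between observations do not necessarily mean the router was relocated.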

The researchers said Wi-Fi hotspots created with a mobile device’s built-in cellular modem do not create a location privacy risk for their users, because mobile phone hotspots choose a random BSSID each time they are activated.
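Randomly chosen MAC addresses are conventionally flagged by setting the "locally administered" bit in the first octet, which distinguishes them from addresses assigned by a manufacturer. The Python sketch below checks that bit; the `is_locally_administered` helper is hypothetical, and treating the bit as a sign of a randomized hotspot BSSID is a heuristic assumption rather than something the researchers are quoted as doing.

```python
def is_locally_administered(bssid: str) -> bool:
    """True if the locally administered bit (0x02) is set in the first octet."""
    first_octet = int(bssid.split(":")[0], 16)
    return bool(first_octet & 0x02)


# Made-up example addresses.
print(is_locally_administered("02:00:00:00:00:01"))  # bit set
print(is_locally_administered("00:11:22:33:44:55"))  # bit clear
```

Because such an address changes whenever a new one is picked, a location recorded for the old value says nothing about where the device is afterward.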

[…]

For example, they discovered that certain commonly used travel routers compound the potential privacy risks.

“Because travel routers are frequently used on campers or boats, we see a significant number of them move between campgrounds, RV parks, and marinas,” the UMD duo wrote. “They are used by vacationers who move between residential dwellings and hotels. We have evidence of their use by military members as they deploy from their homes and bases to war zones.”

A copy of the UMD research is available here (PDF).