Here’s something the public didn’t know until today: If one of the U.S. military’s new F-35 stealth fighters has to climb at a steep angle in order to dodge an enemy attack, design flaws mean the plane might suddenly tumble out of control and crash.

Also, some versions of the F-35 can’t accelerate to supersonic speed without melting their own tails or shedding the expensive coating that helps to give the planes their radar-evading qualities.

The Pentagon’s $400-billion F-35 Joint Strike Fighter program, one of the biggest and most expensive weapons programs in history, has come under fire, so to speak, over more than a decade for delays, rising costs, design problems and technical glitches.

But startling reports by trade publication Defense News on Wednesday revealed flaws that previously only builder Lockheed Martin, the military, and the plane’s foreign buyers knew about.

[…]

The test reports Defense News obtained also reveal a second, previously little-known Category 1 deficiency in the F-35B and F-35C aircraft. If during a steep climb the fighters exceed a 20-degree “angle of attack” (the angle between the wing and the oncoming airflow), they could become unstable and potentially uncontrollable.

To prevent a possible crash, pilots must avoid steep climbs and other hard maneuvers. “Fleet pilots agreed it is very difficult to max perform the aircraft” in those circumstances, Defense News quoted the documents as saying.

Source: America Is Stuck With a $400 Billion Stealth Fighter That Can’t Fight

Add a gun that can’t shoot straight to the problems that dog Lockheed Martin Corp.’s $428 billion F-35 program, including more than 800 software flaws.

The 25mm gun on Air Force models of the Joint Strike Fighter has “unacceptable” accuracy in hitting ground targets and is mounted in housing that’s cracking, the Pentagon’s test office said in its latest assessment of the costliest U.S. weapons system.

The annual assessment by Robert Behler, the Defense Department’s director of operational test and evaluation, doesn’t disclose any major new failings in the plane’s flying capabilities. But it flags a long list of issues that his office said should be resolved — including 13 described as Category 1 “must-fix” items that affect safety or combat capability — before the F-35’s upcoming $22 billion Block 4 phase.

The number of software deficiencies totaled 873 as of November, according to the report obtained by Bloomberg News in advance of its release as soon as Friday. That’s down from 917, including 15 Category 1 items, in September 2018, when the jet entered the intense combat testing required before full production. What was to be a year of testing has now been extended another year, until at least October.

“Although the program office is working to fix deficiencies, new discoveries are still being made, resulting in only a minor decrease in the overall number” and leaving “many significant” ones to address, the assessment said.

Cybersecurity ‘Vulnerabilities’

In addition, the test office said cybersecurity “vulnerabilities” that it identified in previous reports haven’t been resolved. The report also cites issues with reliability, aircraft availability and maintenance systems.

The assessment doesn’t deal with findings that are emerging in the current round of combat testing, which will include 64 exercises in a high-fidelity simulator designed to replicate the most challenging Russian, Chinese, North Korean and Iranian air defenses.

Despite the incomplete testing and unresolved flaws, Congress continues to accelerate F-35 purchases, adding 11 jets to the Pentagon’s request in both 2016 and 2017, 20 in fiscal 2018, 15 last year and 20 this year. The F-35 continues to attract new international customers such as Poland and Singapore. Japan is the biggest foreign customer, followed by Australia and the U.K.

[…]

Brett Ashworth, a spokesman for Bethesda, Maryland-based Lockheed, said that “although we have not seen the report, the F-35 continues to mature and is the most lethal, survivable and connected fighter in the world.” He said “reliability continues to improve, with the global fleet averaging greater than 65% mission capable rates and operational units consistently performing near 75%.”

Still, the testing office said “no significant portion” of the U.S.’s F-35 fleet “was able to achieve and sustain” a September 2019 goal mandated by then-Defense Secretary Jim Mattis: that the aircraft be able to perform at least one type of combat mission 80% of the time. That target is known as the “Mission Capable” rate.

“However, individual units were able to achieve the 80% target for short periods during deployed operations,” the report said. All the aircraft models lagged “by a large margin” behind the more demanding goal of “Full Mission Capability.”

The Air Force’s F-35 model had the best rate at being fully mission capable, while the Navy’s fleet “suffered from a particularly poor” rate, the test office said. The Marine Corps version was “roughly midway” between the other two.

[…]

The Air Force model’s gun is mounted inside the plane, and the test office “considers the accuracy, as installed, unacceptable” due to “misalignments” in the gun’s mount that didn’t meet specifications.

The mounts are also cracking, forcing the Air Force to restrict the gun’s use.

Source: F-35’s Gun That Can’t Shoot Straight Adds to Its Roster of Flaws – Bloomberg

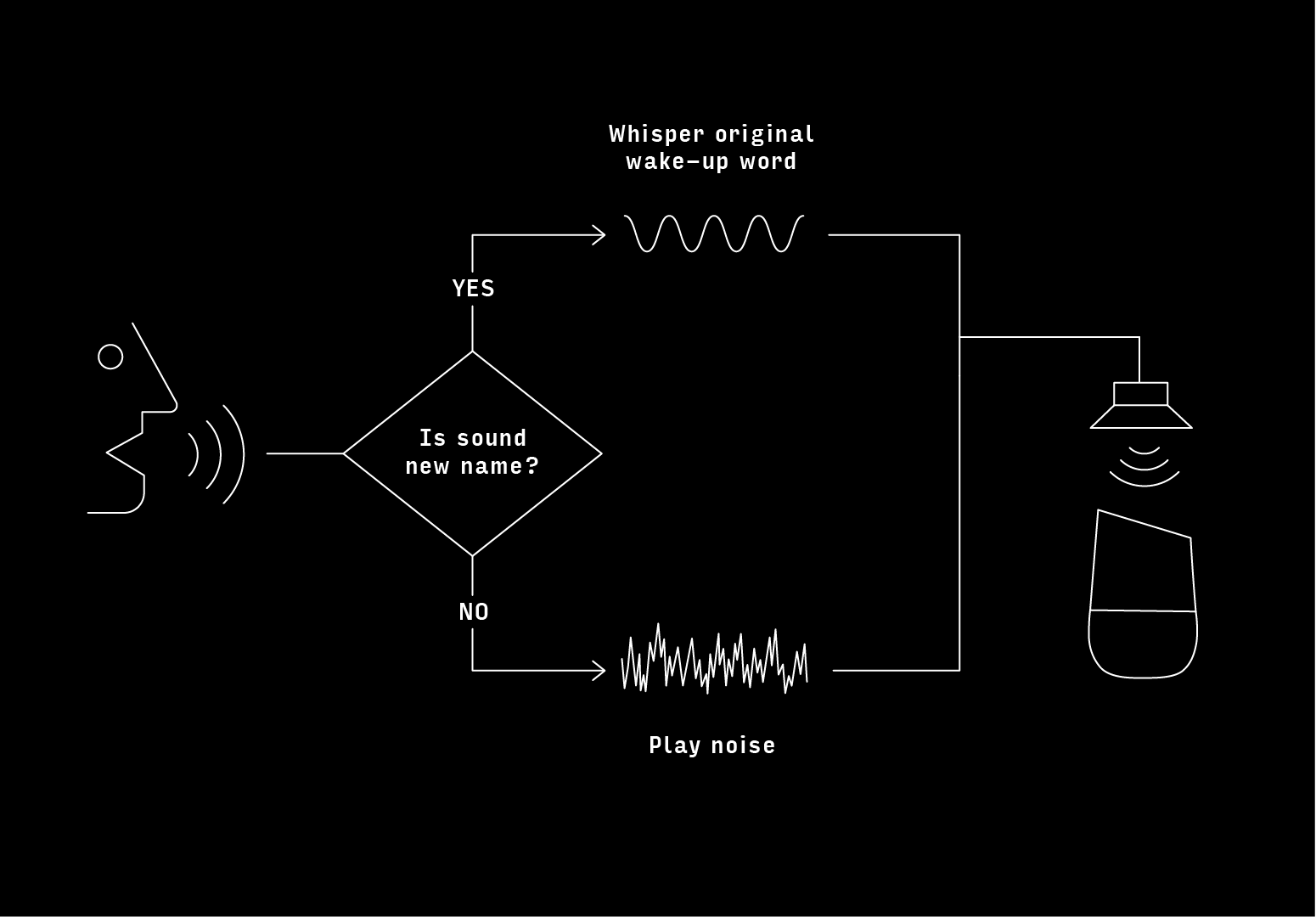

The F-35’s problematic Autonomic Information Logistics System, or ALIS, will be replaced starting later this year by a new system that is meant to be more user-friendly, more secure, and less prone to error. The new system will also carry a new name: ODIN, for Operational Data Integrated Network.

ODIN “incorporates a new integrated data environment,” according to the F-35 Joint Program Office, which put out a release about the change Jan. 21, just a few days after Pentagon acquisition and sustainment czar Ellen Lord told reporters about it outside a Capitol Hill hearing. The system will be “a significant step forward to improve the F-35 fleet’s sustainment and readiness performance,” the JPO said. ODIN is intended to reduce operator and administrator workload, increase F-35 mission readiness rates, and “allow software designers to rapidly develop and deploy updates in response” to operator needs.

The first “ODIN-enabled” hardware will be delivered to the various F-35 fleets late in 2020, with full operational capability planned by December 2022, the JPO said, “pending coordination with user deployment schedules.” Some ALIS systems being used on aircraft carriers or with deployed units at that time may not get ODIN until they return.

ALIS is the vast information-gathering system that tracks F-35 data in flight, relaying to maintainers on the ground the performance of various systems in near-real time. It’s meant to predict part failures and otherwise keep maintainers abreast of the health of each individual F-35. By amassing these data centrally for the worldwide F-35 fleet, prime contractor Lockheed Martin expected to better manage spare parts production, detect trends in performance glitches and the longevity of parts, and determine optimum schedules for servicing various elements of the F-35 engine and airframe. However, the system was plagued by false alarms, which led to unnecessary maintenance actions, as well as by laborious data-entry requirements and clumsy interfaces. The system also took a long time to boot up and to update, and the tablets used by maintainers were perpetually behind the commercial state of the art.

[…]

The Government Accountability Office published a number of reports faulting ALIS for adding unnecessary man-hours and complexity to the F-35 enterprise, saying in a November 2019 report that USAF maintainers in just one unit reported “more than 45,000 hours per year performing additional tasks and manual workarounds because ALIS was not functioning” the way it was supposed to.

Early versions of ALIS also proved vulnerable to hacking and data theft, another reason for overhauling the system to meet new cybersecurity needs.

Source: F-35 Program Dumps ALIS for ODIN – Air Force Mag