As reporters raced this summer to bring new details of Ring’s law enforcement contracts to light, the home security company, acquired last year by Amazon for a whopping $1 billion, strove to underscore the privacy it had pledged to provide users.

Even as it pursued its creeping objective of making an ever-expanding network of home security devices indispensable to daily police work, Ring promised its customers would always have a choice in “what information, if any, they share with law enforcement.” While it quietly toiled to minimize what police officials could reveal to the public about Ring’s police partnerships, it vigorously reinforced its obligation to the privacy of its customers, and to the users of its crime-alert app, Neighbors.

However, a Gizmodo investigation, which began last month and ultimately revealed the potential locations of up to tens of thousands of Ring cameras, has cast new doubt on the effectiveness of the company’s privacy safeguards. It further offers one of the most “striking” and “disturbing” glimpses yet, privacy experts said, of Amazon’s privately run, omni-surveillance shroud that’s enveloping U.S. cities.

[…]

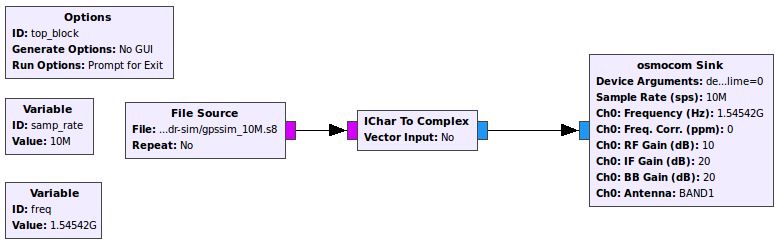

Over the past month, Gizmodo has acquired data connected to nearly 65,800 individual posts shared by users of the Neighbors app. The posts, which reach back 500 days from the point of collection, offer extraordinary insight into the proliferation of Ring video surveillance across American neighborhoods and raise important questions about the privacy trade-offs of a consumer-driven network of surveillance cameras controlled by one of the world’s most powerful corporations.

And not just for those whose faces have been recorded.

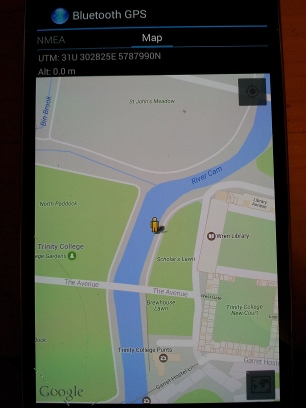

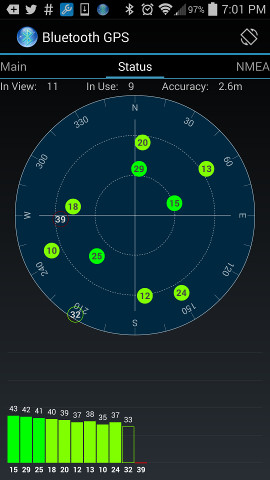

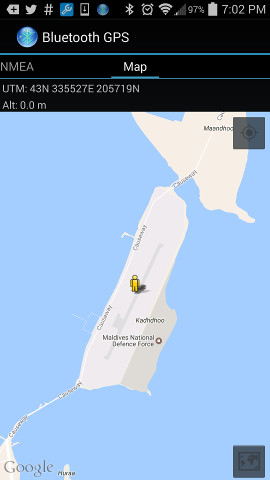

Examining the network traffic of the Neighbors app produced unexpected data, including hidden geographic coordinates connected to each post: latitude and longitude with up to six decimal places of precision, fine enough to pinpoint a patch of ground only a few inches across.
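To give a sense of the scale involved, the sketch below converts a single step of 0.000001 degrees into a distance on the ground. It assumes a spherical Earth with a mean radius of 6,371 km; the function name and the example latitude (roughly that of Washington, DC) are illustrative and are not drawn from the Neighbors data.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (spherical approximation)


def ground_resolution_m(decimals: int, latitude_deg: float) -> tuple[float, float]:
    """Return the (north-south, east-west) size in meters of one coordinate step.

    `decimals` is the number of decimal places in the coordinate;
    `latitude_deg` is where the point sits, since east-west steps shrink
    toward the poles.
    """
    step_rad = math.radians(10 ** -decimals)
    north_south = EARTH_RADIUS_M * step_rad
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


# Assumed example latitude: about 38.9 degrees north.
ns, ew = ground_resolution_m(6, 38.9)
print(f"{ns * 100:.1f} cm north-south, {ew * 100:.1f} cm east-west")
```

Under these assumptions, one step in the sixth decimal place works out to roughly 11 centimeters north to south, and somewhat less east to west at mid-latitudes. This describes the precision of the stored numbers, not necessarily the accuracy of the location they record.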

[…]

Guariglia and other surveillance experts told Gizmodo that the ubiquity of the devices gives rise to fears that pedestrians are being recorded strolling in and out of “sensitive buildings,” including certain medical clinics, law offices, and foreign consulates. “I think this is my big concern,” he said, seeing the maps.

Indeed, Gizmodo located cameras in unnerving proximity to such sensitive buildings, including a clinic offering abortion services and a legal office that handles immigration and refugee cases.

It is possible to acquire Neighbors posts from anywhere in the country, in near real time, and sort them in any number of ways. Nearly 4,000 posts, for example, reference children, teens, or young adults; two purportedly involve people having sex; eight mention Immigration and Customs Enforcement; and more than 3,600 mention dogs, cats, coyotes, turkeys, or turtles.
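Sorting posts this way can be as simple as keyword matching. The sketch below is a minimal illustration and not a description of Gizmodo's actual method: the sample posts, the `text` field name, and the keyword groups are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical keyword groups; the search terms actually used are not given here.
KEYWORDS = {
    "animals": ["dog", "cat", "coyote", "turkey", "turtle"],
    "ice": ["immigration and customs enforcement"],
}


def tally_posts(posts: list[dict], keywords: dict[str, list[str]]) -> Counter:
    """For each group, count the posts that mention at least one of its terms."""
    counts = Counter()
    for post in posts:
        text = post.get("text", "").lower()
        for group, terms in keywords.items():
            # \b keeps "cat" from matching "location"; s? allows simple plurals.
            if any(re.search(rf"\b{re.escape(t)}s?\b", text) for t in terms):
                counts[group] += 1
    return counts


# Invented sample posts, for illustration only.
sample_posts = [
    {"text": "Two coyotes spotted near the park"},
    {"text": "Package taken from porch"},
    {"text": "Lost dog and cat on Elm Street"},
]
print(tally_posts(sample_posts, KEYWORDS))
```

Each post is counted at most once per group, so a post mentioning both a dog and a cat adds one to the animal tally, not two.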

While the race of individuals recorded is implicitly suggested in a variety of ways, Gizmodo found 519 explicit references to blackness and 319 to whiteness. A Ring spokesperson said the Neighbors content moderators strive to eliminate unessential references to skin color. Moderators are told to remove posts, they said, in which the sole identifier of a subject is that they’re “black” or “white.”

Ring’s guidelines instruct users: “Personal attributes like race, ethnicity, nationality, religion, sexual orientation, immigration status, sex, gender, age, disability, socioeconomic and veteran status, should never be factors when posting about an unknown person. This also means not referring to a person you are describing solely by their race or calling attention to other personal attributes not relevant to the matter being reported.”

“There’s no question, if most people were followed around 24/7 by a police officer or a private investigator it would bother them and they would complain and seek a restraining order,” said Jay Stanley, senior policy analyst at the American Civil Liberties Union. “If the same is being done technologically, silently and invisibly, that’s basically the functional equivalent.”

[…]

Companies like Ring have long argued—as Google did when it published millions of people’s faces on Street View in 2007—that pervasive street surveillance reveals, in essence, no more than what people have already made public; that there’s no difference between blanketing public spaces in internet-connected cameras and the human experience of walking or driving down the street.

But not everyone agrees.

“Persistence matters,” said Stanley, while acknowledging the ACLU’s long history of defending public photography. “I can go out and take a picture of you walking down the sidewalk on Main Street and publish it on the front of tomorrow’s newspaper,” he said. “That said, when you automate things, it makes it faster, cheaper, easier, and more widespread.”

Stanley and others devoted to studying the impacts of public surveillance envision a future in which Americans’ very perception of reality has become tainted by a kind of omnipresent observer effect. Children will grow up, it’s feared, equating the act of being outside with being recorded. The question is whether existing in this observed state will fundamentally alter the way people naturally behave in public spaces—and if so, how?

“It brings a pervasiveness and systematization that has significant potential effects on what it means to be a human being walking around your community,” Stanley said. “Effects we’ve never before experienced as a species, in all of our history.”

The Ring data has given Gizmodo the means to consider scenarios, no longer purely hypothetical, which exemplify what daily life is like under Amazon’s all-seeing eye. In the nation’s capital, for instance, walking the shortest route from one public charter school to a soccer field less than a mile away, 6th-12th graders are recorded by no fewer than 13 Ring cameras.

Gizmodo found that dozens of users in the same Washington, DC, area have used Neighbors to share videos of children. Thirty-six such posts describe mostly run-of-the-mill mischief—kids with “no values” ripping up parking tape, riding on their “dort-bikes” [sic] and taking “selfies.”

Ring’s guidelines state that users are supposed to respect “the privacy of others,” and not upload footage of “individuals or activities where a reasonable person would expect privacy.” Users are left to interpret this directive themselves, though Ring’s content moderators are said to actively comb through the posts, and users can flag “inappropriate” posts for review.

Ángel Díaz, an attorney at the Brennan Center for Justice focusing on technology and policing, said the “sheer size and scope” of the data Ring amasses is what separates it from other forms of public photography.

[…]

Guariglia, who’s been researching police surveillance for a decade and holds a PhD in the subject, said he believes the hidden coordinates invalidate Ring’s claim that only users decide “what information, if any,” gets shared with police, whether or not police have acquired it yet.

“I’ve never really bought that argument,” he said, adding that if they truly wanted, the police could “very easily figure out where all the Ring cameras are.”

The Guardian reported in August that Ring once shared maps with police depicting the locations of active Ring cameras. CNET reported last week, citing public documents, that police partnered with Ring had once been given access to “heat maps” that reflected the areas where cameras were generally concentrated.

The privacy researcher who originally obtained the heat maps, Shreyas Gandlur, discovered that if police zoomed in far enough, circles appeared around individual cameras. However, Ring denied that the maps accurately portrayed the locations of customers. The company said the maps displayed “approximate device density” and that it had instructed police not to share them publicly.

Source: Ring’s Neighbors Data Let Us Map Amazon’s Home Surveillance Network