[…]

Russian mobsters, Chinese hackers and Nigerian scammers have used stolen identities to plunder tens of billions of dollars in Covid benefits, spiriting the money overseas in a massive transfer of wealth from U.S. taxpayers, officials and experts say. And they say it is still happening.

Among the ripest targets for the cybertheft have been jobless programs. The federal government cannot say for sure how much of the more than $900 billion in pandemic-related unemployment relief has been stolen, but credible estimates range from $87 billion to $400 billion, at least half of which went to foreign criminals, law enforcement officials say.

Those staggering sums dwarf, even on the low end, what the federal government spends every year on intelligence collection, food stamps or K-12 education.

“This is perhaps the single biggest organized fraud heist we’ve ever seen,” said security researcher Armen Najarian of the firm RSA, who tracked a Nigerian fraud ring as it allegedly siphoned millions of dollars out of more than a dozen states.

Jeremy Sheridan, who directs the office of investigations at the Secret Service, called it “the largest fraud scheme that I’ve ever encountered.”

“Due to the volume and pace at which these funds were made available and a lot of the requirements that were lifted in order to release them, criminals seized on that opportunity and were very, very successful — and continue to be successful,” he said.

While the enormous scope of Covid relief fraud has been clear for some time, scant attention has been paid to the role of organized foreign criminal groups, who move taxpayer money overseas via laundering schemes involving payment apps and “money mules,” law enforcement officials said.

“This is like letting people just walk right into Fort Knox and take the gold, and nobody even asked any questions,” said Blake Hall, the CEO of ID.me, which has contracts with 27 states to verify identities.

Officials and analysts say both domestic and foreign fraudsters took advantage of an already weak system of unemployment verification maintained by the states, which has been flagged for years by federal watchdogs. Adding to the vulnerability, states made it easier to apply for Covid benefits online during the pandemic, and officials felt pressure to expedite processing. The federal government also rolled out new benefits for contractors and gig workers that required no employer verification.

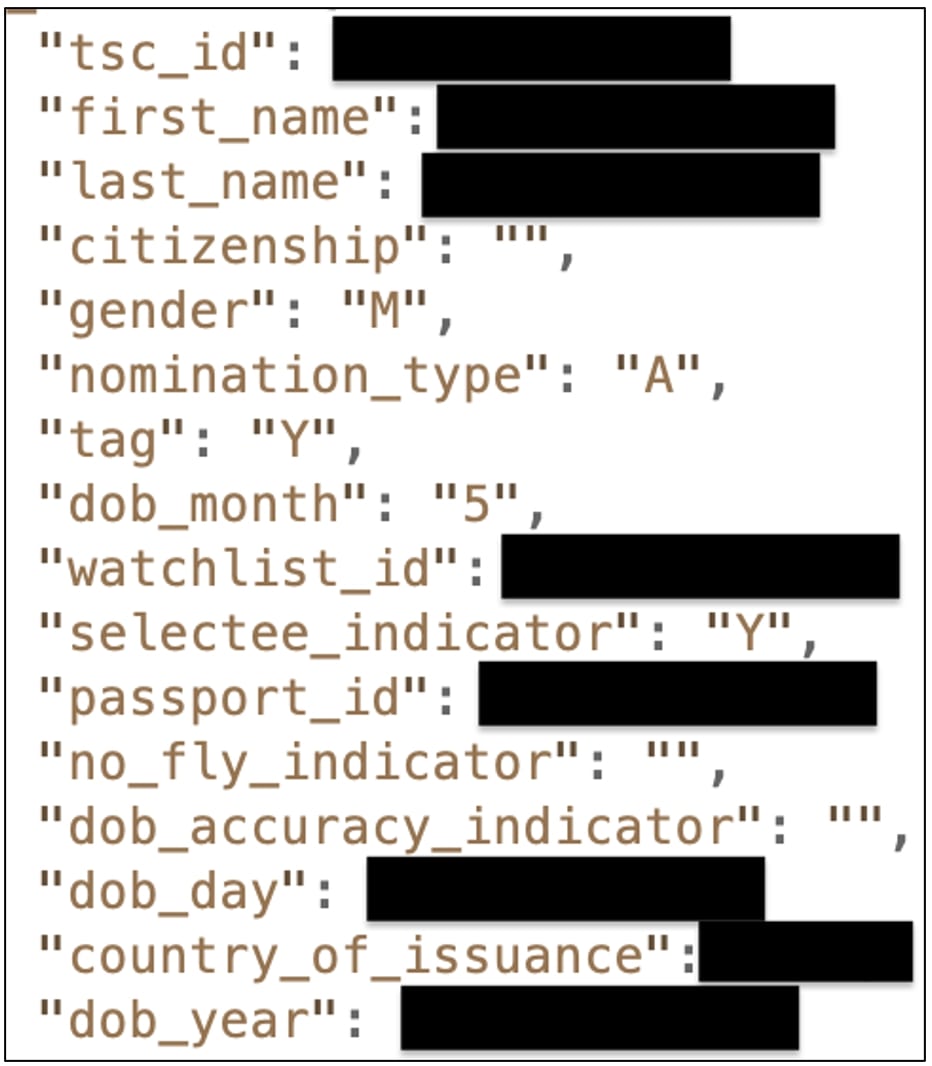

In that environment, crooks were easily able to impersonate jobless Americans using stolen identity information for sale in bulk in the dark corners of the internet. The data — birthdates, Social Security numbers, addresses and other private information — have accumulated online for years through huge data breaches, including hacks of Yahoo, LinkedIn, Facebook, Marriott and Experian.

At home, prison inmates and drug gangs got in on the action. But experts say the best-organized efforts came from abroad, with criminals from nearly every country swooping in to steal on an industrial scale.

[…]

Under the Pandemic Unemployment Assistance program for gig workers and contractors, people could apply for retroactive relief, claiming months of joblessness with no employer verification possible. In some cases, that meant checks or debit cards worth $20,000, Hall said.

“Organized crime has never had an opportunity where any American’s identity could be converted into $20,000, and it became their Super Bowl,” he said. “And these states were not equipped to do identity verification, certainly not remote identity verification. And in the first few months and still today, organized crime has just made these states a target.”

[…]

The investigative journalism site ProPublica calculated last month that from March to December 2020, the number of jobless claims added up to about two-thirds of the country’s labor force, when the actual unemployment rate was 23 percent. Although some people lose jobs more than once in a given year, that alone could not account for the vast disparity.

The thievery continues. In June, for example, Maryland detected more than half a million potentially fraudulent unemployment claims filed in May and June alone. Most of the attempts were blocked, but experts say that nationwide, many are still getting through.

The Biden administration has acknowledged the problem and blamed it on the Trump administration.

[…]

In a memo in February, the inspector general reported that as of December, 22 of 54 state and territorial workforce agencies were still not following its repeated recommendation to join a national data exchange to check Social Security numbers. And in July, the inspector general reported that the national association of state workforce agencies had not been sharing fraud data as required by federal regulations.

Twenty states failed to perform all the required database identity checks, and 44 states did not perform all recommended ones, the inspector general found.

“The states have been chronically underfunded for years — they’re running 1980s technology,” Hall said.

[…]

The FBI has opened about 2,000 investigations, Greenberg said, but it has recovered just $100 million. The Secret Service, which focuses on cyber and economic crimes, has clawed back $1.3 billion. But the vast majority of the pilfered funds are gone for good, experts say, including tens of billions of dollars sent out of the country through money-moving applications such as Cash App.

[…]

One of the few examples in which analysts have pointed the finger at a specific foreign group involves a Nigerian fraud ring dubbed Scattered Canary by security researchers. The group had been committing cyberfraud for years when the pandemic benefits presented a ripe target, Najarian said.

[…]

Scattered Canary took advantage of a quirk in Google’s system. Gmail does not recognize dots in email addresses — John.Doe@gmail.com and JohnDoe@gmail.com are routed to the same account. But state unemployment systems treated them as distinct email addresses.

Exploiting that trait, the group was able to create dozens of fraudulent state unemployment accounts that funneled benefits to the same email address, according to research by Najarian and others at Agari.
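The dot quirk described above can be illustrated with a short Python sketch. It is not how any state system or Agari's research tooling actually works; the function names `canonical_email` and `group_by_inbox` are hypothetical, and the sketch assumes only the behavior stated in the article: Gmail ignores dots in the part of the address before the `@`.

```python
from collections import defaultdict

# Assumption: only gmail.com addresses get dot-insensitive treatment here.
GMAIL_DOMAINS = {"gmail.com"}


def canonical_email(address: str) -> str:
    """Return a normalized form so that dot variants of one Gmail inbox match."""
    cleaned = address.strip().lower()
    local, sep, domain = cleaned.rpartition("@")
    if not sep:
        # Not a well-formed address; return it unchanged apart from lowercasing.
        return cleaned
    if domain in GMAIL_DOMAINS:
        # Gmail routes John.Doe and JohnDoe to the same account.
        local = local.replace(".", "")
    return f"{local}@{domain}"


def group_by_inbox(addresses):
    """Group submitted addresses by canonical form; keep only shared inboxes."""
    groups = defaultdict(list)
    for addr in addresses:
        groups[canonical_email(addr)].append(addr)
    return {inbox: variants for inbox, variants in groups.items() if len(variants) > 1}


if __name__ == "__main__":
    submitted = [
        "John.Doe@gmail.com",
        "JohnDoe@gmail.com",
        "j.o.h.n.doe@gmail.com",
        "jane.roe@example.com",
    ]
    for inbox, variants in group_by_inbox(submitted).items():
        print(inbox, "<-", variants)
```

A system that compared the raw strings would see three distinct Gmail applicants in this list, while comparing the canonical forms shows that all three deliver to one inbox.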

In April and May of 2020, Scattered Canary filed at least 174 fraudulent claims for unemployment benefits with the state of Washington, Agari found — each claim eligible to receive up to $790 a week, for a total of $20,540 over 26 weeks. With the addition of the $600-per-week Covid supplement, the maximum potential loss was $4.7 million for those claims alone, Agari found.
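The arithmetic behind those figures can be checked with a few lines of Python. The article does not say how many weeks of the $600 supplement Agari counted, so the sketch below does not assume a number; it works backward from the rounded $4.7 million total to see what it implies. All variable names are illustrative.

```python
# Figures quoted in the article.
WEEKLY_BENEFIT = 790           # maximum weekly state benefit, in dollars
BENEFIT_WEEKS = 26             # maximum duration
CLAIMS = 174                   # fraudulent Washington claims attributed to the group
SUPPLEMENT_PER_WEEK = 600      # federal Covid supplement, in dollars
REPORTED_MAX_LOSS = 4_700_000  # Agari's rounded maximum potential loss

# State benefit per claim and across all claims.
per_claim_base = WEEKLY_BENEFIT * BENEFIT_WEEKS
base_exposure = CLAIMS * per_claim_base

# Whatever remains of the reported total must come from the supplement.
implied_supplement = REPORTED_MAX_LOSS - base_exposure
# Not stated in the article: inferred from the rounded total, so approximate.
implied_supplement_weeks = implied_supplement / (CLAIMS * SUPPLEMENT_PER_WEEK)

print(f"Per-claim state benefit: ${per_claim_base:,}")
print(f"State benefit across all claims: ${base_exposure:,}")
print(f"Implied supplement weeks per claim: {implied_supplement_weeks:.1f}")
```

The per-claim figure matches the $20,540 in the text, and the state benefits alone come to about $3.57 million. The remaining gap to $4.7 million corresponds to roughly 11 weeks of the supplement per claim, which is an inference from the rounded total rather than a figure reported by Agari.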

[…]

This visualization was created in **R** using the **rayrender** and **rayshader** packages to render the 3D image, and **ffmpeg** to combine the images into a video and add text. You can see close-ups of 6 continents in the following tweet thread:

https://twitter.com/tylermorganwall/status/1427642504082599942

The data source is the GPW-v4 population density dataset, at 15 arc-minute resolution (roughly 30 km at the equator).

Data:

https://sedac.ciesin.columbia.edu/data/collection/gpw-v4

Rayshader:

http://www.github.com/tylermorganwall/rayshader

Rayrender:

http://www.github.com/tylermorganwall/rayrender

Here’s a link to the R code used to generate the visualization:

https://gist.github.com/tylermorganwall/3ee1c6e2a5dff19aca7836c05cbbf9ac