Sam Newman, a consultant and author specialising in microservices, told a virtual crowd at dev conference GOTOpia Europe that serverless, not Kubernetes, is the best abstraction for deploying software.

Newman is an advocate for cloud. “We are so much in love with the idea of owning our own stuff,” he told attendees. “We end up in thrall to these infrastructure systems we build for ourselves.”

He is therefore a sceptic when it comes to private cloud. “AWS showed us the power of virtualization and the benefits of automation via APIs,” he said. Then came OpenStack, which sought to bring those same qualities on-premises. It is one of the biggest open-source projects in the world, he said, but a “false hope… you still fundamentally have to deal with what you are running.”

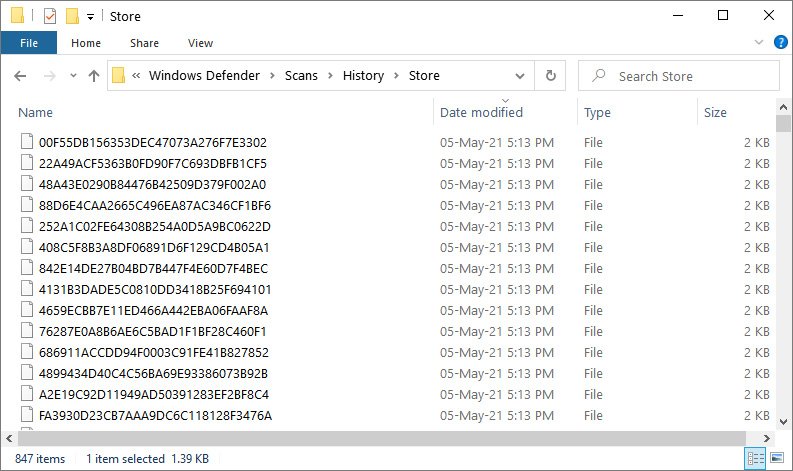

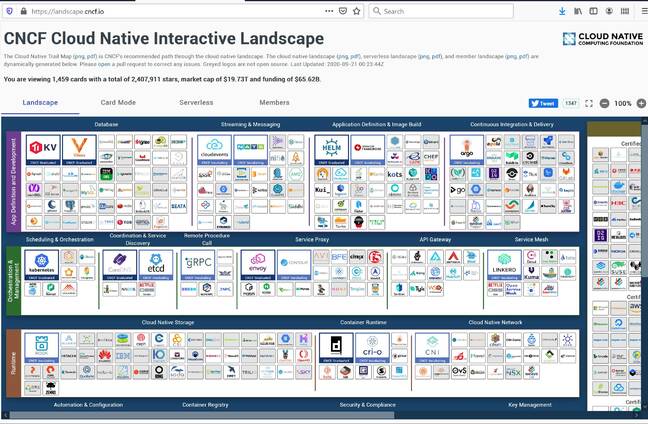

The CNCF ‘landscape’ illustration of cloud native shows how complex Kubernetes and its ecosystem have become

What is the next big thing? Kubernetes? “Kubernetes is great if you want to manage container workloads,” said Newman. “It’s not the best thing for managing container workloads. It’s great for having a fantastic ecosystem around it.”

As he continued, it turned out he has reservations. “It’s like a giant thing with lots of little things inside it, all these pods, like a termite mound. It’s a big giant edifice, your Kubernetes cluster, full of other moving parts… a lot of organisations incrementing their own Kubernetes clusters have found their ability to deliver software hollowed out by the fact that everybody now has to go on Kubernetes training courses.”

Newman illustrated his point with a reference to the CNCF (Cloud Native Computing Foundation) diagram of the “cloud native landscape”, which is crowded with projects and products.

Kubernetes on private cloud is “a towering edifice of stuff,” he said. The stack runs from hardware through the operating system, the virtualization layer, the operating system inside VMs and container management, and only on top of that “you finally get to your application… you spend your time and money looking after those things. Should you be doing any of that?”

Going to public cloud and using either managed VMs, or a managed Kubernetes service like EKS (Amazon), AKS (Azure) or GKE (Google), or other ways of running containers, takes away much of that burden; but Newman argued that it is serverless, rather than Kubernetes, that “changes how we think about software… you give your code to the platform and it works out how to execute it on your behalf,” he said.

What is serverless?

“The key characteristics of a serverless offering is no server management. I’m not worried about the operating systems or how much memory these things have got; I am abstracted away from all of that. They should autoscale based on use… implicitly I’m presuming that high availability is delivered by the serverless product. If we are using public cloud, we’d also be expecting a pay as you go model.”

“Many people erroneously conflate serverless and functions,” said Newman, since the term is associated with services like AWS Lambda and Azure Functions. Serverless “has been around longer than we think,” he added, referencing AWS Simple Storage Service (S3), launched in 2006, as well as messaging solutions and database managers such as AWS DynamoDB and Azure Cosmos DB.

But he conceded that serverless has restrictions. With functions as a service (FaaS), there are limits on which programming languages, and which versions of them, developers can use, especially in Google’s Cloud Functions, which has “very few languages supported”.

Functions are inherently stateless, which impacts the programming model – though Microsoft has been working on durable functions. Another issue is that troubleshooting can be harder because the developer is further removed from the low level of what happens at runtime.
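To make the statelessness point concrete, here is a minimal sketch of a function that keeps nothing between invocations and pushes all state to an external store. The `ExternalStore` class and `handle_visit` function are hypothetical names invented for illustration, not any provider's API; the in-memory dict merely stands in for a managed database.

```python
from typing import Dict


class ExternalStore:
    """Hypothetical stand-in for a managed database.

    In a real deployment this would be a network call to a service,
    not a local dict.
    """

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> int:
        return self._data.get(key, 0)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def handle_visit(event: dict, store: ExternalStore) -> dict:
    """Stateless handler: all input comes from the event or the store."""
    user = event["user"]
    count = store.get(user) + 1
    store.put(user, count)
    return {"user": user, "visits": count}
```

Because the handler holds no state of its own, the platform is free to run any number of copies, or none, between requests. The cost is that every piece of state becomes a round trip to something outside the function.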

“FaaS is the best abstraction we have come up with for how we develop software, how we deploy software, since we had Heroku,” said Newman. “Kubernetes is not developer-friendly.”

FaaS, said Newman, is “going to be the future for most of us. The question is whether or not it’s the present. Some of the current implementations do suck. The usability of stuff like Lambda is way worse than it should be.”

Despite the head start AWS had with Lambda, Newman said that Microsoft is catching up with serverless on Azure. He is more wary of Google, arguing that it is too dependent on Knative and Istio for delivering serverless, neither of which is, in his view, yet mature. He also thinks that Google’s decision not to develop Knative inside the CNCF is a mistake and will hold it back from adapting to the needs of developers.

How does serverless link with Newman’s speciality, microservices? Newman suggested getting started with a one-to-one mapping, taking existing microservices and running each of them as a single function. “People go too far too fast,” he said. “They think, it makes it really easy for me to run functions, let’s have a thousand of them. That way lies trouble.”

Further breaking down a microservice into separate functions might make sense, he said, but you can “hide that detail from the outside world… you might change your mind. You might decide to merge those functions back together again, or strip them further apart.”

The microservice should be a logical unit, he said, and FaaS an implementation detail.
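One way to picture this advice is the sketch below, in which callers talk to a single logical service while the number of functions behind it can change. Everything here (`OrderService`, `order_function`, the `action` field) is a hypothetical illustration, not code from Newman's talk or any FaaS platform.

```python
from typing import Callable, Dict

Event = Dict[str, str]
Function = Callable[[Event], Event]


def create_order(event: Event) -> Event:
    return {"status": "created", "order_id": event["order_id"]}


def cancel_order(event: Event) -> Event:
    return {"status": "cancelled", "order_id": event["order_id"]}


def order_function(event: Event) -> Event:
    """One-to-one mapping: the whole microservice deployed as one function."""
    if event["action"] == "create":
        return create_order(event)
    if event["action"] == "cancel":
        return cancel_order(event)
    return {"status": "error", "reason": "unknown action"}


class OrderService:
    """The logical microservice. Callers only ever see `call`."""

    def __init__(self, routes: Dict[str, Function]) -> None:
        self._routes = dict(routes)

    def call(self, event: Event) -> Event:
        function = self._routes.get(event["action"])
        if function is None:
            return {"status": "error", "reason": "unknown action"}
        return function(event)


# Start with one function behind the service...
one_to_one = OrderService({"create": order_function, "cancel": order_function})
# ...and later split it (or merge it back) without callers noticing.
split = OrderService({"create": create_order, "cancel": cancel_order})
```

Both `one_to_one` and `split` return the same results for the same events, so the choice between one function and several stays an internal decision that can be reversed later.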

Despite being an advocate of public cloud, Newman recognises non-technical concerns. “More power is being concentrated in a small number of hands,” he said. “Those are socio-economic concerns that we can have conversations about.”

Amid all the Kubernetes hype, has serverless received too little attention? If you believe Newman, this is the case. The twist, perhaps, is that some serverless platforms actually run on Kubernetes, explicitly so in the case of Google’s platform.