Given Singapore’s reputation for being an unabashed surveillance state, a passenger on a Singapore Airlines (SIA) flight could be forgiven for being a little paranoid.

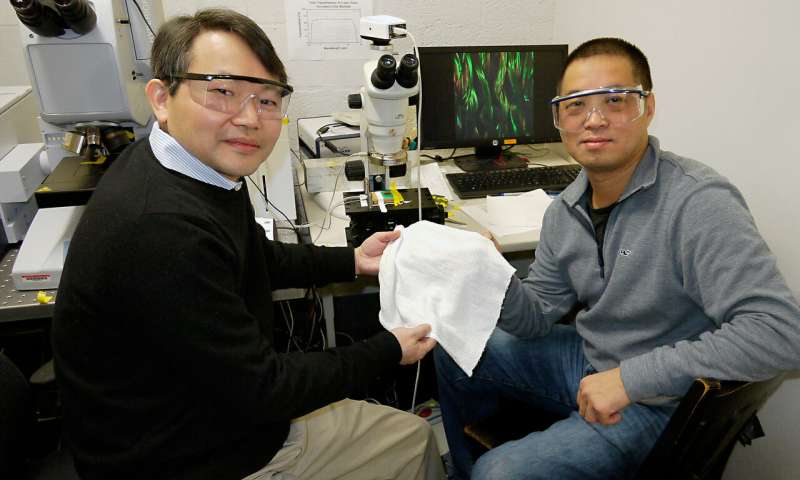

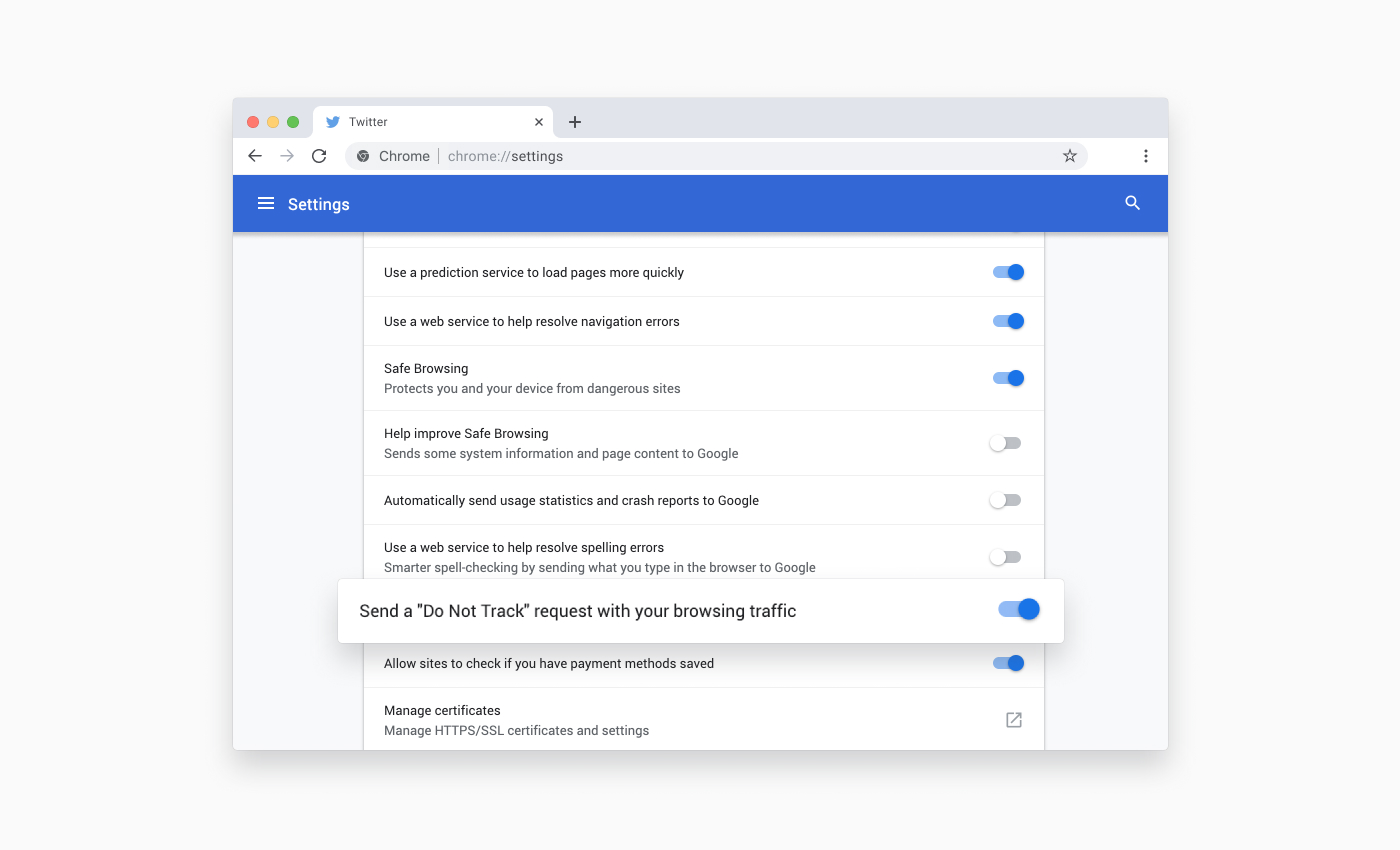

Vitaly Kamluk, an information security expert and a high-ranking executive of cybersecurity company Kaspersky Lab, went on Twitter with concerns about an embedded camera in SIA’s inflight entertainment systems. He tagged SIA in his post on Sunday, asking the airline to clarify how the camera is being used.

SIA quickly responded, telling Kamluk that the cameras have been disabled and that it has no plans to use them in the future. While not all of its devices have a camera, SIA said that some of its newer inflight entertainment systems come with cameras embedded in the hardware. Left unexplained was how the camera-equipped entertainment systems had come to be purchased in the first place.

In another tweet, SIA said that the original equipment manufacturers had built the cameras into the newer inflight entertainment systems.

Kamluk recommended disabling the cameras physically, with stickers for example, to provide better peace of mind.

Could cameras built into inflight entertainment systems actually be used as a feature, though? It’s possible, according to Panasonic Avionics. Back in 2017, the inflight entertainment device developer mentioned that it was studying how eye tracking could be used for a better passenger experience. Cameras can also be used for identity recognition on planes, which in turn would allow for in-flight biometric payment (much like Face ID on Apple devices) and personalized services.

It’s a long shot, but SIA could actually utilize such systems in the future. The camera’s already there, anyway.

Source: Cybersecurity expert questions existence of embedded camera on SIA’s inflight entertainment systems