The latest release of antiX is Linux how it used to be, in the good way. It’s not the friendliest, but it does everything – and, wow, it’s fast.

The “proudly antifascist” antiX project has released its latest edition, based on Debian 12. This release is codenamed Arditi del Popolo – “the People’s Daring Ones” – after a 1920s Italian antifascist group formed to resist Mussolini’s fascist movement. antiX is not, as the name might imply, opposed to the X window system: its main editions are graphical, with a choice of environments (although there is a super-minimal, text-only edition if that’s what you want).

Instead, antiX seems to be opposed to pretty much all of the modern trends in desktop Linux, the sorts of technologies that old-timers often consider bloated or inefficient. It doesn’t use systemd or elogind. It doesn’t have Wayland, or heavyweight cross-distro packaging tools such as Flatpak or Snap. It doesn’t even have any of the standard desktop environments. By antiX standards, we suspect that a “desktop environment” would count as bloat.

(If you prefer a familiar desktop, then antiX 23 is one of the parent distros of MX Linux 23, which offers both Xfce and KDE variants.)

Instead of an integrated desktop, antiX provides a broad selection of tools that provide all the functionality of a desktop: app launchers, status monitors, wireless networking, file managers, whatever you need. Not only is it present, but you get a selection of alternatives, and in many cases there are both graphical and shell-based tools available. Despite all this, the 64-bit edition with kernel 6.1 still idles at under 200MB of memory in use, which is startlingly good for a 2023 distro. The Reg standard recommendation for a lightweight desktop Linux is the Raspberry Pi Desktop, which is based on Debian 11 and LXDE. antiX is built from newer components, but even so it uses less memory and it’s faster too.

So in a way, it reminds The Reg FOSS Desk of the good aspects of Linux the way it was in the 20th century. The full edition comes with lots of applications, including a few of the standard big names, such as Firefox ESR and LibreOffice. Aside from them, though, most are less well-known alternatives, ones that are smaller, faster, and take less memory.

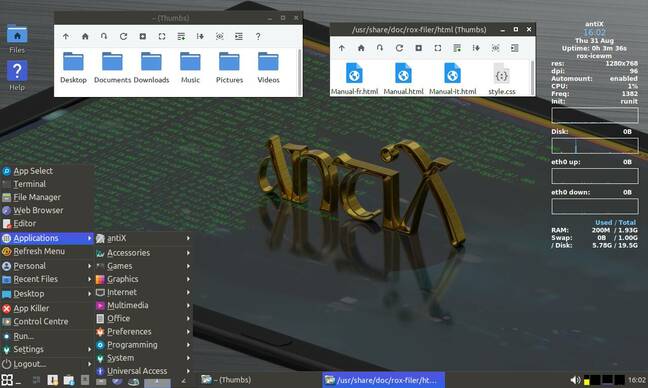

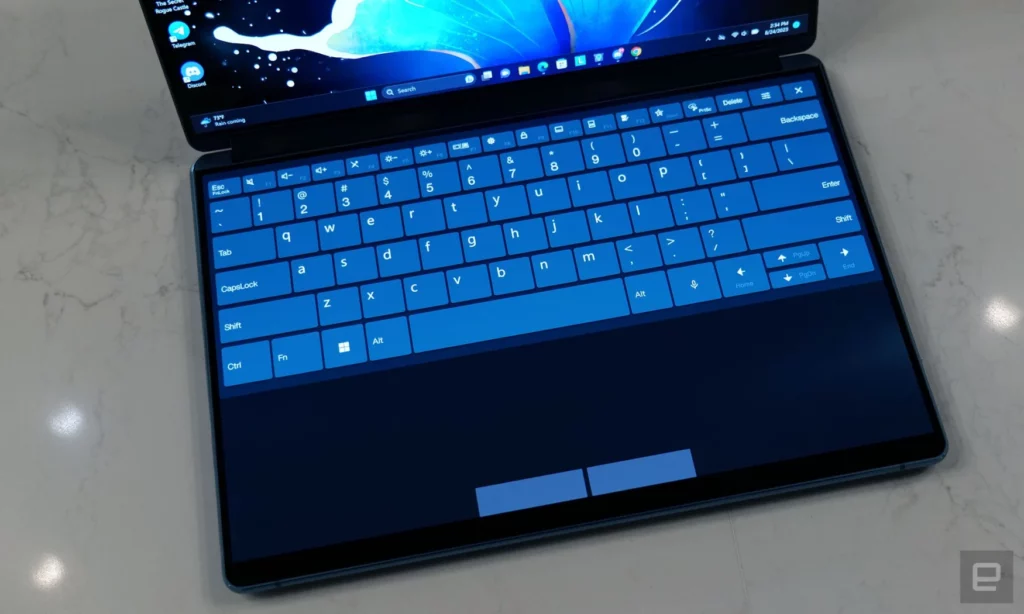

antiX 23 with IceWM and a couple of ROX Filer windows open. Looks like a desktop, works like a desktop – but faster

What’s missing are the bad parts. From modern Linux, the multiple huge, lumbering tools, all too often written in relatively sluggish interpreted programming languages, each of which pulls in a gigabyte of dependencies; and worse still, allegedly “local applications” which are actually web applets implemented in JavaScript, so each tool drags an entire embedded web browser around with it. And from 1990s Linux, the rough edges: this is a modern distro, with modern hardware support, and the standard installation gives you a complete graphical environment with sound, networking and so on all pre-configured and working.

It stands in contrast to most other contemporary minimal distros such as Alpine Linux, Arch Linux or Void Linux, to pick some random examples. While these are all very capable distros, you must do a substantial amount of manual setup and configuration post-installation if you want a graphical desktop and the usual assortment of text editors, media players, communications tools, and so on. They also have their own idiosyncratic packaging tools, so to get started with customizing your new distro, you’ll probably have to spend some time on Google finding the commands and their syntax.

antiX is based on Debian, which, as we said when celebrating its 30th birthday recently, is the most widely used family of Linux distros there is – so it uses the familiar apt commands for managing software.

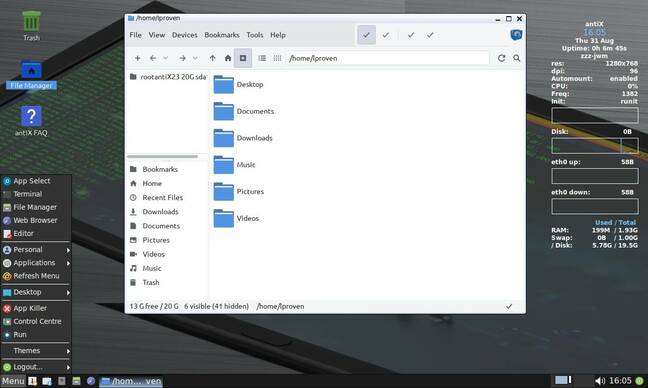

antiX 23 with JWM and the zzzFM file manager. It’s different, but not very. We’re not convinced it really needs both

So it’s a cut-down Debian “Bookworm”, with some of the controversial bits – such as systemd and the fancy desktop environments – taken out. You get a choice of two init systems: the default sysvinit or the more modern runit. These aren’t installation options, as they are in Devuan, say: you must choose and download the appropriate installation image. There are both 32-bit and 64-bit x86 editions.

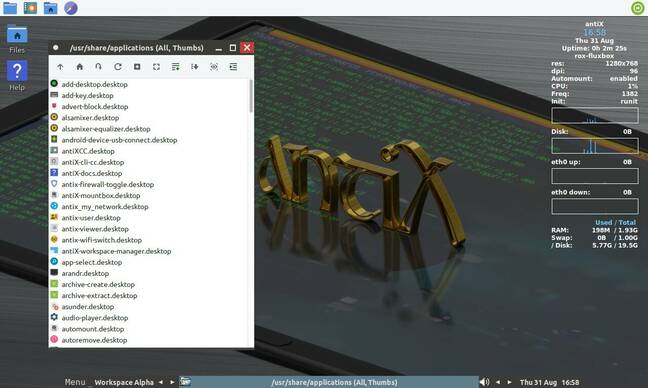

The full edition offers four window managers: IceWM, JWM, Fluxbox, and Herbstluftwm. IceWM offers a fairly rich Windows-like setup, with a taskbar, start menu, and some preconfigured system monitors and applets. JWM offers a more basic, no-frills version of the same layout. Fluxbox drops all that stuff for an even more minimalistic overlapping window manager. All include the Conky desktop status display. Finally, Herbstluftwm is an extremely minimal tiling window manager.

But the choices don’t end there. antiX also includes two different file managers, ROX Filer and zzzFM, both of which provide desktop icons and multi-folder-window style navigation. Optionally, ROX Filer has its own desktop panel too, for an approximate simulation of the RISC OS desktop, which means you get two different desktop panels.

There are also “minimal” login options, which don’t load a file manager. This means the (extremely basic) slimski login screen offers no fewer than 13 desktop options.

This is emblematic of the main issue with antiX: if anything, it offers too much choice. There are full, light, and minimal editions; sysvinit and runit editions; and i686 and x86-64 editions. There are over a dozen different combinations of window manager and file manager. The top-level app menu has 14 entries, with both a “Control Centre” and a “Settings” submenu. One of the menu entries is called “Applications” and contains the usual hierarchical list of apps, but some are also on the top level, and there’s a “Personal” menu where you can pin your favourites. This is accessible from the Start button analog in the two window managers which have one, and by right-clicking the desktop in all three which have a desktop. For all the main app categories – text editors, web browsers, media players, and so on – there are multiple options, sometimes three or four of them.

Considering that this is one of the most lightweight Linux distros, it’s an embarrassment of riches. There are so many options, choices, themes, and settings, most of them with multiple ways to get at them, that even for an experienced user, it’s bewildering. There are even 16 different downloads on offer: Full, Base, Core, and Net, two init systems, and two CPU architectures.
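For anyone who wants to check that arithmetic, here is a minimal Python sketch that multiplies out the download matrix. The edition, init system, and architecture names come from the paragraph above; the label format and the `download_matrix` helper are our own illustrative assumptions, not the project’s real ISO file names.

```python
from itertools import product

# Names taken from the article text. The label format below is purely
# illustrative and is NOT the project's actual ISO naming scheme.
EDITIONS = ["Full", "Base", "Core", "Net"]
INIT_SYSTEMS = ["sysvinit", "runit"]
ARCHITECTURES = ["32-bit", "64-bit"]


def download_matrix():
    """Return one label per edition / init system / architecture combination."""
    return [
        f"{edition}, {init}, {arch}"
        for edition, init, arch in product(EDITIONS, INIT_SYSTEMS, ARCHITECTURES)
    ]


if __name__ == "__main__":
    combos = download_matrix()
    for label in combos:
        print(label)
    print(len(combos), "downloads")
```

Four editions times two init systems times two architectures is where the 16 comes from, and that is before you reach the choice of window manager and file manager at the login screen.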

The Fluxbox window manager, with its virtual desktop switcher control at the bottom, and ROX Session’s panel at the top. With some tweaking, it could be very like RISC OS

While with Alpine or Void, you can achieve an extremely lightweight, fully graphical desktop system, you must do this by installing and configuring most of it yourself. With antiX, to get to a setup you are happy with, you will still have to do quite a lot of custom configuration, but it will be removing tools that you don’t want. Of course, there are package management tools to help you do that: there’s Package Installer, and Program Remover, and Synaptic, and a menu-driven shell-based package manager, and of course apt – and apt-get and aptitude.

When you download, install, and boot antiX, it feels amazingly tiny and fast by modern standards. We have the older release 21 on our elderly Atom-based Sony Vaio P, and it makes that geriatric sub-netbook feel sprightly. Then you log in, start to browse the application menu, and find a Swiss army knife, where there’s a tool for everything. The trouble is, each blade unfolds to reveal another Swiss army knife. It’s almost fractal.

Back when Ubuntu first launched in 2004, it scored over Debian because someone had done the curation of programs for you. You got what was arguably the best completely FOSS desktop at the time, GNOME 2, and one best-of-breed app in each category of essential program – one web browser, one email client, one media player, and so on, all nicely set up and integrated into a harmonious whole. And when it started out, it was relatively slim and lightweight and fast. With Debian, you had to choose all this for yourself, which gives you great freedom, but requires considerable expertise, and the result might not feel very coherent and might need quite some fine-tuning. Now, both are pretty big, and these days Ubuntu offers a choice of 10 different desktop flavors, plus Server and Core and container images and more.

This is where MX Linux scores over this, its much smaller parent distro. The MX team does that curation for you. With antiX, you get the freedom to pick and choose from a profusion of tools, many of which you’ve probably never heard of and so wouldn’t know to install. But you will probably want to break out the hammer and chisel, and sculpt it down into something you find pleasing.

It’s a very interesting distro, if you know a bit of what you’re doing and want to learn and experiment and customize it. It’s also very lightweight in resource usage, and will run well on some ancient hardware that most modern distros won’t even attempt to boot on.

But we can’t help but feel that, as its name hints, it’s a bit anarchic. It feels designed by committee, where everyone got their choices included. Some judicious pruning and selection would really help buff it to a shine.